Abstract

In this paper, we study the rate of pointwise approximation for the neural network operators of the Kantorovich type. This result is obtained by proving a certain asymptotic expansion for the above operators and then by establishing a Voronovskaja type formula. A central role in the above results is played by the truncated algebraic moments of the density functions generated by suitable sigmoidal functions. Furthermore, to improve the rate of convergence, we consider finite linear combinations of the above neural network type operators, and also in the latter case, we obtain a Voronovskaja type theorem. Finally, concrete examples of sigmoidal activation functions are discussed in detail, together with the case of the rectified linear unit (ReLu) activation function, which is widely used in connection with deep neural networks.

1 Introduction

The theory of neural network (NN) operators dates back to 1992, with the pioneering work of Cardaliaguet and Euvrard [15]; in the following years, these operators have been extensively studied by several authors from different points of view, see, e.g., [12, 13, 32, 33]. The main advantage of this theory lies in its connection with artificial neural networks and their applications to Approximation Theory. For an overview of applications of neural networks and learning theory, see, e.g., the comprehensive monograph of Cucker and Zhou [29], and also [9, 37, 42, 43, 46, 47, 48, 49, 53, 56].

A generalization to the \(L^p\)-setting of the above NN operators has been proved in [21]; here, the authors showed that any multivariate \(L^p\)-data can be approximated in the \(L^p\)-norm by means of the NN operators of the Kantorovich-type. However, even if their natural settings are the \(L^p\)-spaces, the above operators converge also pointwise and uniformly when continuous functions are considered.

Such operators are called “of Kantorovich-type”, since their coefficients are defined by means of suitable averages of the function f being approximated.

Usually, the activation function of the NN operators is a sigmoidal function ([30]); the reason is that a sigmoidal curve makes it possible to simulate the two states of biological neurons, namely the activated and the non-activated ones, see, e.g., [9, 38, 39, 44].

Recently, a new unbounded activation function has also been investigated, namely the so-called ReLu (rectified linear unit) activation function, defined as the positive part of x, for every \(x \in {\mathbb {R}}\). The ReLu activation function has linear growth as x goes to \(+\infty \) and has proved to be very suitable for training deep (i.e., multi-layer) neural networks, see, e.g., [31, 51].

Due to its definition, it is easy to show that the ReLu activation function can be used to express the well-known ramp (or cut) sigmoidal function (see [36]); furthermore, it is also well known that the ramp function can be considered as the activation function in the NN operators. Then, the above relation implies that also the ReLu activation function can be used in NN operators.

The main purpose of this paper is to study the pointwise rate of approximation for the NN operators of the Kantorovich type. This aim is pursued by adopting the following strategy: first of all, an asymptotic expansion for the above operators is established, and then, a Voronovskaja type theorem is proved.

The asymptotic formula allows us to expand the above approximation operators, when they are evaluated at a sufficiently smooth function f, in terms of the derivatives of f evaluated at suitable nodes. A central role in the asymptotic expansion is played by the truncated algebraic moments of the density function \(\phi _{\sigma }\left( x\right) \); the density function is generated by suitable sigmoidal functions and it can be considered as the kernel of the operators.

The asymptotic formula can be used to prove a Voronovskaja-type theorem (see, e.g., [6,7,8]), from which we establish the rate of pointwise approximation of the operators under investigation.

In general, Voronovskaja formulas are widely studied in Approximation Theory (see, e.g., [4, 10, 34, 54]), both from the qualitative and quantitative point of view (see, e.g., [1, 50]), also for their relations with the saturation phenomenon for families of linear operators (see [27, 35]).

Furthermore, to improve the above convergence results and to obtain a family of NN operators with a higher order of approximation, we also consider suitable finite linear combinations of the above NN operators of the Kantorovich type. For these families of operators as well, we derive asymptotic and Voronovskaja type formulas, following the same steps described above.

Finally, we discuss in detail several examples of sigmoidal functions for which the above results hold. In particular, we consider the cases of the logistic and the hyperbolic tangent sigmoidal functions, which are of crucial importance in the theory of learning artificial neural networks, and the case of the sigmoidal functions generated by the central B-splines, which are useful to construct high-order convergence NN operators.

2 Notations and Preliminary Results

In this paper, we denote by \(C\left( I\right) \) the space of all functions \(f\,:\,I\rightarrow {\mathbb {R}}\) which are continuous on \(I:=\left[ -1,1\right] \) and we denote by \(C^{r}\left( I\right) \), \(r\in {\mathbb {N}}^{+}\), the space of functions of \(C\left( I\right) \) which have continuous derivatives \(f^{\left( s\right) }\) on I, for every \(1\le s\le r,\,s\in {\mathbb {N}}^{+}.\) The above spaces are endowed with the usual max-norm \(\left\| \cdot \right\| _{\infty }\). Now, denoting by \(\Phi \,:\,{\mathbb {R}}\rightarrow {\mathbb {R}}\) a given function, we can recall the following definitions.

Definition 1

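Let \(h \in {\mathbb {N}}\) be fixed. We define the truncated algebraic moment of \(\Phi \) of order h by:

\[m_{h}^{n}\left( \Phi ,u\right) :=\sum _{k=-n}^{n-1}\Phi \left( u-k\right) \left( k-u\right) ^{h},\quad u\in {\mathbb {R}},\]

for every \(n\in {\mathbb {N}}^{+}.\)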

Definition 2

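Let \(h \ge 0\) be fixed. We call discrete absolute moment of \(\Phi \) of order h the following quantity:

\[M_{h}\left( \Phi \right) :=\sup _{u\in {\mathbb {R}}}\sum _{k\in {\mathbb {Z}}}\left| \Phi \left( u-k\right) \right| \left| u-k\right| ^{h}.\]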

The discrete absolute moments of a given function are widely used to establish the convergence of families of linear operators, see, e.g., [6, 7, 55].

It is well known that, if \(\Phi \) is bounded on \({\mathbb {R}}\) and has a sufficiently fast decay to 0 as \(x\rightarrow \pm \infty \), then \(M_{h}\left( \Phi \right) <+\infty \), for suitable h, see, e.g., [19].
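As a small numerical illustration of Definitions 1 and 2, the following sketch evaluates both kinds of moments for the hat function \(\Phi (x)=\max \{0,1-|x|\}\). The hat function is chosen here only as a convenient compactly supported test kernel and is not taken from the paper. The sketch assumes that the truncated moment has the form \(\sum _{k=-n}^{n-1}\Phi (u-k)(k-u)^{h}\), and it only approximates the supremum in the discrete absolute moment on a finite grid of values of u; all helper names are hypothetical.

```python
def hat(x):
    """Hat function, used here only as an illustrative kernel (an assumption)."""
    return max(0.0, 1.0 - abs(x))


def truncated_moment(phi, h, n, u):
    """Assumed form: sum over k = -n, ..., n-1 of phi(u - k) * (k - u)**h."""
    return sum(phi(u - k) * (k - u) ** h for k in range(-n, n))


def discrete_absolute_moment(phi, h, k_max=50, grid=200):
    """Grid approximation of sup_u sum_k |phi(u - k)| * |u - k|**h.

    The sum over k is cut at |k| <= k_max and, since the full sum is
    1-periodic in u, the supremum is searched on a grid of [0, 1].
    """
    best = 0.0
    for i in range(grid + 1):
        u = i / grid
        total = sum(abs(phi(u - k)) * abs(u - k) ** h
                    for k in range(-k_max, k_max + 1))
        best = max(best, total)
    return best


if __name__ == "__main__":
    print(truncated_moment(hat, 0, 5, 0.3))   # moment of order 0
    print(truncated_moment(hat, 1, 5, 0.3))   # moment of order 1
    print(discrete_absolute_moment(hat, 1))   # absolute moment of order 1
```

For this particular kernel the moments of order 0 and 1 are constant in u, which is the kind of behaviour exploited later for the B-spline examples.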

Now, we recall the definition of sigmoidal function (see, e.g., [26, 30]).

Definition 3

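Let \(\sigma \,:\,{\mathbb {R}}\rightarrow {\mathbb {R}}\) be a measurable function. We call \(\sigma \) a sigmoidal function if:

\[\lim _{x\rightarrow -\infty }\sigma \left( x\right) =0\quad \text {and}\quad \lim _{x\rightarrow +\infty }\sigma \left( x\right) =1.\]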

In what follows, we consider non-decreasing sigmoidal functions \(\sigma \) which satisfy the following conditions:

- (\(\Sigma \)1): \(\sigma \left( x\right) -1/2\) is an odd function;

- (\(\Sigma \)2): \(\sigma \in C^{2}\left( {\mathbb {R}}\right) \) is concave for \(x\ge 0\);

- (\(\Sigma \)3): \(\sigma \left( x\right) =O\left( \left| x\right| ^{-\alpha -1}\right) \) as \(x\rightarrow -\infty \) for some \(\alpha >0\).

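We now recall the definition of the following density function:

\[\phi _{\sigma }\left( x\right) :=\frac{1}{2}\left[ \sigma \left( x+1\right) -\sigma \left( x-1\right) \right] ,\quad x\in {\mathbb {R}}.\]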

The following lemma summarizes some useful properties of the function \(\phi _{\sigma }\).

Lemma 4

(i) \(\phi _{\sigma }\left( x\right) \ge 0\) for every \(x\in {\mathbb {R}}\), with \(\phi _{\sigma }\left( 2\right) >0\), and moreover, \(\lim _{x\rightarrow \pm \infty }\phi _{\sigma }\left( x\right) =0\);

(ii) \(\phi _{\sigma }\left( x\right) \) is an even function;

(iii) \(\phi _{\sigma }\left( x\right) \) is non-decreasing for \(x<0\) and non-increasing for \(x\ge 0\);

(iv) \(\phi _{\sigma }\left( x\right) =O\left( \left| x\right| ^{-1-\alpha }\right) \) as \(x\rightarrow \pm \infty \) where \(\alpha \) is the positive constant of condition \(\left( \Sigma 3\right) \);

(v) Let \(x\in I\) and \(n\in {\mathbb {N}}^{+}.\) Then:

\[\sum _{k=-n}^{n-1}\phi _{\sigma }\left( nx-k\right) \ge \phi _{\sigma }\left( 2\right) >0.\]

Furthermore, if \(\sigma \) is a sigmoidal function which satisfies \(\left( \Sigma 3\right) \) for \(\alpha >r,\) for some \(r\in {\mathbb {N}}^{+}\), then:

(vi) \(M_{h}\left( \phi _{\sigma }\right) <+\infty \), for every \(0\le h \le r\);

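(vii) for every fixed \(\gamma >0\), it turns out that:

\[\lim _{n\rightarrow +\infty }\sum _{\left| u-k\right| >\gamma n}\phi _{\sigma }\left( u-k\right) \left| u-k\right| ^{h}=0,\]

uniformly with respect to \(u\in {\mathbb {R}},\) for every \(0\le h \le r;\)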

(viii) the sequences \(\left( m_{h}^{n}\left( \phi _{\sigma },u\right) \right) _{n\in {\mathbb {N}}^{+}}\) of the truncated algebraic moments of order h are equibounded on \({\mathbb {R}}\), for every \(h=0,1,\dots ,r.\)
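Some of the properties listed in Lemma 4 can be checked numerically. The sketch below does this for the logistic function, assuming that the density is \(\phi _{\sigma }(x)=\frac{1}{2}[\sigma (x+1)-\sigma (x-1)]\) and that item (v) is the lower bound \(\sum _{k=-n}^{n-1}\phi _{\sigma }(nx-k)\ge \phi _{\sigma }(2)\); both forms, like the helper names, are assumptions of this illustration. A finite check of this kind is of course not a proof.

```python
import math


def logistic(x):
    """Logistic sigmoid 1 / (1 + exp(-x)), written via tanh to avoid overflow."""
    return 0.5 * (1.0 + math.tanh(0.5 * x))


def density(sigma, x):
    """Assumed density: phi_sigma(x) = (sigma(x + 1) - sigma(x - 1)) / 2."""
    return 0.5 * (sigma(x + 1.0) - sigma(x - 1.0))


def truncated_sum(sigma, n, x):
    """Sum of phi_sigma(n*x - k) over k = -n, ..., n-1, as in item (v)."""
    return sum(density(sigma, n * x - k) for k in range(-n, n))


if __name__ == "__main__":
    points = [0.0, 0.5, 1.0, 2.0, 5.0]
    # (i), (iii): non-negative values, non-increasing on x >= 0
    print([density(logistic, t) for t in points])
    # (ii): evenness at a sample point
    print(density(logistic, 1.3), density(logistic, -1.3))
    # (v): smallest truncated sum on a grid of I, compared with phi_sigma(2)
    print(min(truncated_sum(logistic, 10, i / 10) for i in range(-10, 11)),
          density(logistic, 2.0))
```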

Remark 5

Note that, if we remove condition \((\Sigma 2)\) on \(\sigma \) and assume directly that \(\phi _{\sigma }\) satisfies assertion (iii) of Lemma 4, the theory still holds, see, e.g., [25]. As a consequence, the above theory can be applied to \(C^2\) sigmoidal functions as well as to non-smooth ones, provided that the corresponding \(\phi _{\sigma }\) satisfies (iii) of Lemma 4 and \(\phi _{\sigma }(2)>0\).

For a proof of the properties \(\left( i\right) \) - \(\left( v\right) \) see [24]; for the properties \(\left( vi\right) \) - \(\left( viii\right) \), see Lemma 2.6 of [28].

Now, we recall the definition of the NN operators of the Kantorovich type.

Definition 6

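Let \(f\,:\,I\rightarrow {\mathbb {R}}\) be a locally integrable function. The Kantorovich-type NN operators \(K_{n}\left( f,\cdot \right) \), activated by a sigmoidal function \(\sigma \), and acting on f, are defined by:

\[K_{n}\left( f,x\right) :=\frac{\sum _{k=-n}^{n-1}\left[ n\int _{k/n}^{\left( k+1\right) /n}f\left( u\right) \,du\right] \phi _{\sigma }\left( nx-k\right) }{\sum _{k=-n}^{n-1}\phi _{\sigma }\left( nx-k\right) },\quad x\in I.\]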

Clearly, for every \(n\in {\mathbb {N}}^{+}\) and \(k\in {\mathbb {Z}}\) such that \(-n\le k\le n-1\), it turns out that \(-1\le \frac{k}{n}<\frac{k+1}{n}\le 1\); the latter inequality, together with condition \(\left( v\right) \) of Lemma 4, implies that the operators are well defined, e.g., in case of locally integrable and bounded functions on I. Furthermore, it turns out that:

for all \(x\in I\).

Remark 7

It is well known that (see, e.g., [21]) the family \(K_{n}\left( f,\cdot \right) \) converges pointwise at each point of continuity of any bounded f. The convergence turns out to be uniform on I if f is continuous on the whole I.
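To make the definition concrete, the following sketch evaluates \(K_{n}(f,x)\) for the logistic activation. It assumes the operator is the ratio of \(\sum _{k=-n}^{n-1}\phi _{\sigma }(nx-k)\) times the mean value of f on \([k/n,(k+1)/n]\) to \(\sum _{k=-n}^{n-1}\phi _{\sigma }(nx-k)\), and it replaces the exact integral by a composite Simpson rule. The quadrature rule, the number of panels and the function names are choices of this illustration only.

```python
import math


def logistic(x):
    """Logistic sigmoid, written via tanh to avoid overflow."""
    return 0.5 * (1.0 + math.tanh(0.5 * x))


def density(x):
    """Assumed density: (sigma(x + 1) - sigma(x - 1)) / 2."""
    return 0.5 * (logistic(x + 1.0) - logistic(x - 1.0))


def cell_average(f, a, b, panels=8):
    """Mean value of f on [a, b] by composite Simpson (panels must be even)."""
    h = (b - a) / panels
    total = f(a) + f(b)
    for i in range(1, panels):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total / (3 * panels)


def kantorovich_nn(f, n, x):
    """Weighted mean of the cell averages of f with weights phi(n*x - k)."""
    num = 0.0
    den = 0.0
    for k in range(-n, n):
        w = density(n * x - k)
        num += w * cell_average(f, k / n, (k + 1) / n)
        den += w
    return num / den


if __name__ == "__main__":
    f = lambda t: t * t
    for n in (25, 100, 400):
        print(n, abs(kantorovich_nn(f, n, 0.3) - f(0.3)))
```

Since the weights are non-negative and normalized by their sum, the value is a convex combination of mean values of f, so constants are reproduced exactly.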

3 Asymptotic Formulas for the NN Operators of the Kantorovich Type

The main aim of this section is to study the order of pointwise approximation for the operators \(K_{n}\) by means of a Voronovskaja type theorem.

From now on, when we refer to a sigmoidal function \(\sigma \), we always consider a sigmoidal function \(\sigma \) satisfying conditions \((\Sigma 1)\), \((\Sigma 2)\), and \((\Sigma 3)\) introduced in Sect. 2.

We begin by establishing the following asymptotic expansion for the operators \(K_{n}\).

Theorem 8

Let \(\sigma \) be a sigmoidal function which satisfies assumption \(\left( \Sigma 3\right) \) with \(\alpha >r,\) for some \(r\in {\mathbb {N}}^{+}\). Moreover, let \(f\in C^{r}\left( I\right) \) be fixed. Then, the following asymptotic formula holds:

for every \(x\in I\), where \(m_{0}^{n}\left( \phi _{\sigma },nx\right) >0\), for every \(x\in I\), and \(n\in {\mathbb {N}}^{+}\).

Proof

From the local Taylor formula (for details, see, e.g., [8, p. 283]), we have:

for every \(x,u\in I\), where \(h\left( y\right) \) is a suitable bounded function (see the Notes at the end of the paper), such that \(h\left( y\right) \rightarrow 0\) as \(y\rightarrow 0\). Then, for every fixed \(x\in I\), we can write what follows:

Let us analyze the term \(I_{1}\). Using the binomial theorem and recalling that \(\sum _{k=-n}^{n-1}\phi _{\sigma }\left( nx-k\right) =m_{0}^{n}\left( \phi _{\sigma },nx\right) \), we have that:

so

Now, let us analyze the term \(I_{2}\). Let \(\varepsilon >0\) be fixed. Since \(h\left( y\right) \rightarrow 0\) as \(y\rightarrow 0\), then there exists a \(\gamma >0\), such that \(\left| h\left( y\right) \right| <\varepsilon \) if \(\left| y\right| \le \gamma .\) Hence, we can write:

As concerns \(I_{2,1}\), we observe that, if \(u\in \left[ k/n,\left( k+1\right) /n\right] \), we have:

for every sufficiently large n, and hence:

We now consider \(I_{2,2}\). By the boundedness of \(h\left( y\right) \) and arguing as in \(I_{2,1}\), we get:

for n sufficiently large, by the property \(\left( vii\right) \) of Lemma 4, and the trivial identity:

This concludes the proof. \(\square \)
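The binomial step used for \(I_{1}\) rests on an elementary identity for the mean value of \((u-x)^{s}\) over a cell \([k/n,(k+1)/n]\), namely \(n\int _{k/n}^{(k+1)/n}(u-x)^{s}\,du=\frac{1}{(s+1)n^{s}}\sum _{j=0}^{s}\binom{s+1}{j}(k-nx)^{j}\). The sketch below checks this identity in exact rational arithmetic. It is meant only as an illustration of why powers of \(k-nx\), and hence the truncated algebraic moments, appear once these terms are weighted by \(\phi _{\sigma }(nx-k)\); it does not reproduce the displayed formulas of the proof, and the function names are hypothetical.

```python
from fractions import Fraction
from math import comb


def cell_mean_power(s, n, k, x):
    """Exact value of n * integral over [k/n, (k+1)/n] of (u - x)**s du."""
    a = Fraction(k, n) - x
    b = Fraction(k + 1, n) - x
    return n * (b ** (s + 1) - a ** (s + 1)) / (s + 1)


def binomial_form(s, n, k, x):
    """The same quantity expanded in powers of t = k - n*x."""
    t = k - n * x
    return sum(comb(s + 1, j) * t ** j for j in range(s + 1)) / ((s + 1) * Fraction(n) ** s)


if __name__ == "__main__":
    x = Fraction(2, 5)
    for s in range(1, 5):
        print(s, cell_mean_power(s, 7, -3, x), binomial_form(s, 7, -3, x))
```

For \(s=1\) the identity reduces to \(\left( 2(k-nx)+1\right) /(2n)\), which is where the moments of order 0 and 1 enter the first-order term.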

As a consequence of the previous result, we can prove the following Voronovskaja type theorem.

Theorem 9

Let \(\sigma \) be a sigmoidal function which satisfies assumption \(\left( \Sigma 3\right) \) with \(\alpha >1\). Suppose that, for every \(x\in {\mathbb {R}}\):

where \(m_{h}\in {\mathbb {R}}\), \(\theta _{h}>0,\) \(h=0,1\), and the “o-term” tends to zero uniformly with respect to \(x\in {\mathbb {R}}\). Then, for any \(f\in C^{1}\left( I\right) \), we have:

where \(m_{0}>0\) in view of Lemma 4\(\left( v\right) \).

Proof

From the asymptotic formula of Theorem 8, we have:

and the result follows taking the limit as \(n\rightarrow +\infty \). \(\square \)

Remark 10

The previous theorem shows that the order of pointwise approximation by the Kantorovich NN operators is at least \(O\left( n^{-1}\right) \) as \(n\rightarrow +\infty \), when \(f\in C^{1}\left( I\right) \).

Moreover, we can observe that, under the assumption of Theorem 9, it is not hard to show that, if \(f\in C^{1}\left( I\right) \), we have:

as \(n\rightarrow +\infty \).

Thus, the best possible order of uniform approximation achievable by the operators \(K_{n}\) appears to be O(1/n), as \(n \rightarrow + \infty \). In general, a better order of approximation cannot be obtained: in the main examples of sigmoidal functions (see Sect. 5 below) we have \(m_{1}=0\), so the limit of Theorem 9 gives the exact order of approximation. This order is also slower than the one achieved by the classical NN operators (see [28]), namely \(O(1/n^{2})\), as \(n \rightarrow +\infty \).
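The O(1/n) behaviour can be observed numerically. The sketch below computes the scaled error \(n\left[ K_{n}(f,x)-f(x)\right] \) for \(f=\sin \) and the logistic activation, using the exact cell averages of the sine. It assumes the form of \(K_{n}\) and of \(\phi _{\sigma }\) used in the earlier sketches; under those assumptions, and with \(m_{0}=1\), \(m_{1}=0\), a direct first-order computation suggests that the scaled error should approach \(f'(x)/2\). This is an illustration of the remark, not a restatement of the limit in Theorem 9, and the function names are hypothetical.

```python
import math


def logistic(x):
    """Logistic sigmoid, written via tanh to avoid overflow."""
    return 0.5 * (1.0 + math.tanh(0.5 * x))


def density(x):
    """Assumed density: (sigma(x + 1) - sigma(x - 1)) / 2."""
    return 0.5 * (logistic(x + 1.0) - logistic(x - 1.0))


def kantorovich_sin(n, x):
    """K_n(sin, x) with exact cell averages.

    n * (cos(k/n) - cos((k+1)/n)) is rewritten as a product of sines
    to limit cancellation for large n.
    """
    num = den = 0.0
    for k in range(-n, n):
        w = density(n * x - k)
        mean = 2.0 * n * math.sin((2 * k + 1) / (2.0 * n)) * math.sin(1.0 / (2.0 * n))
        num += w * mean
        den += w
    return num / den


def scaled_error(n, x):
    """n * (K_n(sin, x) - sin(x))."""
    return n * (kantorovich_sin(n, x) - math.sin(x))


if __name__ == "__main__":
    x = 0.2
    for n in (100, 200, 400, 800):
        print(n, scaled_error(n, x), math.cos(x) / 2.0)
```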

4 High-Order Convergence of NN Operators of the Kantorovich Type

To construct NN operators of the Kantorovich type with higher order of approximation than \(K_{n}\), we adopt the following strategy.

Let \(r \in {\mathbb {N}}^{+},\,r>1\) and \(\alpha _{j}\in {\mathbb {R}}\setminus \left\{ 0\right\} ,\,j=1,\dots ,r\) be fixed, such that:

Let us define:

where f is any bounded locally integrable function. It is clear that condition (4) is such that \({\mathcal {K}}_{n}^{r}\) preserves the approximation properties of \(K_{n}\).

Obviously, also in this section, when we refer to \(\sigma \), we always consider sigmoidal functions satisfying \((\Sigma i)\), \(i=1, 2, 3\).

Now, we can prove the following asymptotic and Voronovskaja type formula for \({\mathcal {K}}_{n}^{r}\).

Theorem 11

Let \(\sigma \) be a sigmoidal function which satisfies assumption \(\left( \Sigma 3\right) \) with \(\alpha>r>1\), for some \(r\in {\mathbb {N}}^{+}\). Moreover, let \(f\in C^{r}\left( I\right) \) be fixed. Then:

as \(n \rightarrow + \infty \) and for every \(x \in I\). Furthermore, if we suppose that:

for \(n\in {\mathbb {N}}^{+}\) sufficiently large, where \(m_{h}\in {\mathbb {R}}\), and that the coefficients \(\alpha _{j},\,j=1,\dots ,r\), satisfy the following linear algebraic system:

then

Proof

We simply observe that, proceeding as in Theorem 8, we can write:

hence, the asymptotic formula (5) follows arguing as for \(I_{2,1}\) and \(I_{2,2}\) in the proof of Theorem 8. Now, to prove the second part of the theorem, it is sufficient to observe that, under the additional assumptions on the truncated algebraic moments, we have:

for \(n\in {\mathbb {N}}^{+}\) sufficiently large, \(x\in I\). Then:

and passing to the limit as \(n\rightarrow +\infty \), the proof of the Voronovskaja formula (8) follows. \(\square \)

The previous theorem shows that the order of pointwise approximation by the NN operators \({\mathcal {K}}_{n}^{r}\left( f,x\right) \) is at least \(O\left( n^{-r}\right) \) as \(n\rightarrow +\infty \) when functions that belong to \(C^{r}\left( I\right) ,\,r \in {\mathbb {N}}\) are approximated. Again, it is quite simple to observe that, under the assumption of Theorem 11 and for \(f\in C^{r}\left( I \right) \), we have:

as \(n\rightarrow +\infty \).

5 Applications to Special Cases

In this section, we discuss the results proved above for some concrete cases of sigmoidal functions.

5.1 Applications with the Logistic Function

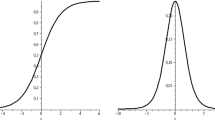

First of all, we consider the case of the Kantorovich NN type operators activated by the well-known logistic function (see, e.g., [20, 40, 41] and Fig. 1):

\[\sigma _{\ell }\left( x\right) :=\left( 1+e^{-x}\right) ^{-1},\quad x\in {\mathbb {R}}.\]

NN operators activated by logistic functions have been widely studied, e.g., in [12, 32].

Obviously, \(\sigma _{\ell }\) is a smooth function and it satisfies all the assumptions \(\left( \Sigma i\right) ,\,i=1,2,3.\) Furthermore, by its exponential decay to zero as \(x\rightarrow -\infty ,\) condition \(\left( \Sigma 3\right) \) is satisfied for every \(\alpha >0\). Hence, it turns out that \(M_{h}\left( \phi _{\sigma _{\ell }}\right) <+\infty \) for every \(h \ge 0\) in view of Lemma 4 (vi). The above function is very useful in the theory of artificial neural networks, since it is used as the activation function in neuronal models when training algorithms are applied, see, e.g., [14, 16, 45, 48, 52].

Now, to apply the results proved in the previous sections, the truncated algebraic moments of the function \(\phi _{\sigma _{\ell }}\left( x\right) \) must be computed. In general, it is possible to compute the truncated algebraic moments of a given function exploiting a well-known result of Fourier analysis, i.e., the so-called Poisson summation formula (see, e.g., [11]). In particular, since \(\left( \Sigma 3\right) \) holds for every \(\alpha >0\), by Lemma 4 (iv), we have that \(\phi _{\sigma _{\ell }} \in L^{1}\left( {\mathbb {R}} \right) \), and then, the usual \(L^{1}\)-Fourier transform can be used to apply the above mentioned Poisson summation formula. In particular, following the procedure given in [28], it turns out that \(\phi _{\sigma _{\ell }}\) satisfies assumption (3) with \(m_{0}=1\), \(m_{1}=0\) and \(\theta _{0},\theta _{1}>1\). Then, from Theorem 9, we can obtain what follows.

Corollary 12

Let \(\sigma _{\ell }\left( x\right) \) be the logistic function, and \(f\in C^{1}\left( I\right) \) be fixed. Then, for every \(x\in I,\) we have:

Moreover, \(\phi _{\sigma _{\ell }}\left( x\right) \) does not satisfy assumption (6) of Theorem 11, which, hence, cannot be used to construct the operators \({\mathcal {K}}_{n}^{r}\).
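The values \(m_{0}=1\) and \(m_{1}=0\) quoted above for the logistic case can be compared with a direct numerical evaluation of the truncated sums. The sketch below does so at a single interior point, assuming the truncated moment has the form \(\sum _{k=-n}^{n-1}\phi (nx-k)(k-nx)^{h}\). It only indicates agreement up to small floating-point and truncation effects and is not a substitute for the Poisson summation argument; the function names are hypothetical.

```python
import math


def logistic(x):
    """Logistic sigmoid, written via tanh to avoid overflow."""
    return 0.5 * (1.0 + math.tanh(0.5 * x))


def density(x):
    """Assumed density: (sigma(x + 1) - sigma(x - 1)) / 2."""
    return 0.5 * (logistic(x + 1.0) - logistic(x - 1.0))


def truncated_moment(h, n, x):
    """Assumed form: sum over k = -n, ..., n-1 of phi(n*x - k) * (k - n*x)**h."""
    u = n * x
    return sum(density(u - k) * (k - u) ** h for k in range(-n, n))


if __name__ == "__main__":
    for n in (20, 40, 60):
        # printed values are expected to be close to 1 and to 0, respectively
        print(n, truncated_moment(0, n, 0.13), truncated_moment(1, n, 0.13))
```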

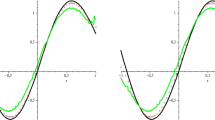

5.2 Applications with Hyperbolic Tangent Sigmoidal Function

In this section, we study the case of the Kantorovich NN type operators activated by the well-known hyperbolic tangent sigmoidal function (see, e.g., [25]):

\[\sigma _{h}\left( x\right) :=\frac{\tanh \left( x\right) +1}{2},\quad x\in {\mathbb {R}}.\]

The graph of \(\sigma _{h}\left( x\right) \) together with its density function \(\phi _{\sigma _{h}}\left( x\right) \) is plotted in Fig. 2.

The NN operators activated by the hyperbolic tangent sigmoidal functions have been widely studied, see, e.g., [22, 23, 33]. Again, we can observe that \(\sigma _{h}\) is a smooth function that satisfies \(\left( \Sigma i\right) ,\,i=1,2,3\), it tends to zero exponentially as \(x\rightarrow -\infty \), and so \(\left( \Sigma 3\right) \) holds for all \(\alpha >0\). Now, computing again the truncated algebraic moments of \(\phi _{\sigma _{h}}\) using the Poisson summation formula (as in the case of logistic function), it turns out that \(m_{0}=1\), \(m_{1}=0\), and \(\theta _{0},\theta _{1}>1\) (see [28] again). Hence, we can obtain the following corollary.

Corollary 13

Let \(\sigma _{h}\left( x\right) \) be the hyperbolic tangent sigmoidal function, and \(f\in C^{1}\left( I\right) \) be fixed. Then, for every \(x\in I,\) we have:

Also in this case, assumption (6) of Theorem 11 is not satisfied.

To provide examples of sigmoidal functions useful to construct high-order NN type operators, we can consider what follows.

5.3 Applications with Sigmoidal Functions Generated by B-splines

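In [22], the well-known central B-splines of order \(d\ge 1\), defined by (see, e.g., [5, 17, 19, 27, 31]):

\[M_{d}\left( x\right) :=\frac{1}{\left( d-1\right) !}\sum _{i=0}^{d}\left( -1\right) ^{i}\binom{d}{i}\left( \frac{d}{2}+x-i\right) _{+}^{d-1},\quad x\in {\mathbb {R}},\]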

have been used to introduce a class of sigmoidal functions. Here, \(\left( x\right) _{+}:=\max \left\{ x,0\right\} \) denotes the “positive part” of \(x\in {\mathbb {R}}\). The sigmoidal function \(\sigma _{M_{d}}\left( x\right) \) associated with the central B-spline \(M_{d}\) is defined by the following formula:

\[\sigma _{M_{d}}\left( x\right) :=\int _{-\infty }^{x}M_{d}\left( t\right) \,dt,\quad x\in {\mathbb {R}}.\]

Consequently, the corresponding density function has the following expression:

By simple computations it is easy to prove that, also for \(\sigma _{M_{d}}\), assumptions \(\left( \Sigma i\right) ,\,i=1,2,3\) are satisfied. In particular, since the central B-splines have compact support, then \(\left( \Sigma 3\right) \) is satisfied by \(\sigma _{M_{d}}\left( x\right) \) for every \(\alpha >0\). Exploiting the relation (10), it is easy to obtain that:

where

with i the imaginary unit, then, for n sufficiently large, we obtain that \(m_{0}^{n}\left( \phi _{\sigma _{M_{d}}},x\right) =1\) and \(m_{1}^{n}\left( \phi _{\sigma _{M_{d}}},x\right) =0\). Hence:

Corollary 14

Let \(\sigma _{M_{d}}\left( x\right) ,\,d\in {\mathbb {N}}^{+},\) be the sigmoidal function generated by the central B-spline \(M_{d}\left( x\right) \), and \(f\in C^{1}\left( I\right) \) be fixed. Then, for every \(x\in I,\) we have:

Remark 15

As shown in Remark 5, the previous results still hold in the case of the well-known (not differentiable) ramp function:

since the corresponding density function satisfies the condition (iii) of Lemma 4.
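The central B-splines and the sigmoidal functions they generate are easy to evaluate. The sketch below uses the standard truncated-power representation \(M_{d}(x)=\frac{1}{(d-1)!}\sum _{i=0}^{d}(-1)^{i}\binom{d}{i}\left( \frac{d}{2}+x-i\right) _{+}^{d-1}\) and assumes that \(\sigma _{M_{d}}(x)=\int _{-\infty }^{x}M_{d}(t)\,dt\), which is then obtained by integrating each truncated power term. The function names are hypothetical, and the convention that \((t)_{+}^{0}\) vanishes for \(t\le 0\) is a choice of this illustration.

```python
from math import comb, factorial


def truncated_power(t, p):
    """(t)_+ ** p, with the convention that it vanishes for t <= 0."""
    return t ** p if t > 0 else 0.0


def central_bspline(d, x):
    """Central B-spline M_d(x) from the truncated-power representation."""
    s = sum((-1) ** i * comb(d, i) * truncated_power(d / 2 + x - i, d - 1)
            for i in range(d + 1))
    return s / factorial(d - 1)


def bspline_sigmoid(d, x):
    """Assumed sigma_{M_d}(x): integral of M_d over (-inf, x], term by term."""
    s = sum((-1) ** i * comb(d, i) * truncated_power(d / 2 + x - i, d)
            for i in range(d + 1))
    return s / factorial(d)


if __name__ == "__main__":
    print(central_bspline(2, 0.5), central_bspline(3, 0.0))
    print(sum(central_bspline(3, 0.3 - k) for k in range(-5, 6)))
    print([bspline_sigmoid(1, t) for t in (-1.0, -0.25, 0.0, 0.25, 1.0)])
```

For \(d=1\) the resulting sigmoidal function is a piecewise linear ramp with unit slope on \([-1/2,1/2]\).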

Note that (see [18, 26]) the sigmoidal function \(\sigma _{M_1}(3\, \cdot )\) coincides with the ramp function \(\sigma _R\). Then, if we recall the definition of the well-known ReLu activation function (see, e.g., [36]):

\[\psi _{ReLu}\left( x\right) :=\left( x\right) _{+},\quad x\in {\mathbb {R}},\]

it turns out that:

then the corresponding density function can be expressed in terms of the ReLu activation function:

As a consequence of the above relation, the NN operators \(K_n^{\sigma _{M_1}(3\, \cdot )}\) can be regarded as NN operators activated by the above linear combination of ReLu activation functions. For more details concerning the usefulness of \(\psi _{ReLu}\), see, e.g., [2, 3].
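The mechanism behind this remark can be sketched with the unit-slope ramp, written as a difference of two shifted ReLu functions. The scaling of the ramp used in the paper is not reproduced here, so the specific ramp below (equal to 0 for \(x\le -1/2\) and to 1 for \(x\ge 1/2\)) is an assumption of this illustration; the corresponding density \(\frac{1}{2}[\sigma (x+1)-\sigma (x-1)]\) then becomes a combination of four ReLu terms.

```python
def relu(x):
    """ReLu activation: the positive part of x."""
    return max(x, 0.0)


def ramp(x):
    """Unit-slope ramp as a difference of two ReLu terms (assumed scaling)."""
    return relu(x + 0.5) - relu(x - 0.5)


def ramp_density(x):
    """(ramp(x + 1) - ramp(x - 1)) / 2 expanded into four ReLu terms."""
    return 0.5 * (relu(x + 1.5) - relu(x + 0.5) - relu(x - 0.5) + relu(x - 1.5))


if __name__ == "__main__":
    print([ramp(t) for t in (-1.0, -0.25, 0.0, 0.25, 1.0)])
    print([ramp_density(t) for t in (0.0, 0.5, 1.0, 1.5, 2.0)])
    # integer shifts of the density add up to 1
    print(sum(ramp_density(0.3 - k) for k in range(-4, 5)))
```

The density is an even, compactly supported trapezoid, which is consistent with condition (iii) of Lemma 4.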

As shown above, the truncated algebraic moments of \(\phi _{\sigma _{M_{d}}}\) are exactly equal to suitable constants. Then, the sigmoidal functions \(\sigma _{M_{d}}\) can be used to generate the high-order convergence operators \({\mathcal {K}}_{n}^{r}\). Here, we consider the linear combinations with coefficients \(\alpha _{j}^{\left( 1\right) },\,j=1,2\), and \(\alpha _{j}^{\left( 2\right) },\,j=1,2,3\), which satisfy respectively:

and

In this way, we obtain the following operators:

Thus, we obtain:

Corollary 16

Let \(\sigma _{M_{d}}\left( x\right) ,\,d\in {\mathbb {N}}^{+},\) be the sigmoidal function generated by the central B-spline \(M_{d}\left( x\right) \), and let \(x \in I\) be fixed.

For any \(f\in C^{2}\left( I\right) \), there holds:

while for any \(f\in C^{3}\left( I\right) \), we have:

Clearly, an analogue of Corollary 16 can also be formulated for finite linear combinations of the Kantorovich NN type operators \(K_{n}\) with \(r>3\), to achieve faster convergence.
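The gain obtained by combining operators can be illustrated numerically. The sketch below uses the density generated by the unit-slope ramp and compares the error of \(K_{n}\) with that of the combination \(-K_{n}+2K_{2n}\) for \(f=\exp \). The combination \(\alpha _{1}K_{n}+\alpha _{2}K_{2n}\) and the coefficients \(\alpha _{1}=-1\), \(\alpha _{2}=2\), which solve \(\alpha _{1}+\alpha _{2}=1\) and \(\alpha _{1}+\alpha _{2}/2=0\), are assumptions of this illustration standing in for the displayed systems; the form of \(K_{n}\) and the function names are likewise assumed.

```python
import math


def relu(x):
    """ReLu activation: the positive part of x."""
    return max(x, 0.0)


def density(x):
    """Density generated by the unit-slope ramp relu(x + 1/2) - relu(x - 1/2)."""
    return 0.5 * (relu(x + 1.5) - relu(x + 0.5) - relu(x - 0.5) + relu(x - 1.5))


def kantorovich_exp(n, x):
    """K_n(exp, x); the mean of exp on [k/n, (k+1)/n] is n*exp(k/n)*(exp(1/n) - 1)."""
    num = den = 0.0
    for k in range(-n, n):
        w = density(n * x - k)
        if w > 0.0:
            num += w * n * math.exp(k / n) * math.expm1(1.0 / n)
            den += w
    return num / den


def combined_exp(n, x):
    """Illustrative combination -K_n + 2*K_{2n} (assumed coefficients)."""
    return -kantorovich_exp(n, x) + 2.0 * kantorovich_exp(2 * n, x)


if __name__ == "__main__":
    x = 0.3
    for n in (25, 50, 100):
        e1 = abs(kantorovich_exp(n, x) - math.exp(x))
        e2 = abs(combined_exp(n, x) - math.exp(x))
        print(n, e1, e2)
```

In this experiment the error of the single operator roughly halves when n doubles, while the error of the combination decays noticeably faster, in line with the higher order discussed above.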

Notes

Note that the boundedness of h derives from the boundedness of f and its derivatives.

References

Adell, J.A., Cardenas-Morales, D.: Quantitative generalized Voronovskaja’s formulae for Bernstein polynomials. J. Approx. Theory 231, 41–52 (2018)

Agarap, A.F.: Deep Learning using Rectified Linear Units (ReLU). arXiv:1803.08375 (2018)

Agostinelli, F., Hoffman, M., Sadowski, P., Baldi, P.: Learning Activation Functions to Improve Deep Neural Networks. arXiv:1412.6830v3 (2015)

Aral, A., Acar, T., Rasa, I.: The new forms of Voronovskaya’s theorem in weighted spaces. Positivity 20(1), 25–40 (2016)

Asdrubali, F., Baldinelli, G., Bianchi, F., Costarelli, D., Rotili, A., Seracini, M., Vinti, G.: Detection of thermal bridges from thermographic images by means of image processing approximation algorithms. Appl. Math. Comput. 317, 160–171 (2018)

Bardaro, C., Mantellini, I.: Voronovskaja formulae for Kantorovich generalized sampling series. Int. J. Pure Appl. Math. 62(3), 247–262 (2010)

Bardaro, C., Mantellini, I.: Approximation properties for linear combinations of moment type operators. Comput. Math. Appl. 62(5), 2304–2313 (2011)

Bardaro, C., Mantellini, I.: Asymptotic formulae for linear combinations of generalized sampling operators. Z. Anal. Ihre Anwend. 32(3), 279–298 (2013)

Barron, A.R., Klusowski, J.M.: Uniform approximation by neural networks activated by first and second order ridge splines. arXiv preprint arXiv:1607.07819 (2016)

Boccuto, A., Bukhvalov, A.V., Sambucini, A.R.: Some inequalities in classical spaces with mixed norms. Positivity 6, 393–411 (2002)

Butzer, P.L., Nessel, R.J.: Fourier Analysis and Approximation I. Academic Press, New York (1971)

Cao, F., Chen, Z.: The approximation operators with sigmoidal functions. Comput. Math. Appl. 58(4), 758–765 (2009)

Cao, F., Chen, Z.: Scattered data approximation by neural networks operators. Neurocomputing 190, 237–242 (2016)

Cao, F., Liu, B., Park, D.S.: Image classification based on effective extreme learning machine. Neurocomputing 102, 90–97 (2013)

Cardaliaguet, P., Euvrard, G.: Approximation of a function and its derivative with a neural network. Neural Netw. 5(2), 207–220 (1992)

Chui, C. K., Mhaskar, H. N.: Deep nets for local manifold learning. arXiv preprint arXiv:1607.07110 (2016)

Coroianu, L., Gal, S.G.: Approximation by truncated max-product operators of Kantorovich-type based on generalized \((\varphi,\psi )\)-kernels. Math. Methods Appl. Sci. 41(17), 7971–7984 (2018)

Costarelli, D.: Approximate solutions of Volterra integral equations by an interpolation method based on ramp functions. Comput. Appl. Math. 38(4) (article 159) (2019)

Costarelli, D., Minotti, A.M., Vinti, G.: Approximation of discontinuous signals by sampling Kantorovich series. J. Math. Anal. Appl. 450(2), 1083–1103 (2017)

Costarelli, D., Sambucini, A.R., Vinti, G.: Convergence in Orlicz spaces by means of the multivariate max-product neural network operators of the Kantorovich type and applications. Neural Comput. Appl. 31, 5069–5078 (2019)

Costarelli, D., Spigler, R.: Convergence of a family of neural network operators of the Kantorovich type. J. Approx. Theory 185, 80–90 (2014)

Costarelli, D., Spigler, R.: Approximation by series of sigmoidal functions with applications to neural networks. Ann. Mat. Pura Appl. 194(1), 289–306 (2015)

Costarelli, D., Vinti, G.: Pointwise and uniform approximation by multivariate neural network operators of the max-product type. Neural Netw. 81, 81–90 (2016)

Costarelli, D., Vinti, G.: Convergence for a family of neural network operators in Orlicz spaces. Math. Nachr. 290(2–3), 226–235 (2017)

Costarelli, D., Vinti, G.: Convergence results for a family of Kantorovich max-product neural network operators in a multivariate setting. Math. Slov. 67(6), 1469–1480 (2017)

Costarelli, D., Vinti, G.: Saturation classes for max-product neural network operators activated by sigmoidal functions. Results Math. 72(3), 1555–1569 (2017)

Costarelli, D., Vinti, G.: Inverse results of approximation and the saturation order for the sampling Kantorovich series. J. Approx. Theory 242, 64–82 (2019)

Costarelli, D., Vinti, G.: Voronovskaja formulas for high order convergence neural network operator with sigmoidal functions. Mediterr. J. Math. 17 (article numb. 77), https://doi.org/10.1007/s00009-020-01513-7 (2020)

Cucker, F., Zhou, D.X.: Learning Theory: An Approximation Theory Viewpoint. Cambridge University Press, Cambridge (2007)

Cybenko, G.: Approximation by superpositions of a sigmoidal function. Math. Control Signals Syst. 2, 303–314 (1989)

Eckle, K., Schmidt-Hieber, J.: A comparison of deep networks with ReLU activation function and linear spline-type methods. Neural Netw. 110, 232–242 (2019)

Fard, S.P., Zainuddin, Z.: The universal approximation capabilities of cylindrical approximate identity neural networks. Arab. J. Sci. Eng. 1–8 (2016)

Fard, S.P., Zainuddin, Z.: Theoretical analyses of the universal approximation capability of a class of higher order neural networks based on approximate identity. In: Nature-Inspired Computing: Concepts, Methodologies, Tools, and Applications (in print). https://doi.org/10.4018/978-1-5225-0788-8.ch055 (2016)

Finta, Z.: On generalized Voronovskaja theorem for Bernstein polynomials. Carpathian J. Math. 28(2), 231–238 (2012)

Gavrea, I., Ivan, M.: The Bernstein Voronovskaja-type theorem for positive linear approximation operators. J. Approx. Theory 192, 291–296 (2015)

Goebbels, S.: On sharpness of error bounds for single hidden layer feedforward neural networks. arXiv:1811.05199 (2018)

Gripenberg, G.: Approximation by neural network with a bounded number of nodes at each level. J. Approx. Theory 122(2), 260–266 (2003)

Guliyev, N.J., Ismailov, V.E.: On the approximation by single hidden layer feedforward neural networks with fixed weights. Neural Netw. 98, 296–304 (2018)

Guliyev, N.J., Ismailov, V.E.: Approximation capability of two hidden layer feedforward neural networks with fixed weights. Neurocomputing 316, 262–269 (2018)

Iliev, A., Kyurkchiev, N.: On the Hausdorff distance between the Heaviside function and some transmuted activation functions. Math. Model. Appl. 2(1), 1–5 (2016)

Iliev, A., Kyurkchiev, N., Markov, S.: On the approximation of the cut and step functions by logistic and Gompertz functions. BIOMATH 4(2), 1510101 (2015)

Ismailov, V.E.: On the approximation by neural networks with bounded number of neurons in hidden layers. J. Math. Anal. Appl. 417(2), 963–969 (2014)

Kainen, P.C., Kurkovà, V.: An integral upper bound for neural network approximation. Neural Comput. 21, 2970–2989 (2009)

Kurkovà, V.: Lower bounds on complexity of shallow perceptron networks. Eng. Appl. Neural Netw. Commun. Comput. Inform. Sci. 629, 283–294 (2016)

Lin, S., Zeng, J., Zhang, X.: Constructive neural network learning. arXiv preprint arXiv:1605.00079 (2016)

Maiorov, V., Meir, R.: On the near optimality of the stochastic approximation of smooth functions by neural networks. Adv. Comput. Math. 13(1), 79–103 (2000)

Makovoz, Y.: Uniform approximation by neural networks. J. Approx. Theory 95(2), 215–228 (1998)

Mhaskar, H., Poggio, T.: Deep vs. shallow networks: an approximation theory perspective. Anal. Appl. 14 (6), 829–848 (2016)

Moritani, Y., Ogihara, N.: A hypothetical neural network model for generation of human precision grip. Neural Netw. 110, 213–224 (2019)

Nasaireh, F., Rasa, I.: Another look at Voronovskaja type formulas. J. Math. Inequal. 12(1), 95–105 (2018)

Petersen, P., Voigtlaender, F.: Optimal approximation of piecewise smooth functions using deep ReLU neural networks. Neural Netw. 108, 296–330 (2018)

Schmidhuber, J.: Deep learning in neural networks: an overview. Neural Netw. 61, 85–117 (2015)

Smale, S., Zhou, D.X.: Learning theory estimates via integral operators and their approximations. Construct. Approx. 26(2), 153–172 (2007)

Ulusoy, G., Acar, T.: q-Voronovskaya type theorems for q-Baskakov operators. Math. Methods Appl. Sci. (2015). https://doi.org/10.1002/mma.3784

Vinti, G., Zampogni, L.: A unifying approach to convergence of linear sampling type operators in Orlicz spaces. Adv. Differ. Equ. 16(5–6), 573–600 (2011)

Zhang, Y., Wu, J., Cai, Z., Du, B., Yu, P.S.: An unsupervised parameter learning model for RVFL neural network. Neural Netw. 112, 85–97 (2019)

Acknowledgements

The authors are members of the Gruppo Nazionale per l’Analisi Matematica, la Probabilità e le loro Applicazioni (GNAMPA) of the Istituto Nazionale di Alta Matematica (INdAM), and of the network RITA (Research ITalian network on Approximation). The second author has been partially supported within the 2019 GNAMPA-INdAM Project “Metodi di analisi reale per l’approssimazione attraverso operatori discreti e applicazioni”, while the third author within the projects: (1) Ricerca di Base 2017 dell’Università degli Studi di Perugia-“Metodi di teoria degli operatori e di Analisi Reale per problemi di approssimazione ed applicazioni”, (2) Ricerca di Base 2018 dell’Università degli Studi di Perugia-”Metodi di Teoria dell’Approssimazione, Analisi Reale, Analisi Nonlineare e loro Applicazioni”, and (3) “Metodi e processi innovativi per lo sviluppo di una banca di immagini mediche per fini diagnostici” funded by the Fondazione Cassa di Risparmio di Perugia, 2018.

Funding

Open access funding provided by Università degli Studi di Perugia within the CRUI-CARE Agreement.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cantarini, M., Costarelli, D. & Vinti, G. Asymptotic Expansion for Neural Network Operators of the Kantorovich Type and High Order of Approximation. Mediterr. J. Math. 18, 66 (2021). https://doi.org/10.1007/s00009-021-01717-5

DOI: https://doi.org/10.1007/s00009-021-01717-5

Keywords

- Sigmoidal functions

- neural networks operators

- asymptotic formula

- Voronovskaja formula

- high-order convergence

- truncated algebraic moment