Abstract

We prove that each generalized (in the sense of Miculescu and Mihail) IFS consisting of contractive maps generates the unique generalized Hutchinson measure. This result extends the earlier result due to Miculescu in which the assertion is proved under certain additional contractive assumptions.

Similar content being viewed by others

1 Introduction

In the last decade, various aspects of the theory of classical iterated iterated function systems (IFSs) has been extended to the framework of generalized IFSs (GIFSs for short), which were introduced in 2008 by Miculescu and Mihail (see [13, 15, 16]). Instead of selfmaps of a given metric space X, GIFSs consist of maps defined on the finite Cartesian product \(X^m\) with values in X. It turned out that many classical results for IFSs have natural counterparts in the GIFSs setting (see, e.g., [17, 23, 24]). In particular, GIFSs consisting of contractive maps generate unique compact sets which can be called as their attractors. On the other hand, the class of GIFSs’ attractors is essentially wider than the class of IFSs’ attractors (see [10, 22]).

One of a very important parts of the IFS theory bases on the existence of the so-called Hutchinson measure, which is the unique measure invariant w.r.t. the so-called Markov operator generated by the underlying IFS (see, e.g., [5]).

Miculescu and Mihail in [12, 14] proved that a GIFS \(\mathcal {F}\) generates a counterpart of the Hutchinson measure, provided that the underlying maps from \(\mathcal {F}\) satisfy some additional contractive assumptions (a bit different approach to the generalized IFSs and their Hutchinson measures were considered in [19]). Using a completely different approach, in the main result of our paper we will prove the same assertion but without this additional requirements. We also give an example which shows that the original proof from [14] cannot work in the general case. Presented reasonings are inspired by the ones from [8] and they use the machinery of the code spaces for GIFSs from [24].

2 Preliminaries

2.1 Generalized IFSs and a counterpart of the Hutchinson–Barnsley theorem

Assume that (X, d) is a metric space and \(m\in \mathbb {N}\). Consider the Cartesian product \(X^m\) as a metric space with the maximum metric \(d_m\). By \(\mathbb {K}(X)\) we will denote the hyperspace of all nonempty and compact subsets of X, endowed with the Hausdorff–Pompeiu metric h.

Definition 2.1

By a generalized iterated function system of order m (GIFS for short) we will mean any finite family \(\mathcal {F}\) of continuous maps defined on \(X^m\) and with values in X.

Each GIFS \(\mathcal {F}=\{f_1,\ldots ,f_n\}\) generates the map \(\mathcal {F}:\mathbb {K}(X)^m\rightarrow \mathbb {K}(X)\) which adjust to every m-tuple \((K_1,\ldots ,K_m)\in \mathbb {K}(X)^m\), the value

The map \(\mathcal {F}\) will be called the generalized Hutchinson operator for \(\mathcal {F}\) (using the same symbol as for a GIFS itself does not lead to confusions).

Definition 2.2

A map \(f:X^m\rightarrow X\) will be called:

-

(i)

a generalized Banach contraction, if its Lipschitz constant \({\text {Lip}}(f)<1\).

-

(ii)

a generalized Matkowski contraction, if there is a nondecreasing function \(\varphi :[0,\infty )\rightarrow [0,\infty )\) such that the sequence of iterations \(\varphi ^{(n)}(t)\rightarrow 0\) for every \(t\ge 0\), and additionally

$$\begin{aligned} \forall _{x,y\in X^m}\;d(f(x),f(y))\le \varphi (d_m(x,y)) \end{aligned}$$

Clearly, each generalized Banach contraction is Matkowski, but the converse is not true. If \(m=1\) then we arrive to the classical notions of Banach contraction and Matkowski contraction. As was proved by Matkowski [11], each Matkowski contraction satisfies the thesis of the Banach fixed point theorem (and this is one of the strongest generalization of the Banach theorem; for example, it implies the ones due to Browder, Rakotch or Edelstein—see [6] for a deep discussion on various contractive conditions). This assertion can be extended to the considered “generalized” case (see [4, Theorem 2.1] and also [13, 16, 23] for more restrictive cases).

Proposition 2.3

[4, Theorem 2.1] Assume that \(f:X^m\rightarrow X\) is a generalized Matkowski contraction and the space X is complete. Then there exists the unique point \(x_*\in X\) such that

Moreover, for every \(x_1,\ldots ,x_m\in X\), the sequence \((x_k)\) defined inductively by

converges to \(x_*\).

The following result is a counterpart of the classical Hutchinson result (see, e.g., [2] and [5]) on generating attractors by IFSs (see [24, Theorem 1.4 and Remark 1.5], [4, Theorem 2.16] and also [13, 16, 23] for more restrictive cases). It follows from Proposition 2.3.

Theorem 2.4

Assume that \(\mathcal {F}=\{f_1,\ldots ,f_n\}\) is a GIFS on a complete space X consisting of generalized Matkowski contractions. Then there is the unique \(A_\mathcal {F}\in \mathbb {K}(X)\) such that

Moreover, for every sets \(K_1,\ldots ,K_m\in \mathbb {K}(X)\), the sequence \((K_k)\) defined by \(K_{k+m}:=\mathcal {F}(K_k,\ldots ,K_{k+m-1})\), \(k\in \mathbb {N}\), converges to \(A_\mathcal {F}\) w.r.t. the Hausdorff–Pompeiu metric.

Definition 2.5

The set \(A_\mathcal {F}\) from the thesis of the above result will be called the attractor of \(\mathcal {F}\).

As was remarked, the class of GIFSs’ attractors is essentially wider than the class of IFSs’ attractors. In fact, there are sets (even subsets of the real line) which are attractors of GIFSs of order 2 consisting of generalized Banach contractions, and which are not homeomorphic to the attractor of IFS consisting of Matkowski contractions—see [9].

2.2 Code space for GIFSs and the projection map

Here we recall the notion of the code space from [24] (see [17] for alternative, yet earlier, version) and the result which shows that the relationships between a GIFS and its code space are similar as in the classical IFS case. Later we will use this machinery in the proof of our main result.

Fix natural numbers n, m, and define sets \(\Omega _k\), \(k\in \mathbb {N}\), in the following inductive way:

Then for every \(k\in \mathbb {N}\), define

and, finally,

For every \(\alpha =(\alpha _k),\;\beta =(\beta _k)\in \Omega \), set

where \(d_k\) is the discrete metric on \(\Omega _k\). It turns out that d is a metric on \(\Omega \) and \((\Omega ,d)\) is compact.

As the construction of \(\Omega \) depends strongly on n and m, we should add these values as indexes. For simplicity of notations, we will not do it—this will not lead to any confusion.

Definition 2.6

The space \((\Omega ,d)\) is called the code space. If \(\mathcal {F}\) is a GIFS of order m which consists of n maps, then \((\Omega ,d)\) is called the code space for \(\mathcal {F}\).

Now for \(k\ge 2\), \(\alpha =(\alpha _1,(\alpha _2^1,\ldots ,\alpha _2^m),\ldots ,(\alpha _k^1,\ldots ,\alpha _k^m))\in {}_k\Omega \) and \(i=1,\ldots ,m\), define

Clearly, \(\alpha (i)\in {}_{k-1}\Omega \).

In a similar way we define \(\alpha (i)\) for every \(\alpha \in \Omega \) and \(i=1,\ldots ,m\).

Now for every \(i=1,\ldots ,n\), we define \(\tau _i:\Omega ^m\rightarrow \Omega \) by setting to each \(\alpha ^1=(\alpha ^1_1, \alpha ^1_2,\ldots ),\ldots ,\alpha ^m=(\alpha ^m_1,\alpha ^m_2,\ldots )\in \Omega \), the sequence

The next result gathers basic properties of \(\tau _i\), \(i=1,\ldots ,n\) (see [24, Proposition 2.4]):

Lemma 2.7

In the above framework:

-

(i)

for every \(i=1,\ldots ,n\), \(\alpha ^1,\ldots ,\alpha ^m\in \Omega \) and \(j=1,\ldots ,n\), it holds \(\tau _i(\alpha ^1,\ldots ,\alpha ^m)(j)=\alpha ^j\);

-

(ii)

for every \(i=1,\ldots ,n\), \({\text {Lip}}(\tau _i)\le \frac{m}{m+1}\);

-

(iii)

\(\Omega =\tau _1(\Omega ^m)\cup \cdots \cup \tau _n(\Omega ^m)\), e.g., \(\Omega \) is the attractor of \(\mathcal {T}:=\{\tau _1,\ldots ,\tau _m\}\).

Definition 2.8

The family \(\mathcal {T}=\{\tau _1,\ldots ,\tau _n\}\) is called the canonical GIFS on \(\Omega \).

Now if X is a metric space, then we define metric spaces \(X_k\), \(k\in \mathbb {N}\), according to the inductive formula:

where, at each step, we consider Cartesian product as a metric space with the maximum metric. Clearly, each \(X_k\) is isometric with \(X^{m^k}\).

In a similar way, for a set \(D\subset X\), we define the sequence \(D_k,\;k\in \mathbb {N}\), of subsets of \(X_k\), \(k\in \mathbb {N}\).

Now assume that \(\mathcal {F}=\{f_1,\ldots ,f_n\}\) is a GIFS on a space X of order m. By induction, we will define the families \(\mathcal {F}_k=\{f_\alpha :X_k\rightarrow X:\alpha \in {}_k\Omega \}\), \(k\in \mathbb {N}\).

The family \(\mathcal {F}_1\) is simply the GIFS \(\mathcal {F}\) itself. Now assume that we defined \(\mathcal {F}_k\). For every \(\alpha =(\alpha _1,\ldots ,\alpha _{k+1})\in {}_{k+1}\Omega \) and \(x=(x_1,\ldots ,x_m)\in X_{k+1}\), define

and set \(\mathcal {F}_{k+1}:=\{f_\alpha :\alpha \in {}_k\Omega \}\).

The following result shows the promised relationships between a GIFS and its code space (see [4, Lemma 4.9] [24, Lemma 3.4, Theorem 3.7, Theorem 3.8 and Theorem 3.11] and [4]). We just mention here the important, from the perspective of future reasonings, ones.

Here and later on, if \(\alpha =(\alpha _i)\) is any sequence and \(k\in \mathbb {N}\), then by \(\alpha _{\vert _k}\) we denote the restriction of \(\alpha \) to the first k elements, that is, \(\alpha _{\vert _k}:=(\alpha _1,\ldots ,\alpha _k)\).

Theorem 2.9

Assume that \(\mathcal {F}\) is GIFS on a complete metric space X consisting of generalized Matkowski contractions. Then the following conditions hold:

-

(i)

for every compact set \(C\subset X\), there is a closed and bounded set \(D\subset X\) so that \(C\subset D\) and \(\mathcal {F}(D,\ldots ,D)\subset D\);

-

(ii)

for every closed and bounded set \(D\subset X\) so that \(\mathcal {F}(D,\ldots ,D)\subset D\), and every \(\alpha \in \Omega \), the sequence \((f_{\alpha _{\vert k}}(D_k))\) satisfies the following conditions:

-

(iia)

\((f_{\alpha _{\vert k}}(D_k))\) is a decreasing sequence of sets with diameter tending to 0;

-

(iib)

\(\bigcap _{k\in \mathbb {N}}f_{\alpha _{\vert k}}(D_k)\) is a singleton and its unique element does not depend on D; denote it by \(\pi (\alpha )\);

-

(iia)

-

(iii)

the mapping \(\pi :\Omega _\mathcal {F}\mapsto X\) has the following properties:

-

(iiia)

\(\pi \) is continuous;

-

(iiib)

\(\pi (\Omega )=A_\mathcal {F}\);

-

(iiic)

for every \(i=1,\ldots ,n\), \(f_i\circ \pi _1=\pi \circ \tau _i\), where \(\pi _1:\Omega ^m\mapsto X_1\) is defined by \(\pi _1(\alpha ^1,\ldots ,\alpha ^m):=(\pi (\alpha ^1),\ldots ,\pi (\alpha ^m))\).

-

(iiia)

2.3 Space of probability measures, Monge–Kantorovich metric and push-forward measures

Let (X, d) be a metric space. By \(\mathcal {P}(X)\) let us denote the space of all Borel probability measures \(\mu \) on X with compact support, that is, for which the following set is compact:

Note that the equivalent definition of the support is: \({\text {supp}}(\mu )=\bigcap \{H\subset X:H \text{ is } \text{ closed } \text{ and } \mu (H)=1\}\).

For \(\mu ,\nu \in \mathcal {P}(X)\), define

It turns out that \(d_{MK}\) is metric (called the Monge–Kantorovich or Hutchinson metric), which generates the topology of weak convergence (see [3, 7] or [8] for details; note that we restrict our discussion to relatively restricted case of measures with compact support).

We will also use the notion of push-forward measure. Additionally, let Y be a metric space and \(w:X\rightarrow Y\) be continuous. For \(\mu \in \mathcal {P}(X)\), the push-forward measure through w is the measure \(\mu \circ w^{-1}\) on Y by adjusting to each Borel set \(A\subset Y\), the value

The following lemma lists basic properties of push-forward measure. The proof can be found in [3] or [8]:

Lemma 2.10

In the above frame, for every measures \(\mu ,\mu _1,\ldots ,\mu _n\in \mathcal {P}(X)\) and \(p_1,\ldots ,p_n>0\) with \(p_1+\cdots +p_n=1\), we have

-

(i)

\(\mu \circ w^{-1}\in \mathcal {P}(Y)\);

-

(ii)

\({\text {supp}}(\mu \circ w^{-1})=w({\text {supp}}(\mu ))\);

-

(iii)

for a continuous map \(g:Y\rightarrow \mathbb {R}\), it holds \(\int _Yg\;d(\mu \circ w^{-1})=\int _Xg\circ w\;d\mu \);

-

(iv)

\((p_1\cdot \mu _1+\cdots +p_n\mu _n)\circ w^{-1}=p_1\cdot (\mu _1\circ w^{-1})+\cdots +p_n\cdot (\mu _n\circ w^{-1})\).

Assume additionally that \(X_1,X_2,X_3,\ldots \) are metric spaces, \(\mu _i\in \mathcal {P}(X_i)\) for \(i=1,2,\ldots \) and let \(m\in \mathbb {N}\). Then by \(\mu _1\times \cdots \times \mu _m\) and \(\mu _1\times \mu _2\times \ldots \) we denote the product measures of \(\mu _1,\ldots ,\mu _m\) on \(X_1\times \cdots \times X_m\), and of \(\mu _1\), \(\mu _2\),..., on \(X_1\times X_2\times \ldots \), respectively. They are unique measures in \(\mathcal {P}(X_1\times \cdots \times X_m)\) and \(\mathcal {P}(X_1\times X_2\times \ldots )\), respectively, so that for any Borel sets \(A_i\subset X_i\), \(i=1,\ldots ,m\), it holds

and for every \(k\in \mathbb {N}\) and any Borel sets \(A_1\subset X_1\),..., \(A_k\subset X_k\), we have

We will also use the important Fubini theorem (we state here a version that we will use later): if \(f:X_1\times \cdots \times X_m\rightarrow \mathbb {R}\) is \(\mu _1\times \cdots \times \mu _m\)-integrable (in particular, if f is continuous—recall that our measures have compact supports), then for every enumeration \(k_1,\ldots ,k_l,k_{l+1},\ldots ,k_m\) of the set \(\{1,\ldots ,m\}\), it holds

2.4 Hutchinson measure for GIFSs

Definition 2.11

By a probabilistic GIFS (pGIFS for short) we will mean a pair \((\mathcal {F},\vec {p})\), where \(\mathcal {F}=\{f_1,\ldots ,f_n\}\) is a GIFS and \(\vec {p}=(p_1,\ldots ,p_n)\) is a probability vector, which means that \(p_1,\ldots ,p_n>0\) and \(p_1+\cdots +p_n=1\).

If \((\mathcal {F},\vec {p})\) is a probabilistic GIFS, then for every \(\mu _1,\ldots ,\mu _m\in \mathcal {P}(X)\), define

The map \(M_{(\mathcal {F},\vec {p})}:\mathcal {P}(X)\times \cdots \times \mathcal {P}(X)\rightarrow \mathcal {P}(X)\) will be called the generalized Markov operator for \((\mathcal {F},\vec {p})\).

By Lemma 2.10 and basic properties of integrals, we see that for every continuous \(g:X\rightarrow \mathbb {R}\), it holds

Miculescu and Mihail in [14] proved that a certain class of probabilistic GIFSs admit counterparts of the so-called Hutchinson measures, that is, measures invariant w.r.t. the generalized Markov operators. By \({\text {Lip}}_{a,b}(X^2,X)\) let us denote the family of maps \(f:X^2\rightarrow X\) so that

Clearly, for \(f\in {\text {Lip}}_{a,b}(X^2,X)\), we have \({\text {Lip}}(f)\le a+b\).

Theorem 2.12

[14, Theorem 3.6] Fix \(a,b\ge 0\) so that \(a+b<1\) and assume that \((\mathcal {F},\vec {p})\) is a probabilistic GIFS of order 2 on a complete space X such that each \(f\in \mathcal {F}\) belongs to \({\text {Lip}}_{a,b}(X^2,X)\).

-

(i)

There is the unique measure \(\mu _{(\mathcal {F},\vec {p})}\in \mathcal {P}(X)\) such that

$$\begin{aligned} M_{(\mathcal {F},\vec {p})}(\mu _{(\mathcal {F},\vec {p})},\mu _{(\mathcal {F},\vec {p})})=\mu _{(\mathcal {F},\vec {p})}. \end{aligned}$$ -

(ii)

For every measures \(\mu _1,\mu _2\in \mathcal {P}(X)\), the sequence \((\mu _k)\) defined by \(\mu _{k+2}:=M_{(\mathcal {F},\vec {p})}(\mu _k,\mu _{k+1})\) for \(k\ge 1\), converges to \(\mu _{(\mathcal {F},\vec {p})}\) w.r.t. the Monge–Kantorovich metric.

-

(iii)

\({\text {supp}}(\mu _{(\mathcal {F},\vec {p})})=A_\mathcal {F}\), where \(A_\mathcal {F}\) is the attractor of \(\mathcal {F}\).

Definition 2.13

The unique measure \(\mu _{(\mathcal {F},\vec {p})}\) for a probabilistic GIFS \((\mathcal {F},\vec {p})\) which satisfies the thesis of the above theorem will be called a generalized Hutchinson measure for \((\mathcal {F},\vec {p})\).

In the proof of the above theorem there is shown that the generalized Markov operator \(M_{(\mathcal {F},\vec {p})}\in {\text {Lip}}_{a,b}(\mathcal {P}(X)^2,\mathcal {P}(X))\) provided that the elements of \(\mathcal {F}\) belong to \({\text {Lip}}_{a,b}(X^2,X)\). The proof can be extended to arbitrary m. On the other hand, as the next example shows, there are GIFSs consisting of contractive maps whose generalized Markov operator is not contractive.

Example 2.14

Let \(f,g:[0,1]^2\rightarrow [0,1]\) be given by

It is easy to see that \({\text {Lip}}(f)={\text {Lip}}(g)=\frac{2}{3}\) (see [21]). Now if \(a,b\ge 0\) are such that

then we have

and similarly \(b\ge \frac{2}{3}\). Thus \(a+b\ge \frac{4}{3}\). Now we will show that \(M_{(\mathcal {F},\vec {p})}\) is not a generalized Matkowski contraction, where \(\mathcal {F}=\{f,g\}\) and \(\vec {p}=(p_1,p_2)\) is any probability vector.

Define \(\mu _1=\mu _2=\delta _{0}\), \(\nu _1=\delta _{\frac{1}{2}}\), \(\nu _2=\frac{1}{2}\delta _{0}+\frac{1}{2}\delta _1\) (where \(\delta _x\) is the Dirac measure supported on x). At first we observe that \(d_{MK}(\mu _1,\nu _1)=d_{MK}(\mu _2,\nu _2)=\frac{1}{2}\).

Choose any nonexpansive map \(h:[0,1]\rightarrow \mathbb {R}\). Then

On the other hand, if \(h(x)=x\) for \(x\in [0,1]\), then in the above computations all inequalities became equalities. All in all, we get \(d_{MK}(\mu _1,\nu _1)=d_{MK}(\mu _2,\nu _2)=\frac{1}{2}\).

Now we observe that \(M_{(\mathcal {F},\vec {p})}(\mu _1,\mu _2)=p_1\delta _{0}+p_2\delta _{\frac{1}{3}}\). Indeed, we have:

Similarly,

Finally, setting \(h(x)=x\) for \(x\in [0,1]\), we have

All in all, \(M_{(\mathcal {F},\vec {p})}\) is not a generalized Matkowski contraction.

Nevertheless, using completely different approach, in the main result of the paper we will prove that the assertion of Theorem 2.12 holds for all probabilistic GIFSs consisting of generalized Matkowski contractions.

3 Further notations and definitions

Our first aim is to get a full description of iterations of generalized Markov operator. We will need more delicate constructions than those of \({}_k\Omega \), \(\Omega _k\), \(\mathcal {F}_k\), \(X_k\) etc.

At first, set \(m\in \mathbb {N}\) and define \(\Gamma _k\), \({}_k\Gamma \), \(k\in \mathbb {N}\), and \(\Gamma \) according to (1), (2) and (3), but for the initial set \(\Gamma _1:=\{0,1\}\). Now, we will define particular sequences \((\gamma ^k)\subset \Gamma \). The definition will be inductive:

where for every \(\beta ^1=(\beta ^1_1,\beta ^1_2,\ldots ),\ldots ,\beta ^m=(\beta ^m_1,\beta ^m_2,\ldots )\), we put

Then for every \(k\in \mathbb {N}\), put \(c^k:=\lceil \frac{k}{m}\rceil \) (where \(\lceil a\rceil \) is the ceiling of a). Clearly, \(c^1=\cdots =c^m=1\) and \(c^{k}=\min \{c^{k-m},\ldots ,c^{k-1}\}+1\) for \(k\ge m+1\).

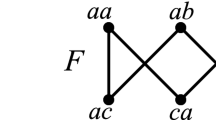

To understand the above definitions better, let’s see how they work for \(m=2\) and \(m=3\):

Example 3.1

Assume that \(m=2\). Then:

and for \(m=3\),

The above suggests that the value \(c^k\) is the biggest natural number so that the first \(c^k\) coordinates of \(\gamma ^{k+m}\) “consists only of 1’s”. We state this observation as a lemma.

Lemma 3.2

For every \(k\in \mathbb {N}\), \(\gamma ^{k+m}_{\vert c^k}=(1,(1,\ldots ,1),\ldots ,((\ldots ((1,\ldots ,1),\ldots (1,\ldots ,1))\ldots )))\).

Proof

First extend the definition of \(\tau \) for finite sequences. That is, for every \(j\ge 1\) and \(\beta ^1=(\beta _1^1,\ldots ,\beta ^1_j),\ldots ,\beta ^m=(\beta ^m_1,\ldots ,\beta ^m_j)\in {}_j\Gamma \), set

Clearly, for every \(\beta ^1,\ldots ,\beta ^m\in \Gamma \) and every \(j\in \mathbb {N}\),

Now observe that the assertion of lemma holds for \(k=1,\ldots ,m\) directly by definition. Assume that it is the case for all \(i\le k\), for some \(k\ge m\). If \(c^{k+1}=c^k\), then by (5),

Hence, by the inductive assumption and a fact that \(c^{k}-1=\min \{c^k,\ldots ,c^{k-m+1}\}\), we get the assertion.

Now if \(c^{k+1}=c^k+1\), then by (5),

Hence, by the inductive assumption and a fact that \(c^{k}=c^{k-1}=\cdots =c^{k-m+1}\}\), we also get the assertion. \(\square \)

Now fix, additionally, a natural number \(n\in \mathbb {N}\) and let \(\tilde{\Omega }\) be the code space defined as earlier, but for starting set \(\tilde{\Omega }_0=\{0,1,\ldots ,n\}\). For every \(k\in \mathbb {N}\), we will define a certain family \([\gamma ^k]\subset \tilde{\Omega }\). The definition will be, as usual, inductive:

Roughly speaking, \([\gamma ^k]\) consists of those sequences from \(\tilde{\Omega }\) which has zeros exactly in the same places as \(\gamma ^k\).

Now fix a probability vector \(\vec {p}=(p_1,\ldots ,p_n)\). For every \(k\in \mathbb {N}\) and every \(\alpha \in [\gamma ^k]\) we will define \(p_\alpha \):

Note that we can rewrite the second part in the following way:

The next lemma shows that sums of \(p_\alpha \)’s among each \([\gamma ^k]\) are equal to 1.

Lemma 3.3

In the above frame, for every \(k\in \mathbb {N}\), \(\sum _{\alpha \in [\gamma ^{k}]}p_\alpha =1\).

Proof

For \(k=1,\ldots ,m\), the assertion follows directly from definition. Now assume that it is the case for all \(i< m+k\), for some \(k\ge 1\). Then

\(\square \)

Now, for a set (or metric space) X and \(k\in \mathbb {N}\), we will define the set (or a metric space) \(\tilde{X}_k\):

Finally, for a GIFS \(\mathcal {F}=\{f_1,\ldots ,f_n\}\) on a metric space X of order m, we will define families \(\tilde{\mathcal {F}}_k=\{\tilde{f}_\alpha :\tilde{X}_k\rightarrow X:\alpha \in [\gamma ^k]\}\), \(k\in \mathbb {N}\) of certain maps. First we define \(\tilde{\mathcal {F}}_1=\cdots =\tilde{\mathcal {F}}_m=\{{\text {Id}}_X\}\), where \({\text {Id}}_X\) is the identity function. Assume that we have already defined \(\tilde{\mathcal {F}}_{i}=\{\tilde{f}_\alpha :\tilde{X}_i\rightarrow X:\alpha \in [\gamma ^i]\}\) for all \(i< k+m\) for some \(k\ge 1\). Then for every \(\alpha =(\alpha _1,\alpha _2,\ldots )\in [\gamma ^{k+m}]\) and every \(x=(x_1,\ldots ,x_m)\in \tilde{X}_{k+m}=\tilde{X}_{k}\times \cdots \times \tilde{X}_{k+m-1}\), we define

Finally, we put \(\tilde{\mathcal {F}}_{k+m}:=\{\tilde{f}_\alpha :\alpha \in [\gamma ^{k+m}]\}\).

The following result shows a relationship between elements of \(\mathcal {F}_k\) and \(\tilde{\mathcal {F}}_k\) (recall notions from Sect. 2.2).

Lemma 3.4

In the above frame, assume that \(D\subset X\) satisfies \(\mathcal {F}(D,\ldots ,D)\subset D\). Then for every \(k\in \mathbb {N}\) and \(\alpha \in [\gamma ^{k+m}]\),

Proof

First let \(k=1\) and \(\alpha =(\alpha _i)\in [\gamma ^{1+m}]\). Then \(\alpha =(\alpha _1,(0,\ldots ,0),\ldots )\), \(c^{1}=1\) and hence

Now assume that the inclusion holds for all \(1\le i\le k\) for some \(k<m\) and let \(\alpha =(\alpha _i)\in [\gamma ^{k+1+m}]\). Then \(\alpha =(\alpha _1,(0,\ldots ,0,\alpha _2^{m-k+1},\ldots ,\alpha _2^m),\ldots )\), \(c^{1}=\cdots =c^{k+1}=1\) and hence

Now assume that the inclusion holds for all \(1\le i\le k\) for some \(k\ge m\), and let \(\alpha =(\alpha _i)\in [\gamma ^{k+1+m}]\). Then, setting \(c:=\min \{c^{k-m+1},\ldots ,c^{k}\}\), we have

This ends the inductive proof. \(\square \)

Now, for a fixed probability Borel measures \(\mu _1,\ldots ,\mu _m\in \mathcal {P}(X)\), define the sequence of probability Borel measures \(\tilde{\mu }_1,\tilde{\mu }_2,\ldots \) on \(\tilde{X}_1,\tilde{X}_2,\ldots \), respectively, according to the inductive formula:

At the end of this section we observe that the sequence \((\tilde{\mu }_k)\) remains zero outside the supports of \(\mu _i\hbox {s}\).

Lemma 3.5

In the above frame, let C be a Borel set so that \({\text {supp}}(\mu _1)\cup \cdots \cup {\text {supp}}(\mu _m)\subset C\). Then for every \(k\ge 1\), \(\tilde{\mu }_{k+m}(\tilde{C}_{k+m})=1\).

Proof

For \(k=1\) we have:

and if the assetion holds for \(k\ge 1\), then

\(\square \)

4 Main results

4.1 Invariant measure on the code space

We use all notations from previous sections (in particular, we fix \(n,m\in \mathbb {N}\)). Additionally, let \(\vec {p}=(p_1,\ldots ,p_n)\) be a probability map vector. We start by defining a particular sequence of measures \(\mu _k\), \(k\in \mathbb {N}\). First, let \(\mu _1\) be the measure on \(\Omega _1\) defined by \(\mu _1(\{i\}):=p_i\) for \(i=1,\ldots ,n\). Assume that we defined \(\mu _k\) on \(\Omega _k\) for some \(k\in \mathbb {N}\). Then let

e.g., \(\mu _{k+1}\) is the product measure on \(\Omega _{k+1}\). Finally, let

be the product measure on \(\Omega \). Then \(\mu _{(\Omega ,\vec {p})}\) is a probability Borel measure (we consider the metric d on \(\Omega \)). Next we observe that \(\mu _{(\Omega ,\vec {p})}\) is invariant w.r.t. the canonical GIFS \(\mathcal {T}\) on \(\Omega \).

Theorem 4.1

In the above frame, the measure \(\mu _{(\Omega ,\vec {p})}\) is \(M_{(\mathcal {T},\vec {p})}\) - invariant, that is

Proof

For any \(k\in \mathbb {N}\) and a sequence \(\alpha \in {}_k\Omega \), let \(B_\alpha :=\{\beta \in \Omega :\beta _{\vert k}= \alpha \}\). We first show that (7) holds for sets \(B_\alpha \). Hence let \(\alpha =(\alpha _1,(\alpha _2^1,\ldots ,\alpha _2^m),\ldots ,(\alpha _k^1,\ldots ,\alpha _k^m))\in {}_k\Omega \). At first observe that if \(i\ne \alpha _1\), then \(\tau _i^{-1}(B_\alpha )=\emptyset \). On the other hand, for \(i=\alpha _1\), we have

Hence we have:

Now, as each cylinder \(A_1\times \cdots \times A_k\times \Omega _{k+1}\times \cdots \) is a disjoint union of finitely many sets of the form \(B_\alpha \), we automatically get the equality (7) for such cylinderes. Hence we also reach the full assertion. \(\square \)

4.2 Description of iterations of the Markov operator for GIFSs

In the main result of this section we give a full description of iterations of Markov operators for GIFSs. We use the notations from earlier sections.

Theorem 4.2

Assume that \((\mathcal {F},\vec {p})\) is a probabilistic GIFS on a metric space X and \(\mu _1,\ldots ,\mu _m\in \mathcal {P}(X)\). Define \((\mu _k)\) according to \(\mu _{k+m}:=M_{(\mathcal {F},\vec {p})}(\mu _k,\ldots ,\mu _{k+m-1})\), \(k\in \mathbb {N}\). Then for every \(k\in \mathbb {N}\) and a continuous and bounded \(g:X\rightarrow \mathbb {R}\), it holds:

Proof

For simplicity, write M instead of \(M_{(\mathcal {F},\vec {p})}\).

For \(k=1,\ldots ,m\), the desired equality follows directly from definitions. For \(k=1+m\), by (4), we have

Now assume that the assertion holds for all \(1\le j\le k+m\) for some \(k<m\). Then, using the Fubini theorem, we have

Now assume that the assertion holds for all \(i\le k+m\) for some \(k\ge m\). Following similar lines as above, we get:

(in fact, the above reasoning is also valid for the earlier case, but for the sake of clarification we consider these two cases separately). \(\square \)

4.3 Hutchinson measure for probabilistic GIFSs

We state here and prove the main result of the paper. It says that a probabilistic GIFS consisting of generalized Matkowski contractions on a complete metric space generates the Hutchinson measure.

Theorem 4.3

Assume that \((\mathcal {F},\vec {p})\) is a probabilistic GIFS of order m on a complete metric space X and let \(M_{(\mathcal {F},\vec {p})}:\mathcal {P}(X)\times \cdots \times \mathcal {P}(X)\rightarrow \mathcal {P}(X)\) be the corresponding generalized Markov operator. Define

where \(\Omega \) is the code space for \(\mathcal {F}\).

Then \(\mu _{(\mathcal {F},\vec {p})}\) is the unique Hutchinson measure for \((\mathcal {F},\vec {p})\). In other words,

-

(i)

\(\mu _{(\mathcal {F},\vec {p})}\) is the unique measure which satisfies

$$\begin{aligned} M_{(\mathcal {F},\vec {p})}(\mu _{(\mathcal {F},\vec {p})},\ldots ,\mu _{(\mathcal {F},\vec {p})})=\mu _{(\mathcal {F},\vec {p})} \end{aligned}$$(8) -

(ii)

for every measures \(\mu _1,\ldots ,\mu _m\in \mathcal {P}(X)\), the sequence \((\mu _k)\) defined by \(\mu _{k+m}:=M_{(\mathcal {F},\vec {p})}(\mu _k,\ldots ,\mu _{k+m-1})\) for \(k\ge 1\), converges to \(\mu _{(\mathcal {F},\vec {p})}\) with respect to the Monge–Kantorovich metric;

-

(iii)

\({\text {supp}}(\mu _{(\mathcal {F},\vec {p})})=A_\mathcal {F}\).

Proof

For simplicity, we will write \(\mu _*\) instead of \(\mu _{(\mathcal {F},\vec {p})}\), \(\mu \) instead of \(\mu _{(\Omega ,\vec {p})}\) and M instead of \(M_{(\mathcal {F},\vec {p})}\).

In view of Theorem 2.9(iii), Theorem 4.1 and the simple fact that \((\mu \times \cdots \times \mu )\circ \pi _1^{-1}=(\mu \circ \pi ^{-1})\times \cdots \times (\mu \circ \pi ^{-1})\), we get for every Borel set \(B\subset X\),

Hence we proved that the measure \(\mu _*\) satisfies (8). Its uniqueness will follow from part (ii), which we will show later.

By Lemma 2.10(ii) and Theorem 2.9(iii), we easily see that \({\text {supp}}(\mu _*)=A_\mathcal {F}\), which gives us (iii). Hence it remains to show (ii).

By Lemma 2.10(ii) and Theorem 2.9(iii), we easily see that \({\text {supp}}(\mu _*)=A_\mathcal {F}\), which gives us (iii). It remains to show (ii).

It is enough to prove that for every measures \(\mu _1,\ldots ,\mu _m,\nu _1,\ldots ,\nu _m\in \mathcal {P}(X)\) and \(\varepsilon >0\), there is \(k_0\in \mathbb {N}\) such that for \(k\ge k_0\) and a function \(g:X\rightarrow \mathbb {R}\) with \({\text {Lip}}(g)\le 1\), it holds

Indeed, taking \(\nu _1=\cdots =\nu _m=\mu _*\) and using point (i), the above shows that \(d_{MK}(\mu _k,\mu _*)\rightarrow 0\).

Take any \(\varepsilon >0\) and define

By Theorem 2.9(i), we can find closed and bounded set \(D\supset C\) so that \(\mathcal {F}(D,\ldots ,D)\subset D\). Now for \(j\in \mathbb {N}\), put

Clearly, each \(A_j\) is open and by Theorem 2.9(ii), the family \(A_j\), \(j\in \mathbb {N}\) is a cover of \(\Omega \). By compactness of \(\Omega \) and the fact that the sequence \((A_j)\) is increasing (which again follows from Theorem 2.9(ii)), there is \(j_0\in \mathbb {N}\) such that \(\Omega =A_{j_0}\). Now let \(k_0\) be such that \(c^{k_0}\ge j_0\). Then, in wiev of Lemma 3.4, for every \(k\ge k_0\) and \(\alpha \in [\gamma ^{k+m}]\),

Hence if \(x_0\) is any element of \(\tilde{f}_\alpha (\tilde{D}_{k+m})\), then for every \(g:X\rightarrow \mathbb {R}\) with \({\text {Lip}}(g)\le 1\) and every \(x\in \tilde{D}_{k+m}\), we have

Now choose any \(\alpha \in [\gamma ^{k+m}]\). Taking any \(x_0\in \tilde{f}_\alpha (\tilde{D}_{k+m})\), we have

Note that in the first inequality in the above line we used Lemma 3.5. Finally, using the above, Theorem 4.2 and Lemma 3.3, for every \(k\ge k_0\), we have

\(\square \)

Remark 4.4

Note that in the above reasoning, we can give a simpler proof that \(\Omega =A_j\) for some j. Indeed, taking an appropriate function \(\varphi \) (see definition of a Matkowski contraction), for every \(\alpha \in \Omega \) and \(j\in \mathbb {N}\), we have

Hence there exists j so that for every \(\alpha \in \Omega \), \({\text {diam}}(f_{\alpha _{\vert _j}}(D_j))\le \frac{\varepsilon }{2}\). However, as we will see later in Sect. 5.1, the presented reasoning will be also used when considering a bit different class of GIFSs.

5 Further remarks, related results and open questions

5.1 Generalized Hutchinson measure for topologically contracting pGIFSs

Here we show that the proof of Theorem 4.3 proves also a bit different version of it.

Motivating by the notion of a topologically contracting IFS (TIFS for short, see [1] and [18]), in [4] we defined and studied the GIFSs’ counterpart of this setting. Let us recall its particular version (we restrict here to finite case, see [4, Definition 4.1 and Remark 4.7]).

Definition 5.1

Let X be a Hausdorff topological space, \(m\in \mathbb {N}\) and \(\mathcal {F}=\{f_1,\ldots ,f_n\}\) be a finite family of maps defined on \(X^m\) and with values in X. We say that \(\mathcal {F}\) is a topologically contracting GIFS of order m (TGIFS for short), if the following hold:

-

(i)

for every set \(K\in \mathbb {K}(X)\), there is \(D\in \mathbb {K}(X)\) so that \(K\subset D\) and \(\mathcal {F}(D,\ldots ,D)\subset D\);

-

(ii)

for every set \(D\in \mathbb {K}(X)\) with \(\mathcal {F}(D,\ldots ,D)\subset D\) and \(\alpha \in \Omega \), the set \(\bigcap _{k\in \mathbb {N}}f_{\alpha _{\vert k}}(D_k)\) is a singleton.

Roughly speaking, TGIFSs are GIFSs on topological spaces so that the assertion Theorem 2.9(iib) for compact sets and strengthening of Theorem 2.9(i) hold. As was proved in [4, Theorem 4.14 and Proposition 5.2], TGIFSs satisfy also (iii) from Theorem 2.9 and also the assertion of Theorem 2.4 (where the convergence w.r.t. the Hausdorff–Pompeiu metric is replaced by the convergence w.r.t. the Vietoris topology). In particular, TGIFSs generate attractors. Also, in [4, Proposition 4.11] we proved that GIFSs consisting of generalized Matkowski contractions on compact spaces or Euclidean spaces are topologically contracting.

Following almost the same lines as in the proof of Theorem 4.3, we can get its version for TGIFSs on metric spaces.

Theorem 5.2

Assume that \((\mathcal {F},\vec {p})\) is a probabilistic TGIFS of order m on a metric space X and let \(M_{(\mathcal {F},\vec {p})}:\mathcal {P}(X)\times \cdots \times \mathcal {P}(X)\rightarrow \mathcal {P}(X)\) be the corresponding generalized Markov operator. Define

where \(\Omega \) is the code space for \(\mathcal {F}\).

Then \(\mu _{(\mathcal {F},\vec {p})}\) is the unique Hutchinson measure for \((\mathcal {F},\vec {p})\).

The only difference in the proof is that the family \(A_j\), \(j\in \mathbb {N}\), is a cover of \(\Omega \). Choose \(\alpha =(\alpha _k)\in \Omega \). Then, by definition of a GIFS, the intersection \(\bigcap _{j\in \mathbb {N}}f_{\vert _{\alpha _j}}(D_j)\) is singleton (as here we choose D to be compact). Since the sequence \((f_{\alpha _{\vert _j}}(D_j))\) is decreasing and consists of compact sets, we can easily show that \({\text {diam}}\left( f_{\alpha _{\vert _j}}(D_j)\right) \rightarrow 0\), and hence \(\alpha \in A_j\) for some \(j\in \mathbb {N}\).

5.2 Open questions and problems

In the paper we restricted to most natural GIFSs’ setting, e.g., for probabilistic GIFSs consisting of finitely many maps, defined on metric spaces and consisting of generalized Matkowski contractions or topologically contracting. In the literature, there have been discussed also wider classes of GIFSs.

Miculescu in [12] extended Theorem 2.12 for GIFSs with place dependent probabilities. The difference between standard probabilistic GIFSs is that we assume that probabilities \(p_1,\ldots ,p_n\) are real functions on \(X^m\) so that \(p_1(x)+\cdots +p_n(x)=1\) for every \(x\in X^m\). Making additional contractive assumptions (both on maps from a GIFS and probabilities, analogous to those from Theorem 2.12), Miculescu proved the existence of the Hutchinson measure for such GIFSs. Hence the problem arises

Problem 5.3

Can Theorem 4.3 (and Theorem 5.2) be extended to GIFSs with place dependent probabilities?

Note that it is not clear if we can use our methods without some additional detailing—for example, invariant measure on the code space depends strongly on constant numbers \(p_1,\ldots ,p_n\).

Secelean in [20] extended Theorem 2.12 for GIFSs consisting of infinite number of maps. Hence we can ask:

Problem 5.4

Can Theorem 4.3 (and Theorem 5.2) be extended to GIFSs consisting of infinite number of maps?

It seem that this can be the case—for example, the code space for such GIFSs were investigated in [4]. However, the technicalities can be harder.

As we saw, topologically contracting GIFSs are defined for topological spaces which are not necessarily metrizable. It is known, that the Hutchinson measure exists for any probabilistic topologically contracting IFS (see, e.g., [8]). However, in such a general framework, we have to restrict to special families of Borel measures (Radon measures) and replace the convergence w.r.t. the Monge–Kantorovich metric by weak convergence. Hence the problem arises:

Problem 5.5

Can Theorem 5.2 be extended to TGIFSs on Hausdorff topological spaces (consisting of infinitely many maps)?

It seem that this can be the case, but, probably under some mild additional assumptions related with problems with properties of measures in nonmetrizable spaces. On the other hand, even if the underlying space X is nonmetrizable, then the attractor of a topologically contracting GIFS is always metrizable. Hence, looking just on the Hutchinson measure, the “metric” case seems to be sufficient.

In recent papers [9, 21], the theory of GIFSs was extended to “infinite order”, that is, to maps defined on spaces of bounded sequences of elements of a given space X. In particular, in [9] we defined and studied the code space for such kind of GIFSs and proved counterparts of Theorems 2.4 and 2.9. Hence the question arises:

Problem 5.6

Can Theorem 4.3 be extended to GIFSs of infinite order?

Again, we believe that our methods should work, but technicalities can be much much harder.

References

Banakh, T., Kubiś, W., Nowak, M., Novosad, N., Strobin, F.: Contractive function systems, their attractors and metrization. Topol. Methods Nonlinear Anal. 46(2), 1029–1066 (2015)

Barnsley, M.F.: Fractals Everywhere. Academic Press Professional, Boston (1993)

Bogachev, V.: Measure Theory. Springer, Berlin (2007)

Dumitru, D., Ioana, L., Sfetcu, R.C., Strobin, F.: Topological version of generalized (infinite) iterated function systems. Chaos Solitons Fractals 71, 78–90 (2015)

Hutchinson, J.: Fractals and self-similarity. Indiana Univ. Math. J. 30(5), 713–747 (1981)

Jachymski, J., Jóźwik, I.: Nonlinear contractive conditions: a comparison and related problems. Polish Acad. Sci. 77, 123–146 (2007)

Kunze, H., La Torre, D., Mendivil, F., Vrscay, E.R.: Fractal-Based Methods in Analysis. Springer, Berlin (2012)

Leśniak, K., Snigireva, N., Strobin, F.: Weakly contractive iterated function systems and beyond: a manual. J. Differ. Equ. Appl. https://doi.org/10.1080/10236198.2020.1760258 available at arXive: arXiv:2004.11057

Maślanka, Ł., Strobin, F.: On generalized iterated function systems defined on \(\ell _\infty \)-sum of a metric space. J. Math. Anal. Appl. 461(2), 1795–1832 (2018)

Maślanka, Ł., Strobin, F.: Zero-dimensional compact metrizable spaces as attractors of generalized iterated function systems. Topol. Methods Nonlinear Anal. 53(1), 363–403 (2019)

Matkowski, J.: Integrable solutions of functional equations. Diss. Math. 127, 68 (1975)

Miculescu, R.: Generalized iterated function systems with place dependent probabilities. Acta Appl. Math. 130(1), 135–150 (2014)

Miculescu, R., Mihail, A.: Applications of fixed point theorems in the theory of generalized IFS. Fixed Point Theory Appl. 2008, Article ID 312876. https://doi.org/10.1155/2008/312876

Miculescu, R., Mihail, A.: A Generalization of the Hutchinson Measure. Mediterr. J. Math. 6, 203–213 (2009)

Miculescu, R., Mihail, A.: Generalized IFSs on noncompact spaces. Fixed Point Theory Appl. Volume 2010, Article ID 584215, https://doi.org/10.1155/2010/584215

Mihail, A.: Recurrent iterated function systems. Rev. Roumaine Math. Pures Appl. 53(1), 43–53 (2008)

Mihail, A.: The shift space for recurrent iterated function systems. Rev. Roumaine. Math. Pures Appl. 4, 339–355 (2008)

Mihail, A.: A topological version of iterated function systems. An. Ştiinţ. Univ. Al. I. Cuza, Iaşi, (S.N.), Matematica 58, 105–120 (2012)

Oliveira, E.: The ergodic theorem for a new kind of attractor of a GIFS. Chaos Solitons Fractals 98, 63–71 (2017)

Secelean, N.: Invariant measure associated with a generalized countable iterated function system. Mediterr. J. Math. 11, 361–372 (2014)

Secelean, N.: Generalized iterated function systems on the space \(l^\infty (X)\). J. Math. Anal. Appl. 410(2), 847–858 (2014)

Strobin, F.: Attractors of GIFSs that are not attractors of IFSs. J. Math. Anal. Appl. 422(1), 99–108 (2015)

Strobin, F., Swaczyna, J.: On a certain generalisation of the iterated function system. Bull. Aust. Math. Soc. 87(1), 37–54 (2013)

Strobin, F., Swaczyna, J.: A code space for a generalized IFS. Fixed Point Theory 17(2), 477–494 (2016)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Strobin, F. On the existence of the Hutchinson measure for generalized iterated function systems. Qual. Theory Dyn. Syst. 19, 85 (2020). https://doi.org/10.1007/s12346-020-00420-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s12346-020-00420-2