Abstract

We propose and analyse a mathematical model for infectious disease dynamics with a discontinuous control function, where the control is activated with some time lag after the density of the infected population reaches a threshold. The model is mathematically formulated as a delayed relay system, and the dynamics is determined by the switching between two vector fields (the so-called free and control systems) with a time delay with respect to a switching manifold. First we establish the usual threshold dynamics: when the basic reproduction number \(\,{\mathcal {R}}_0\le 1\), then the disease will be eradicated, while for \(\,{\mathcal {R}}_0>1\) the disease persists in the population. Then, for \(\,{\mathcal {R}}_0>1\), we divide the parameter domain into three regions, and prove results about the global dynamics of the switching system for each case: we find conditions for the global convergence to the endemic equilibrium of the free system, for the global convergence to the endemic equilibrium of the control system, and for the existence of periodic solutions that oscillate between the two sides of the switching manifold. The proof of the latter result is based on the construction of a suitable return map on a subset of the infinite dimensional phase space. Our results provide insight into disease management, by exploring the effect of the interplay of the control efficacy, the triggering threshold and the delay in implementation.

Similar content being viewed by others

1 Introduction

Switching models have been used recently in the compartmental models of mathematical epidemiology to analyze the impact of control measures on the disease dynamics. For example, it has been observed that if the treatment rate [11] or the incidence function [1] is non-smooth, that may lead to various bifurcations. These sharp changes occur in [1, 11] when the total population, or the infected population reaches a threshold level. Such a sudden change may even be discontinuous, for example due to the implementation or termination of an intervention policy such as vaccination or school closures.

Mathematically, such situations are described by Filippov systems, when the phase space is divided into two (or more) parts and the system is given by different vector fields in each of those parts. Examples include sudden changes in vaccination [8, 12], hospitalization [15], transmission [13], travel patterns [6], or the combination of several effects [14]. They have been used for vector borne diseases as well [16]. An overview of the basic theory and applications of switching epidemiological models can be found in [7]. Many of the mathematical challenges appear due to the incompatible behaviours of the vector fields at their interfaces, on the so-called switching manifold.

Switching systems typically assume that the change in the vector field occurs immediately whenever the switching manifold is touched, for example, a threshold in a population variable is reached. However, in reality, implementing a policy may have some time lag, hence it is natural to consider the situation when we switch to the new vector field with some delay after the trajectory intersected the switching manifold. These systems are called delayed relay systems [9], and they are of different mathematical nature than the Filippov systems.

Delayed relay systems have been applied to an SIS model [5], where explicit periodic solutions were constructed for the case of a delayed reduction in the contact rate after the density of infection in the population passed through a threshold value. The dynamics of this discontinuous system was different from its continuous counterpart [3, 4], showing that it is worthwhile to analyse the dynamics of epidemiological systems with delayed switching. The simplistic SIS model of [5] could be reduced to a scalar equation, and here we initiate the study of more realistic and more complex compartmental models in this context.

In particular, in this paper our starting point is an SIR model with switching, which has been thoroughly investigated in Xiao et al. [13]. The model represents circumstances when intervention measures are taken only when the density of infectious individuals is exceeding a certain threshold value. This is expressed by a discontinuous incidence rate, more precisely, the intervention causes a drop in the transmission rate. They showed that the solutions ultimately approach one of the two endemic states of the two structures (the free and the control system), or the so-called sliding equilibrium located on the switching surface, depending on the threshold level.

Here we introduce the possibility of a time delay in the threshold policy. We prove several global stability theorems for the system with delay. An important difference in the dynamics is that while in the model of [13] the existence of limit cycles was excluded, for our model periodic orbits exist, and we prove that by constructing a Poincaré-type return map on a special subset of the phase space. These periodic solutions oscillate around the threshold level. On the other hand, the sliding mode control in [13] does not appear in our system. Our results contribute to the development of a systematic way of designing simply implementable controls that drive the dynamics towards disease control or mitigation.

2 Model Description

The population N is divided into three compartments: susceptible (S), infected (I) and recovered (R). All individuals are born susceptible, and the birth rate is \(\mu >0\) for each compartment. The death rate is also \(\mu \) for each class, and hence the total population \(N=S+I+R\) is constant, which we normalize to unity, \(N=1\). Although in classical SIR models with mass action incidence, the new infections occur with some constant transmission coefficient \(\beta >0\), here we assume that the transmission coefficient depends on the number of infected individuals: If the density of infected individuals reaches a threshold level \(k\in (0,1)\), then the society implements certain control measures, and thereby the transmission rate is reduced from \(\beta \) to \((1-u_*)\beta \) with \(u_*\in (0,1)\). The constant \(u_*\) represents the efforts and the efficacy of the control measures. It is reasonable to assume that this reduction takes place with a time delay \(\tau >0\). If the density of the infected individuals becomes less than k, then the control measures are stopped, again with delay \(\tau >0\). Infected individuals recover with rate \(\gamma >0\), and full lifelong immunity is assumed upon recovery. With these assumptions above, we obtain the following SIR model with delay:

where

\(k\in (0,1)\) and \(u_*\in (0,1) \).

In the special case \(\tau =0\) we obtain a model studied in [13].

A dynamical system is called a delayed relay system [9], if it is governed by a differential equation of the form

where \(\tau >0\), and \(f^1, f^2\) are Lipschitz continuous. The switching function g is typically a piecewise smooth Lipschitz continuous function. The set \( \{ x:g(x)=0 \} \) is called the switching manifold.

Let \((Sys_d)\) denote the system consisting of the first two equations of (1). We consider only these two equations as they are independent of the third one in (1). Note that \((Sys_d)\) is a delayed relay system with \(x=(S,I)\),

and \(g(S,I)=I-k\). Now the switching manifold is the set \(\{(S,I): I= k\}\).

Hereinafter \((Sys_f )\) denotes the free system

and \((Sys_c )\) is for the control system

Let us emphasize that these are 2-dimensional systems consisting of the S- and I-equations of a classical ordinary SIR model. The transmission rate is \(\beta \) for \((Sys_f )\), and it is \((1-u_*)\beta \) for \((Sys_c)\).

As it is well-known, the set

is positively invariant for both \((Sys_f)\) and \((Sys_c)\). For all \((S_0,I_0)\in \varDelta \) and for both \(*\in \{f,c\}\), let

denote the solution of \((Sys_*)\) with

Solution \((S_*,I_*)\) exists on the positive real line. It is also important that if \(I_0=0\), then \(I_*(t)=0\) for all \(t\ge 0\). Condition \(I_0>0\) guarantees that \(I_*\) remains positive on the positive real line. In other words,

is positively invariant w.r.t. both \((Sys_f)\) and \((Sys_c)\).

Because of the delay \(\tau \), the phase space for \((Sys_d)\) has to be chosen as

Given any \((S_0,\varphi )\in X\), the solution \((S,I)= (S(\cdot ;S_0,\varphi ),I(\cdot ;S_0,\varphi ))\) of \((Sys_d)\) is a pair of real functions with the following properties: S is defined and continuous on \([0,\infty )\) with \(S(0)=S_0\), I is defined and continuous on \([-\tau ,\infty )\) with \(I|_{[-\tau ,0]}=\varphi \), furthermore, (S, I) satisfies the integral equation system

for all \(t>0\).

It is obvious that the solutions of \((Sys_d)\) are absolutely continuous, and the first two equations in (1) are satisfied almost everywhere. Throughout the paper \( S'(t) \) and \( I'(t) \) will mean the right-hand derivative when \( I(t-\tau )=k \); this will not cause any confusion.

For all \(t\ge 0\), let \(I_t\) denote the element of \(C([-\tau ,0],[0,1])\) defined by \(I_t(\xi )=I(t+\xi )\), \(\xi \in [-\tau ,0]\).

Consider the following subset of X:

In this paper we only study solutions with initial data in \(X_0\). A further subset of X is

the collection of endemic states, when the disease is present in the population. We will show in Sect. 4 that both \(X_0\) and \(X_1\) are positively invariant for \((Sys_d)\).

3 Equilibria

In case of the free system \( (Sys_f )\), the basic reproduction number is

The reproduction number of the control system \( (Sys_c )\), what we call control reproduction number, is given by

Next we recall the equilibria and their stability properties for the ordinary systems \((Sys_f)\) and \((Sys_c)\).

The disease-free equilibrium for both \((Sys_f)\) and \((Sys_c)\) is

The endemic equilibrium for \((Sys_f)\) is

It exists only if \({\mathcal {R}}_{0}>1\).

It is known (see [2]) that \( E^*_0 \) is globally asymptotically stable w.r.t the free system \( (Sys_f )\) if \( {\mathcal {R}}_{0}\le 1\), and it is unstable if \( {\mathcal {R}}_{0}>1\). The endemic state \( E^*_1 \) is asymptotically stable w.r.t \( (Sys_f )\) if \( {\mathcal {R}}_{0}>1,\) and its region of attraction is \(\varDelta _1\).

The endemic equilibrium for \((Sys_c)\) is

It exists for \({\mathcal {R}}_{u_*}>1\).

\( E^*_2 \) is asymptotically stable w.r.t \( (Sys_c )\) and attracts \(\varDelta _1\) if \( {\mathcal {R}}_{u_*}>1.\) The disease free equilibrium \( E^*_0\) is globally asymptotically stable w.r.t \( (Sys_c )\) if \( {\mathcal {R}}_{u_*}\le 1\), and it is unstable if \( {\mathcal {R}}_{u_*}>1\).

Next we examine what are the equilibria for \((Sys_d)\).

For all \(I^{*}\in [0,1]\), let \(I^{*}\) also denote the constant function in \(C([-\tau ,0],[0,1])\) with value \(I^{*}\). This will not cause any confusion but ease the notation. If we write \((S^{*},I^{*})\in \varDelta \), then \(I^{*}\) is considered to be a real number in [0, 1]. Notation \((S^{*},I^{*})\in X_0\) means that \(I^{*}\) is an element of \(C([-\tau ,0],[0,1])\). In accordance, we may consider \(E^*_0,E^*_1\) and \(E^*_2\) as elements of \(X_0\).

\((S^{*},I^{*})\in X_0\) is an equilibrium for \((Sys_d)\) if and only if \((S^{*},I^{*})\in \varDelta \) satisfies the algebraic equation system

As above, we call an equilibrium \((S^{*},I^{*})\) disease-free if \(I^*=0\), and endemic if \(I^*>0\).

By calculating the solutions of (6), we obtain the following result.

Proposition 1

The unique disease-free equilibrium for the delayed relay system \(( Sys_d )\) is \( E^*_0 \in X_0 \), and it exists for all choices of parameters.

If \( {\mathcal {R}}_{0}\le 1\), then there is no endemic equilibrium for \(( Sys_d )\). If \( {\mathcal {R}}_{0}>1\), then we distinguish three cases.

-

(a)

If

$$\begin{aligned} {\mathcal {R}}_{0}>1\quad \text {and} \quad {\mathcal {R}}_{0}[\mu -(\mu +\gamma )k]<\mu , \end{aligned}$$(C.1)then \( E^*_1\in X_0 \) is the unique endemic equilibrium for \(( Sys_d )\).

-

(b)

If

$$\begin{aligned} \mu \le {\mathcal {R}}_{0}[\mu -(\mu +\gamma )k]<\mu /(1-u_*), \end{aligned}$$(C.2)then there is no endemic equilibrium for \(( Sys_d )\).

-

(c)

If

$$\begin{aligned} {\mathcal {R}}_{0}[\mu -(\mu +\gamma )k]\ge \mu /(1-u_*), \end{aligned}$$(C.3)then \( E^*_2 \in X_0 \) is the unique endemic equilibrium.

Note that if either (C.2) or (C.3) holds, then necessarily \({\mathcal {R}}_{0}>1\). In addition, conditions (C.1), (C.2) and (C.3) together cover the case \({\mathcal {R}}_{0}>1\).

Proof

It is easy to see that \( (S^{*},0) \) satisfies (6) if and only if \(S^{*}=S^{*}_{0}=1\). Moreover, \( (S^{*}_{0},I^{*}_{0})=(1,0) \) is a solution of (6) without any restrictions on the parameters. So the first statement of the proposition is true.

We may now assume that \(I_*>0\) and thus \( S^{*}<1 \). Let us divide (6) by \((\mu +\gamma )\) and examine the equivalent form

As \( I^{*}\ne 0 \), the second equation gives that

It comes from \(S^{*}<1\) and the definition of u that (7) cannot be satisfied if \( {\mathcal {R}}_{0}\le 1 \), so in that case there is no endemic equilibrium.

If \( {\mathcal {R}}_{0}>1 \), then we need to distinguish two cases. If \( 0<I^{*}<k \) and hence \(u(I_*)=0\), then one can easily see that \( (S^{*},I^{*})=(S^{*}_1,I^{*}_1). \) If \( I^{*}\ge k \) and \(u(I_*)=u_*\), then \( (S^{*},I^{*})=(S^{*}_2,I^{*}_2).\)

To complete the proof, we need to guarantee that \( 0<I^{*}_{1}<k \) and \( I^{*}_{2}\ge k \). Using \( \beta ={\mathcal {R}}_{0}(\mu +\gamma ) ,\) one can show that inequality

is satisfied if and only if

Similarly, \( I^{*}_{2}\ge k \) is equivalent to

Statements (a)–(c) of the proposition follow from the calculations above. \(\square \)

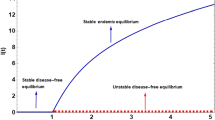

In Fig. 1 we divide the \((k,u_*)\) plane into three regions acoording to Cases (a)–(c) of Proposition 1 in order to show the interplay between threshold level k and control intensity \(u_*\).

4 Construction of Solutions

In this section we show that if \((S_0,\varphi )\in X_0 \) (\((S_0,\varphi )\in X_1 \)), then the solution (S, I) exists, and \((S(t),I_t)\in X_0 \) (\((S(t),I_t)\in X_1 \)) for each \(t\ge 0\).

First we need the following result for the ordinary systems \((Sys_f)\) and \((Sys_c)\).

Proposition 2

Let \(*\in \{f,c\}\). For any \(k\in (0,1)\) and any non-constant solution \((S_*,I_*)\) of \((Sys_*)\), the function

has a finite number of zeros on each interval of finite length.

Proof

We give a proof for the free system \((Sys_f)\). The proof for \((Sys_c)\) is analogous.

Consider the second equation of \(( Sys_f )\):

If \(R_0\le 1\) and \(I_f(t)=k\in (0,1)\) for some \(t\ge 0\), then \( S_f(t)\le 1- I_f(t)<1 \) and \(I'_f(t)<0\). The statement is clearly true in this case.

Now assume that \(R_0>1\). Recall from [2] that

is a Lyapunov function for \((Sys_f)\), and \({\dot{V}}(S,I)<0\) for all \((S,I)\in \varDelta _1{\setminus } \{E_1^*\}\). For any \(k\in (0,1){\setminus }\{I_1^*\}\), consider the nontrivial level set

which is a simple closed curve. The property \({\dot{V}}(S,I)<0\) guarantees that \(int (H_k)\) is positively invariant for \((Sys_f)\), where \(int (H_k)\) denotes the interior of \(H_k\).

Observe that \((S_1^*,k)\in H_k\). One can easily check that \([0,1]\ni S\mapsto V(S,k)\in {\mathbb {R}}\) has a strict minimum at \(S=S_1^*\), which implies that the segment \(I=k\) and \(H_k\) have no common point besides \((S_1^*,k)\). The segment \(I=k\) is tangential to \(H_k\) at \((S_1^*,k)\), see Fig. 2.

We also see from (8) that if \(I_f(t)>0\) for some \(t\in {\mathbb {R}}\), then

and

A direct consequence of (9) is the following observation for a non-constant solution \((S_f,I_f)\). If there exists \({\tilde{t}}\ge 0\) such that \(I_f\left( {\tilde{t}}\right) =k\) and \(I'_f\left( {\tilde{t}}\right) =0\), then \(S_f\left( {\tilde{t}}\right) =S_1^*\) by (9). Due to the Lyapunov function, there are no periodic solutions, hence there is no \(t\ne {\tilde{t}}\) such that \((S_f(t),I_f(t))=(S_1^*,k)\). This yields, again by (9), that if \(I_f(t)=k\) for some \(t\in [0,\infty ){\setminus }\{{\tilde{t}}\}\), then \(I'_f(t)\ne 0\).

Now suppose for contradiction that for some \(k\in (0,1)\) and non-constant solution \((S_f,I_f)\), the function \([0,\infty )\ni s\mapsto I_f(s)-k\in {\mathbb {R}}\) has an infinite number of zeros in a finite closed interval \(J\subset [0,\infty )\). Let \(B=\{t\in J:I_f(t)=k\}.\) The compactness of J ensures that B has an accumulation point \(t_a\) in J. Necessarily \(I_f(t_a)=k\).

Let \(\varepsilon >0\) be arbitrary. Next we show that B has elements \(t_1<t_2<t_3\) in \((t_a-\varepsilon , t_a+\varepsilon )\) such that

One can prove this claim as follows. Either \((t_a-\varepsilon ,t_a)\) or \((t_a,t_a+\varepsilon )\) contains elements of B arbitrary close to \(t_a\). Suppose \((t_a-\varepsilon ,t_a)\) is such an interval. By decreasing \(\varepsilon \), we may assume that the point \({\tilde{t}}\) (if exists) is not in \((t_a-\varepsilon ,t_a)\), and hence

Choose any \(t_1\in B\cap (t_a-\varepsilon ,t_a)\) with \(I'_f(t_1)<0\). It is easy to see that such \(t_1\) exists, otherwise we cannot have several zeros in \((t_a-\varepsilon ,t_a)\). Then one can give \(\delta _1>0\) with \(t_1+\delta _1<t_a\) such that \(I_f(t)<k\) for \(t\in (t_1,t_{1}+\delta _1)\). As \(B\cap (t_1+\delta _1,t_a)\) is bounded and nonempty (actually it has an infinite number of elements), the infimum \(t_2=\inf \{B\cap (t_1+\delta _1,t_a)\}\) exists. It is clear that \(t_1<t_2<t_a\), and \(I_f(t_2)=k\) by the continuity of \(I_f\). Observation (11) guarantees that \(I'_f(t_2)\ne 0\). It comes from the definition of \(t_2\) that \(I_f(t)<k\) for \(t\in (t_1,t_2)\) and \(I'_f(t_2)>0\). As next step, one can give \(\delta _2>0\) such that \(t_2+\delta <t_a\) and \(I_f(t)>k\) for \(t\in (t_2,t_{2}+\delta _2)\). Set \(t_3=\inf \{B\cap (t_2+\delta _2,t_a)\}\). Then \(t_3\) satisfies the properties given in the claim. In the second case, when B has infinite elements in \((t_a,t_a+\varepsilon )\), we can find \(t_3\) first, then \(t_2\) and \(t_1\) in an analogous way.

We claim that \(I_f'(t_a)=0\), and therefore \(S_f(t_a)=S_1^*\) (that is, \(t_a= {\tilde{t}}\)). Indeed, if \(I_f'(t_a)\) is positive (negative), then \(I_f'(t)\) is positive (negative) for all \(t\in B\) in a small neighbourhood of \(t_a\) by the continuous differentiability of \(I_f\). This contradicts our previous claim. So \(S_f(t_a)=S_1^*\).

As the solution is non-constant, \((S_f(t_a),I_f(t_a))=(S_1^*,k)\ne (S_1^*,I_1^*)\), and we conclude that \(k\ne I_1^*\). We consider the case \(k>I_1*\). (The case \(k<I_1*\) can be handled in similarly.)

Recall that \((S_f(t_a),I_f(t_a))=(S_1^*,k)\) is the intersection point of the segment \(I=k\) and \(H_k\). We have seen that there exist \(t_1\) and \(t_{2}\) arbitrary close to \(t_a\) such that \(I_f(t_1)=I_f(t_{2})=k\), \(I'_f(t_1)<0<I'_f(t_{2})\) and \(I_f(t)<k\) for \(t\in (t_1,t_{2})\). Remark (10) implies that \(S_f(t_1)<S^{*}_{1}<S_f(t_{2})\). We may also achieve (using the boundedness of \(S'_f\) and \(I'_f\)) that \((S_f(t),I_f(t))\) is arbitrary close to \((S_1^*,k)\) on \((t_1,t_{2})\). This gives the existence of \(t_*\in (t_1,t_{2})\) with \((S_f(t_*),I_f(t_*))\in int(H_k)\). The positive invariance of \(int(H_k)\) then gives \((S_f(t),I_f(t))\in int(H_k)\) for all \(t\ge t_*\) contradicting \(I_f(t_{2})=k\).

This means that our initial assumption was wrong, and the proposition is true also in the \(R_0>1\) case.\(\square \)

Now we ready to prove the positive invariance of \(X_0\).

Proposition 3

If \((S_0,\varphi )\in X_0\), then a unique solution (S, I) exists, and \((S(t),I_t)\in X_0\) for each \(t\ge 0\).

Proof

Set

such that \(\varphi (\xi )< k\) on intervals of the form \((-\tau +t_{2n},-\tau +t_{2n+1})\) and \(\varphi (\xi )>k\) on intervals of the form \((-\tau +t_{2n-1},-\tau +t_{2n})\), where \(n\in [0,N/2]\) is an integer. (We omit the case when \(\varphi (\xi )> k\) on \((-\tau +t_{2n},-\tau +t_{2n+1})\) and \(\varphi (\xi )<k\) on \((-\tau +t_{2n-1},-\tau +t_{2n})\) because that can be handled analogously.)

Under the assumptions above, \(I(t-\tau )<k\) and \(u(I(t-\tau ))=0\) for \(t\in (0,t_1)\). Hence the solution (S, I) of \((Sys_d)\) coincides with a solution of \((Sys_f)\) on \([0,t_1]\):

for \(t\in [0,t_1]\). Since \(I(t-\tau )>k\) and thus \(u(I(t-\tau ))=u_*\) for \(t\in (t_1,t_2)\), we see that

for \(t\in [t_1,t_2]\). Similarly, (S, I) is given by a specific solution of \((Sys_f)\) or \((Sys_c)\) on all intervals of the form \([t_{m-1},t_m]\), where \(m\in \left\{ 1,\ldots ,N\right\} \). Hence the solution exists on \([0,\tau ]\).

As the functions \(t\mapsto I_f(t)-k\) and \(t\mapsto I_c(t)-k\) have finite number of sign changes on intervals of finite length by Proposition 2, we deduce that \(t\mapsto I(t)-k\) also admits a finite number of sign changes on \([0,\tau ]\).

Iterating this argument first for \([\tau ,2\tau ]\), then for all intervals of the form \([j\tau ,(j+1)\tau ]\), \(j\ge 2\), we see that the solution exists on the positive real line. The uniqueness of (S, I) comes at once from the uniqueness of solutions for \((Sys_f)\) and \((Sys_c)\). In addition, it is clear that \(t\mapsto I(t)-k\) has a finite number of sign changes on intervals of finite length. The way we obtain the solutions of \((Sys_d)\) and the positive invariance of \(\varDelta \) for \((Sys_f)\) and \((Sys_c)\) also imply that \(S(t)\in [0,1]\), \(I(t)\in [0,1] \) and \( S(t)+I(t)\le 1\) for all \(t\ge 0\). Summing up, \((S(t),I_t)\in X_0\) for all \(t\ge 0\).\(\square \)

Since the solutions of \((Sys_d)\) with initial data in \(X_0\) are determined by the solutions of \((Sys_f)\) and \((Sys_c)\) as in the previous proof, and as the set \(\varDelta _1=\{ (S,I) \in \varDelta : I>0 \}\) is positively invariant for both \((Sys_f)\) and \((Sys_c)\), we see that \(X_1\) is positively invariant for \((Sys_d)\) too.

5 Threshold Dynamics: Disease Extinction and Persistence

Theorem 1

If \( {\mathcal {R}}_{0} \le 1 \), then \( E^*_0 \) is globally asymptotically stable for the delayed relay system \(( Sys_d )\) (that is, \( E^*_0 \) is asymptotically stable and attracts \(X_0\)). If \( {\mathcal {R}}_{0} >1 \), then \( E^*_0 \) is unstable w.r.t. \(( Sys_d )\), and the disease uniformly persists in the population.

Proof

First note that the solutions of \((Sys_d)\) coincide with the solutions of the free system \((Sys_f)\) in a small neighbourhood of \( E^*_0 \). Therefore \( E^*_0 \) is a stable equilibrium for \(( Sys_d )\) if and only if it is stable for \((Sys_f)\).

Let \( {\mathcal {R}}_{0}\le 1 \). We only need to prove the global attractivity of \( E^*_0 \) on \(X_0\). Suppose for contradiction that I(t) does not converge to 0 as \(t\rightarrow \infty \) for some solution (S, I). By the second equation of \(( Sys_d )\),

that is, I is nonincreasing. As I is nonnegative and does not converge to 0, necessarily there exists a constant \( c>0 \) such that \(I(t)\ge c\) for all \(t\ge 0\) and \(I(t)\rightarrow c\) as \( t\rightarrow \infty \). Then \( S(t)\le 1-c \) and

for all \(t\ge 0\). It follows that

which implies \( I(T)<0 \) for all sufficiently large T, a contradiction. So \( I(t)\rightarrow 0 \) as \(t\rightarrow \infty \).

Next we prove that if \( {\mathcal {R}}_{0}\le 1 \), then \(S(t)\rightarrow 1\) as \(t\rightarrow \infty \) for all solutions (S, I). It is clear from the previous paragraph that there exists \( T(\varepsilon ) \) for each \( \varepsilon >0 \) such that \( I(t)<\varepsilon \) for all \( t\ge T(\varepsilon ). \) Then for \( t\ge T(\varepsilon ), \)

which implies

Note that \( \varepsilon >0 \) can be arbitrary small. Thereby

We know on the other hand that \( S(t)\le 1 \) for all \( t\ge 0. \) Summing up, \( \lim _{t\rightarrow \infty } S(t) \) exists and equals one.

We have verified that \(S(t)\rightarrow 1\) and \(I(t)\rightarrow 0\) as \(t\rightarrow \infty \) for every solution (S, I) if \( {\mathcal {R}}_{0}\le 1 \).

To show the persistence, let \( {\mathcal {R}}_{0}>1 \), and consider a solution with \(I(0)>0\). If there exists arbitrarily large t with \(I(t)\ge k\), then \(\limsup _{t \rightarrow \infty } I(t)\ge k\). Otherwise, there is a \(t_*\) such that \(I(t)<k\) for all \(t>t_*\). In this case, the solution follows \((Sys_f)\) for \(t\ge t_*+\tau \):

Then \(\lim _{t \rightarrow \infty } I(t)= I_1^*.\) In any case, we can conclude that \(\limsup _{t \rightarrow \infty } I(t)\ge \min \{k,I_1^*\},\) which means uniform weak persistence. Since the solutions of \((Sys_f)\) and \((Sys_c)\) both have uniformly bounded derivatives on \(\varDelta \), by the Arzelà-Ascoli theorem our solution operators \(\varPhi (t): X_0 \ni (S_0,\varphi ) \mapsto (S_t,I_t) \in X_0\) are compact for \(t>\tau \), hence the semiflow \(\varPhi \) has a compact attractor in \(X_0\). We can apply Corollary 4.8 from [10] to conclude (strongly) uniform persistence: there exists a \(\delta >0\) such that for all solutions with \(I(0)>0\), \(\liminf _{t \rightarrow \infty } I(t)\ge \delta .\)\(\square \)

6 Case (a): \(E_1^*\) is GAS for Large k

In this section let \({\mathcal {R}}_0>1\) and \(k>k_0\), where \( k_0=1-1/{\mathcal {R}}_0 \). It is easy to see that these conditions imply (C.1). Hence Proposition 1 gives that \( E_1^* \) is the unique endemic equilibrium for \( (Sys_d) \) and \( I^*_1<k \).

Figure 3 shows the segment \(I=k_0\) in \(\varDelta \).

The main result of this section is the following global stability theorem.

Theorem 2

If \({\mathcal {R}}_0>1\) and \( k>k_0 \), then \( E_1^* \) is asymptotically stable with respect to \( (Sys_d) \), and it attracts the set \(X_1\).

The proof is based on the next simple observation.

Proposition 4

Assume that \({\mathcal {R}}_0>1\) and \(k>k_0\). If \( I(t_0)<k \) for some \( t_0\ge 0 \), then \( I(t)<k \) for all \( t\in [t_0,\infty ) \).

Proof

Suppose for contradiction that there exists \( t_*>t_0 \) such that \( I(t)<k \) for \( t\in [t_0,t_*)\) and \( I(t_*)=k \). Then necessarily \( I'(t_*)\ge 0 \). On the other hand,

and thus

independently of the value of \(I(t_*-\tau )\). This is a contradiction, so the proposition is true. \(\square \)

Proof of Theorem 2

As \(I_1^*<k\), the solutions of \((Sys_d)\) coincide with solutions of the free system \((Sys_f)\) in a small neighborhood of \( E^*_1 \). Since \( E^*_1 \) is stable for \( (Sys_f )\), this fact implies that \( E^*_1 \) is a stable equilibrium also for \(( Sys_d )\). We only need to prove that the region of attraction is \(X_1\).

Consider an arbitrary solution (S, I) of \( (Sys_d) \) with initial data in \(X_1\).

We claim there exists \( t_0\ge 0 \) such that \( I(t_0)<k \). Indeed, suppose for contradiction that \( I(t)\ge k \) for all \( t \in [0,\infty ) \). Then we have

If \({\mathcal {R}}_{u_*}>1\), then \(E^*_2 \) attracts the set \(\varDelta _1=\{ (S,I) \in \varDelta :I>0 \}\) w.r.t. \((Sys_c)\), and hence \( I(t)\rightarrow I_2^*<k\) as \(t\rightarrow \infty \). If \({\mathcal {R}}_{u_*}\le 1\), then \( I(t)\rightarrow 0<k\) as \(t\rightarrow \infty \) by the global attractivity of \(E^*_0 \) for \((Sys_c)\). In both cases we obtained a contradiction.

One can now use Proposition 4 with this \( t_0 \) to obtain that \( I(t)<k \) for \( t\in [t_0,\infty )\). Then (S, I) coincides with the subsequent solution of \( (Sys_f) \) on \( [t_0+\tau ,\infty ) \):

Recall that \(E^*_1 \) attracts \(\varDelta _1\) w.r.t. \((Sys_f)\). Also note that \(I(t)>0\) for all \(t\ge 0\) by the positive invariance of \(X_1\). We conclude that \((S(t),I(t))\rightarrow E^*_1 \) as \( t\rightarrow \infty \).

Summing up, \( E^*_1 \) is asymptotically stable and attracts \(X_1\) w.r.t. \( (Sys_d) \). \(\square \)

7 Case (b): Periodic Orbits in the Absence of Endemic Equilibria

Recall from Proposition 1 that (\( Sys_d \)) has no endemic equilibria if

In more detail, condition (12) implies that the second coordinate \(I^*_1\) of \( E^*_1 \) is greater than k (see the proof of Proposition 1), and hence \( E^*_1 \) is not an equilibrium for (\( Sys_d \)). If \( {\mathcal {R}}_{u_*}>1 \), then \( E^*_2 \) exists for \((Sys_c)\), but \( I^*_2<k\), and thus \( E^*_2 \) is not an equilibrium for (\( Sys_d \)) either. If \( {\mathcal {R}}_{u_*}\le 1 \), then \(E^*_0\) is the unique equilibrium for both \((Sys_c)\) and \((Sys_d)\).

The aim of this section is to show that the absence of endemic equilibria implies the existence of periodic orbits in the \( {\mathcal {R}}_0>1 \) case – at least for small \(\tau \).

Theorem 3

If (12) holds and \( \tau \) is small enough, then the delayed relay system \(( Sys_d )\) has a periodic solution.

In order to prove this theorem, first we need to recall how the solutions of \((Sys_f)\) and \((Sys_c)\) behave in \(\varDelta _1\).

If \( {\mathcal {R}}_0>1 \), i.e., \(E^*_1=(S^*_1,I^*_1) \) is an endemic equilibrium for \( (Sys_f) \), then the curves \( S=S^*_1 \) and \( (\mu +\beta I)S=\mu \) are the null-isoclines for \( (Sys_f) \), see Fig. 4a. Analyzing the vector field, one sees that

Moreover, the above inequalities are strict in the interior of \( A_i \), \( i \in \{1,2,3,4\} \).

The null-isoclines and the vector field for (a): the free system \( (Sys_f )\) in case \( {\mathcal {R}}_0>1 \), (b): the control system \( (Sys_c )\) in case \( {\mathcal {R}}_{u_*}>1 \). The isoclinic curves for the free system \( (Sys_f) \) are \(g^1_f= \{(S,I)\in \varDelta _1: \mu -\mu S - \beta SI=0\} \) and \(g^2_f= \{(S,I)\in \varDelta _1: S=S^*_1\} \). The isoclinic curves for the control system \( (Sys_c) \) are \(g^1_c= \{(S,I)\in \varDelta _1: \mu -\mu S - (1-u_*)\beta SI=0\} \) and \( g^2_c=\{(S,I)\in \varDelta _1: S=S^*_2\} \)

It is clear from these observations and the positive invariance of \(\varDelta _1\) that the solutions of \((Sys_f)\) behave as follows.

Remark 1

Let \( {\mathcal {R}}_0>1 \).

-

(i)

Assume that \( (S_0,I_0) \in A_i\), where \( i\in \{1,3\} \). Then either

$$\begin{aligned} \left( S_f(t),I_f(t)\right) = \left( S_f(t;S_0,I_0),I_f(t;S_0,I_0)\right) \in A_i \quad \text{ for } \text{ all } t\ge 0 \end{aligned}$$(in this case the solution converges to \( E^*_1 \) in \(A_i \)), or there exist \( 0<T_1<T_2 \) such that

$$\begin{aligned} \left( S_f(t),I_f(t)\right) \in A_i \quad \text{ for } t \in [0,T_1) \end{aligned}$$(13)and

$$\begin{aligned} \left( S_f(t),I_f(t)\right) \in A_{i+1} \quad \text{ for } t \in [T_1,T_2) \end{aligned}$$(14)(that is, the solution leaves \( A_i \) through the boundary of \( A_{i+1} \)).

-

(ii)

Each solution leaves \( A_i \), \( i\in \{2,4\} \), through the boundary of \( A_{i+1} \): If \( (S_0,I_0) \in A_i\), where \( i\in \{2,4\} \), then there exist \( 0<T_1<T_2 \) such that (13) and (14) hold. Here the index is considered modulo 4, so \( A_5 \) stands for \( A_1 \).

-

(iii)

Assume that \( k<I^*_1 \). If \( 0<I_f(t_*)<k \) for some \( t_* \), then there exists \( t_{**}>t_* \) such that

$$\begin{aligned} S_f(t_{**}) \in [S^*_1,1-k] \quad \text{ and }\quad I_f(t_{**})=k. \end{aligned}$$

Let us now consider \((Sys_c)\). If \( {\mathcal {R}}_{u_*}>1 \), i.e., if \( E^*_2 \) is an endemic equilibrium for \((Sys_c)\), then \( \varDelta _1{\setminus } \{E^*_2 \}\) can be divided up into four subsets \( B_1,B_2,B_3,B_4 \) in an analogous way using the null-isoclines \( S=S^*_2 \) and \( (\mu +(1-u_*)\beta I)S=\mu \), see Fig. 4b. By analyzing the vector field, we get the subsequent information on the behavior of solutions.

Remark 2

If \( {\mathcal {R}}_{u_*}>1 \), then the analogues of Remark 1(i) and (ii) hold for the solutions of \( (Sys_c) \) with \(B_i\) standing instead of \(A_i\), \(i\in \{1,2,3,4\}\). In addition, if \( k>I^*_2 \) and \( I_c(t_*)>k \) for some \( t_* \), then there exist \( t_{**}>t_* \) such that \( S_c(t_{**}) \in [0,S^*_2] \) and \( I_c(t_{**})=k \).

Theorem 3 is the consequence of the subsequent two propositions.

Proposition 5

Assume (12).

- (i):

-

Consider a solution \( (S_f,I_f) \) of \( (Sys_f )\) with \( S_f(0)=S_0\in [S^*_1,1-k] \) and \( I_f(0)=k. \) There exists a time \( T_f>0 \) (independent of \( S_0 \)) such that \( I_f(t)>k \) for \( t \in (0, T_f]. \)

- (ii):

-

Assume in addition that \( {\mathcal {R}}_{u_*}>1 \). Consider a solution \( (S_c,I_c) \) of \( (Sys_c )\) with \( S_c(0)=S_0\in [0,S^*_2] \) and \( I_c(0)=k. \) There exists a time \( T_c>0 \) (independent of \( S_0 \)) such that \( I_c(t)<k \) for \( t \in (0, T_c]. \)

Proof

(i) Condition (12) implies that \(I^*_1>k\). Therefore \( (S_f(0),I_f(0))=(S_0,k) \in A_4\cup A_1 \), see Fig. 4a. It follows from Remark 1(i) and (ii) that either \( I_f(t)>k \) for all \( t>0 \) (the proof is complete in this case with any \( T_f >0\)), or there exists \( T>0 \) such that \( I_f(t)>k \) for all \( t\in (0,T) \) and \( I_f(T) =k \). In the latter case the total change of \( I_f \) on the interval [0, T] is greater than \( 2(I^*_1-k). \) On the other hand, it comes from the \( I_f\)-equation that \( |I_f'(t) |\le \beta +\gamma +\mu \) for all \( t\ge 0. \) Therefore

So set \( T_f=2(I^*_1-k)/(\beta +\gamma +\mu ).\)

(ii) Under the assumptions of the proposition, \( E^*_2 \) is an endemic equilibrium for \( (Sys_c) \) with \(I^*_2<k\), and \( (S_0,k) \in B_2\cup B_3 \), see Fig. 4b. One may apply a reasoning analogous to the proof of statement (i) to show that statement (ii) is true with

\(\square \)

Now consider the subset

Proposition 6

If \( (S_0,\varphi ) \in {\mathcal {A}} \), then the solution \( (S,I)=(S(.;S_0,\varphi ),I(.;S_0,\varphi )) \) of \(( Sys_d )\) is independent of \( \varphi . \) If (12) holds and \( \tau \) is small enough, then there exists a smallest \( t_1=t_1(S_0)>0 \) such that \( (S(t_1),I_{t_1}) \in {\mathcal {A}} \). Moreover, \( S(t_1) \) depends continuously on \( S_0. \)

Proof

For any \( (S_0,\varphi ) \in {\mathcal {A}} \), the solution \( (S,I)=\big (S(.;S_0,\varphi ),I(.;S_0,\varphi )\big ) \) coincides with the subsequent solution of \( (Sys_f )\) on \( [0,\tau ]: \)

see curve \(\varGamma _1\) on Fig. 5.

The solution \( (S,I)=(S(.;S_0,\varphi ),I(.;S_0,\varphi )) \) of \(( Sys_d )\) for \((S_0,\varphi )\in {\mathcal {A}}\) under conditions (12) and \( {\mathcal {R}}_{u_*}>1 \). The blue solid curves \( \varGamma _1\) and \( \varGamma _3\) represent (S, I) when it follows \( (Sys_f) \). The solid red curve \( \varGamma _2 \) represents (S, I) when it follows \( (Sys_c) \). The null-isoclines of \( (Sys_f) \) and \( (Sys_c) \) are the dotted blue and dashed red curves, respectively. The parameters are: \( k= 0.26\), \(\gamma = 1.38\), \(\beta =15.8\), \(\mu =1.3\), \(\tau =1\), \(u_*=0.76\), \(S_0=0.58 \)

It comes from Proposition 5(i) that if \( \tau \le T_f, \) then \( I(t)=I_f(t;S_0,k)>k \) for \( t\in (0,\tau ]\).

Observe that if \( I(t)\ge k \) for \( t\in [0,T] \) with any \( T>\tau , \) then (S, I) coincides with the following solution of \( (Sys_c) \) on \([\tau ,T+\tau ]: \)

see curve \(\varGamma _2\) on Fig. 5.

Next we show the existence of \( t_0>\tau \) such that \( I(t_0)=k\) and \(I(t)>k\) for \(t \in (0,t_0)\). We need to distinguish two cases.

Case \( {\mathcal {R}}_{u_*}\le 1: \) Suppose for contradiction that \( I(t)>k \) for all \(t \in (0,\infty )\) and hence \( I(t)=I_c(t-\tau ;S(\tau ),I(\tau )) \) for \( t \in [\tau ,\infty ). \) The disease free equilibrium \( E^*_0 \) is globally asymptotically stable for \( (Sys_c )\) if \( {\mathcal {R}}_{u_*}\le 1 \), i.e., \( I_c(t)\rightarrow 0\) as \(t\rightarrow \infty .\) This is a contradiction.

Case \( {\mathcal {R}}_{u_*}>1: \) The existence of \( t_0 \) comes from \( I(\tau )>k>I^*_2 \), observation (16) and Remark 2. In this case \( S(t_0) \in [0,S^*_2] \).

It is clear that for \( t\in [t_0,t_0+\tau ] \),

Next we claim that \( I(t)<k \) for \( (t_0,t_0+\tau ] \) if \( \tau \) is small enough. If \( {\mathcal {R}}_{u_*}\leqslant 1 \), then it comes from the \( I_c\)-equation and \( S(t)<1 \) that \( I'(t)<0 \) for \( t \in [t_0,t_0+\tau ) \). So the claim holds in this case. If \( {\mathcal {R}}_{u_*}>1 \), then we apply Proposition 5(ii). It yields that \( I(t)=I_c(t-t_0;S(t_0),k)<k \) for \( (t_0,t_0+\tau ] \) if \( \tau <T_c. \)

Our last observation implies that

for \( t\in [t_0+\tau ,t_0+2\tau ]\). Moreover, if \( I(t)<k \) for \( t \in (t_0,t_1) \) with some \(t_1>t_0+\tau \), then equations (18) hold for all \( t\in [t_0+\tau ,t_1+\tau ]\). Arguing as before, one can actually verify the existence of \(t_1>t_0+\tau \) such that \(I(t)<k \) for \( t \in (t_0,t_1) \), \( S(t_1) \in [S^*_1,1-k] \) and \( I(t_1)=k. \) See curve \(\varGamma _3\) on Fig. 5.

As \( S(t_1) \in [S^*_1,1-k]\), \(I_{t_1}(\theta )<k \) for \( \theta \in [-\tau ,0) \) and \( I_{t_1}(0)=k \), we conclude that \( ( S(t_1),I_{t_1}) \in {\mathcal {A}}. \)

The statement that (S, I) is independent of \( \varphi \) is clear from the first step of the proof.

The continuous dependence of \( S(t_1) \) from \( S_0 \) comes from the fact the solutions of \( (Sys_f )\) and \( (Sys_c )\) depend continuously on initial data. \(\square \)

Proof of Theorem 3

Proposition 6 allows us to define a continuous return map

By the Schauder fixed-point theorem, P admits a fixed point \( \hat{S_0} \in [S^*_1,1-k] \). In addition, let \( {\hat{\varphi }}= I_{t_1}(.,\hat{S_0},\varphi ), \) where \( \varphi \in C([-\tau ,0],{\mathbb {R}}) \) is an arbitrary function with \( \varphi (0)=k \) and \( \varphi (\theta )<k \) for \( \theta \in [-\tau ,0] \). By Proposition 6, \( {\hat{\varphi }} \) is independent of \( \varphi \) and \( (\hat{S_0},{\hat{\varphi }}) \in {\mathcal {A}} \). It is now obvious that solution \( (S(.,\hat{S_0},{\hat{\varphi }}),I(.,\hat{S_0},{\hat{\varphi }})) \) of \(( Sys_d )\) is periodic with minimal period \( t_1 \). \(\square \)

It follows from the proof above that Theorem 3 holds if

Numerical investigations suggest that the theorem holds for larger choices of \(\tau \) as well. This is not surprising as our estimates in Proposition 5 were not sharp (Figs. 6, 7).

8 Case (c): \( E_2^* \) is GAS for Sufficiently Small k

The purpose of this section is to show that \( E_2^* \) attracts \(X_1\) under certain conditions.

Theorem 4

Assume that

and

If \(k\in (0,k_{1})\), then \(E_{2}^{*}\) is the unique endemic equilibrium for \((Sys_{d})\), it is asymptotically stable, and the region of attraction is \(X_1\).

Note that it is possible to satisfy both inequalities (19) and (20) at the same time: for example, if \(\beta =15,\)\(\mu =0.4\), \(\gamma =1\) and \(u_{*}=0.5\), then both (19) and (20) hold.

Also observe that condition \(k\in (0,k_{1})\) implies (C.3). Therefore Proposition 1 already guarantees that \(E_{2}^{*}\) is the unique endemic equilibrium for \((Sys_{d})\). For the second coordinate of \(E_{1}^{*}\), we have \( I^*_1\ge k \).

Figure 8 explains the geometrical position of the segment \(I=k_1\).

Set

It is straightforward to check that both \(E_{1}^{*}\) and \(E_{2}^{*}\) belong to \(\varDelta _2\).

We need the forthcoming two results before proving Theorem 4. First we show that all solutions of \((Sys_{d})\) leave \(\varDelta {\setminus }\varDelta _2\) in finite time. Then we verify the positive invariance of \(\varDelta _2\) independently of the choice of parameters.

Proposition 7

Assume (19) and suppose that \((S(0),I(0))\in \varDelta {\setminus }\varDelta _2\) for a solution (S, I) of \((Sys_d)\). Then there exists \(t_{0}>0\) such that \((S(t_0),I(t_0))\in \varDelta _2\).

Proof

First we claim that if (19) holds, then \(S_f'\) and \(S_c'\) are both positive on the closure

of \(\varDelta {\setminus }\varDelta _2\). Recall from Fig. 4 in Sect. 7 that \(S_f'\) and \(S_c'\) are both positive on the subset

Hence it suffices to show that

We investigate this inequality in the form

The left-hand side is independent of S. Examining the derivative of the right-hand side, it is easy to see that the right-hand side is minimal for \(S=\sqrt{\mu /\beta }\), and it takes the value

We see from assumption (19) that inequality (21) holds for \(S=\sqrt{\mu /\beta }\). Thus it holds for all \(S\in [0,1]\). The proof of the claim is complete.

Let \(M_f\) and \(M_c\) be the minimum of \(S_f'\) and \(S_c'\) on \(\overline{\varDelta {\setminus }\varDelta _2}\), respectively. As \(S_f'\) and \(S_c'\) are both continuous and positive on the compact subset \(\overline{\varDelta {\setminus }\varDelta _2}\), the constants \(M_f\) and \(M_c\) are well-defined and positive.

Now suppose that \((S(0),I(0))\in \varDelta {\setminus }\varDelta _2\) for a solution (S, I) of \((Sys_d)\). As long as \((S(t),I(t))\in \varDelta {\setminus }\varDelta _2\), we have \(S'(t)\ge \min \{M_f,M_c\}>0\). The boundedness of \(\varDelta {\setminus }\varDelta _2\) implies that the solution necessarily leaves \(\varDelta {\setminus }\varDelta _2\) (through the segment \(S+I=\mu /(\mu +\gamma )\)).\(\square \)

Proposition 8

If there exists \(t_{0}\ge 0\) such that \((S(t_{0}),I(t_{0}))\in \varDelta _2\), then \((S(t),I(t))\in \varDelta _2\) for all \(t\ge t_{0}.\)

Proof

Consider any solution (S, I) of \((Sys_d)\) with \(S(t_0)+I(t_0)\ge \mu /(\mu +\gamma )\). Adding up the equations of \((Sys_d)\), we obtain that

for all \(t>0\).

The solution of the ordinary differential equation

with initial data

is

Then, by the comparison theorem, \(S(t)+I(t)\ge u(t)\ge \mu /(\mu +\gamma )\) for all \(t\ge t_0\), i.e., \((S(t),I(t))\in \varDelta _2\) for all \(t\ge t_{0}.\)\(\square \)

Now we are ready to prove Theorem 4.

Proof of Theorem 4

Assumption \(k\in (0,k_{1})\) implies that

Therefore (C.3) holds, and \(E_{2}^{*}\) is the unique endemic equilibrium for \((Sys_{d})\) by Proposition 1. Inequality (22) is actually equivalent to \(I_2^*>k\), see the proof of Proposition 1. This observation and the fact that \(E_{2}^{*}\) is stable w.r.t. to \((Sys_{c})\) guarantees that it is stable w.r.t. to \((Sys_{d})\) too. We only need to show that if (S, I) is an arbitrary solution of \((Sys_d)\) with initial data in \(X_1\), then \((S(t),I(t))\rightarrow E^*_2 \) as \( t\rightarrow \infty \).

It comes from Propositions 7 and 8 that there exists \(t_0\ge 0\) such that \((S(t),I(t))\in \varDelta _2\) for all \(t\ge t_0\). The rest of the proof comes from two claims.

First we claim that there exists \( t_1\ge t_0 \) such that \( I(t_1)\ge k \). Indeed, suppose for contradiction that \( I(t)< k \) for all \( t \in [t_0,\infty ) \). Then we have

As we are in the \({\mathcal {R}}_{0}>1\) case, \(E^*_1 \) attracts \(\varDelta _1\) for \((Sys_f)\). It follows that \( I(t)\rightarrow I^*_1\) as \(t\rightarrow \infty \). This is a contradiction since condition (22) implies that \( I^*_1> k \).

Next we claim that if \( I(t_1)\ge k \) for some \( t_1\ge t_0 \), then \( I(t)\ge k \) for all \( t\in [t_1,\infty ) \). If this is not true, i.e., I(t) can be smaller than k for some \(t>t_1\), then there exists \(t_2>t_1\) such that \(I(t_2)=k\) and \(I'(t_2)\le 0\). However, as \((S(t_2),I(t_2))\in \varDelta _2\), we have

(Figure 8 also shows this property: If \((S(t_2),I(t_2))\in \varDelta _2\) and \(I(t_2)=k<k_1\), then necessarily \(S(t_2)>S_2^*\).) This means that

independently of the value of \(I(t_2-\tau )\). This contradicts our previous observation that \(I'(t_2)\le 0\). So \( I(t)\ge k \) for all \( t\in [t_1,\infty ) \).

It comes from our last result that solution (S, I) coincides with the subsequent solution of \( (Sys_c) \) on \( [t_1+\tau ,\infty ) \):

As \(E^*_2 \) attracts \(\varDelta _1\) w.r.t. \((Sys_c)\), we conclude that \((S(t),I(t))\rightarrow E^*_2 \) as \( t\rightarrow \infty \). The proof is complete. \(\square \)

9 Discussion

We have considered the dynamical consequences of switching to a control system in an SIR epidemic model, when the switch takes place with some delay after the solution crosses a switching manifold (i.e., when the density of infected individuals reaches a threshold level k).

Our results for (1)–(2) with delay \(\tau >0\) are summarized in Table 1. We have found that the behaviour of the system can be significantly different from switching models without delay. This can be easily seen by comparing Tables 1 and 2, which lists the findings of Xiao et al. [13] for system (1)–(2) in the case \(\tau =0\).

As one can see from Table 1, all solutions in \(\varDelta _1\) converge to an endemic equilibrium if \(\tau =0\) and \(R_0>1\). However, periodic orbits can exist in the delayed case: we constructed periodic solutions for some range of the parameters and for small positive delay (see Case (b)). These are slowly oscillating between the two sides of the switching manifold (meaning that if \(I(t_1)=I(t_2)=k\) for \(t_1<t_2\), then \(t_2-t_1>\tau \)). Numerical observations show that the periodic orbit persists by increasing the delay, and the oscillatory solution tends to approach the two stable equilibria corresponding to the free and the control systems.

On the other hand, our global stability results are delay independent [see Cases (a) and (c)]. Based on some numerical simulations, we conjecture that a stable periodic orbit may coexist with a stable equilibrium for some parameter configurations (when k is slightly smaller than \(I_2^*\) or slightly bigger than \(I_1^*\)), thus local stability of an endemic equilibrium does not always imply its global stability. We have observed this bistable situation for both small and large values of \(\tau \). The investigation of such cases is left for future work.

Figure 9 depicts how the maxima and minima of some solutions (calculated after long time integration) change if parameter k increases. This numerically generated diagram confirms the conjecture that a periodic orbit may coexist with a stable equilibrium for some parameter configurations.

Plot of the maxima and minima of the I-terms after long time integration for several initial data. The bifurcation parameter is k. The other parameters are \( u_*=0.76\), \(\gamma =1.38\), \(\beta =15.8\), \(\mu =1.3\) and \(\tau =1 \). The solution converges to \( E^{*}_{2}\) for small k, then to a periodic orbit as k increases, and then to \( E^{*}_{1}\) for large values of k

It may seem surprising that we do not necessarily obtain information on the dynamics in the non-delayed case by considering the delayed case with small values of \(\tau \). The underlying reason is the following: If \(\tau =0\) in Case (b), then there is a segment within the switching manifold \(I=k\) (the so-called sliding domain) along which the vector field corresponding to the free system points up, while the vector field of the control system points down. When a solution reaches the sliding domain, this incompatility between the free and the control systems can be resolved by a sliding mode control which is forcing the solution to remain on the sliding domain, and the solution may converge to a new equilibrium appearing there. In contrast, the solution curves of the delayed system cross the switching manifold transversally because this system reacts with a positive delay and because both vector fields are transversal to \(I=k\) (except at most one point). Therefore in Case (b) with \(\tau >0\) we observe solutions oscillating between the two sides of the switching manifold but no solutions moving along it.

From our results we can draw some conclusions about the potential intervention strategies. For a large threshold k, the control will eventually be turned off and solutions converge to the endemic equilibrium of the free system, and the control strategy has no effect whatsoever. If \(k<I_1^*\), then the control effort \(u_*\) also plays a role. If the control effort is weak, then \(I_2^*>k\) (see Fig. 1) and we can expect the control to be on for large times. Then the control strategy is reducing the infected population. Interestingly, in the presence of time delay, a strong control effort can induce periodic oscillations, and the peak of the periodic solution may be larger than the endemic level what we could achieve by a weaker control. If we do not want to tolerate high peaks in disease prevalence, we may choose a milder control strategy. Alternatively, we may try to reduce the delay as that leads to smaller oscillations, and then the periodic solution can be kept near the threshold k. Our results suggest that it may be worthwhile to continue research to the directions we initiated here, to have a better understanding of the effect of the interplay of control strategies and delays in implementation on the long term transmission dynamics.

References

Arino, J., McCluskey, C.C.: Effect of a sharp change of the incidence function on the dynamics of a simple disease. J. Biol. Dyn. 4(5), 490–505 (2010)

Korobeinikov, A., Wake, G.C.: Lyapunov functions and global stability for SIR, SIRS, and SIS epidemiological models. Appl. Math. Lett. 15(8), 955–960 (2002)

LeBlanc, V.G.: A degenerate Hopf bifurcation in retarded functional differential equations, and applications to endemic bubbles. J. Nonlinear Sci. 26(1), 1–25 (2016)

Liu, M., Liz, E., Röst, G.: Endemic bubbles generated by delayed behavioral response: global stability and bifurcation switches in an SIS model. SIAM J. Appl. Math. 75(1), 75–91 (2015)

Liu, M., Röst, G., Vas, G.: SIS model on homogeneous networks with threshold type delayed contact reduction. Comput. Math. Appl. 66(9), 1534–1546 (2013)

Liu, X., Stechlinski, P.: Transmission dynamics of a switched multi-city model with transport-related infections. Nonlinear Anal. Real World Appl. 14(1), 264–279 (2013)

Liu, X., Stechlinski, P.: Infectious Disease Modeling, a Hybrid System Approach, vol. 19. Springer, Berlin (2017)

Muqbel, K., Dénes, A., Röst, G.: Optimal temporary vaccination strategies for epidemic outbreaks. In: Mondaini, R.P. (ed.) Trends in Biomathematics: Mathematical Modeling for Health, Harvesting, and Population Dynamics, pp. 299–307. Springer, Cham (2019)

Sieber, J.: Dynamics of delayed relay systems. Nonlinearity 19(11), 2489 (2006)

Smith, H.L., Thieme, H.R.: Dynamical Systems and Population Persistence, vol. 118. American Mathematical Society, Providence (2011)

Wang, W.: Backward bifurcation of an epidemic model with treatment. Math. Biosci. 201(1–2), 58–71 (2006)

Wang, A., Xiao, Y.: Sliding bifurcation and global dynamics of a Filippov epidemic model with vaccination. Int. J. Bifurcat. Chaos 23(08), 1350144 (2013)

Xiao, Y., Xu, X., Tang, S.: Sliding mode control of outbreaks of emerging infectious diseases. Bull. Math. Biol. 74(10), 2403–2422 (2012)

Wang, A., Xiao, Y., Smith, R.: Dynamics of a non-smooth epidemic model with three thresholds. Theor. Biosci. 137, 1–19 (2019)

Wang, A., Xiao, Y., Zhu, H.: Dynamics of a Filippov epidemic model with limited hospital beds. Math. Biosci. Eng. 15(3), 739 (2017)

Zhou, W., Xiao, Y., Heffernan, J.M.: A two-thresholds policy to interrupt transmission of West Nile Virus to birds. J. Theor. Biol. 463, 22–46 (2019)

Acknowledgements

Open access funding provided by University of Szeged (SZTE).

Funding

KM was supported by NKFIH FK 124016. GV was supported by NKFIH K129322 and 20391-3/2018/FEKUSTRAT. GR was supported by EFOP-3.6.1-16-2016-00008 and NKFIH KKP 129877.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Muqbel, K., Vas, G. & Röst, G. Periodic Orbits and Global Stability for a Discontinuous SIR Model with Delayed Control. Qual. Theory Dyn. Syst. 19, 59 (2020). https://doi.org/10.1007/s12346-020-00395-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s12346-020-00395-0