Abstract

In a resource allocation problem, there is a common-pool resource, which has to be divided among agents. Each agent is characterized by a claim on this pool and an individual concave reward function on assigned resources, thus generalizing the model of Grundel et al. (Math Methods Oper Res 78(2):149–169, 2013) with linear reward functions. An assignment of resources is optimal if the total joint reward is maximized. We provide a necessary and sufficient condition for optimality of an assignment, based on bilateral transfers of resources only. Analyzing the associated allocation problem of the maximal total joint reward, we consider corresponding resource allocation games. It is shown that the core and the nucleolus of a resource allocation game are equal to the core and the nucleolus of an associated bankruptcy game.

1 Introduction

In this paper, we analyze a resource allocation model with a common-pool resource in which the sum of the claims of all agents exceeds the total amount of resources. Young (1995) introduced a general framework for the “type” of a claimant: “the type of a claimant is a complete description of the claimant for purposes of the allocation, and determines the extent of a claimant’s entitlement to the good”. In our model, we assume that the claim represents the maximum amount of resources an agent can handle. Therefore, an agent is never assigned more than this claim. Furthermore, we characterize each agent by an individual strictly increasing, continuous, and concave monetary reward function, which allows for monetary compensations among agents, given a certain assignment of resources. This paper generalizes the model in Grundel et al. (2013), where resource allocation problems of this type are considered for agents with linear reward functions. Both models, with linear and concave reward functions, can be viewed as generalizations of the classic bankruptcy model considered in O’Neill (1982). There, the reward functions are not explicitly modeled, but are implicitly assumed to be strictly increasing and the same for each agent. As is common in economic models, allowing for diversity among reward functions and for concavity better incorporates differences in the agents’ evaluations of the common-pool resource.

Our model is applicable to various kinds of common-pool resource problems. For example, consider a common pool of water, which should be distributed among farmers, large-scale horticultural companies, and factories. There is insufficient water to meet the rightful claims of all agents. The possibility of monetarily compensating agents who cede water to others allows agents to interactively reshuffle water supplies to achieve a social optimum. Subsequently, cooperative game theory offers a tool to adequately analyze the resulting joint monetary allocation problem and to determine fair and stable compensations among the agents. Cooperative game theory has been successfully applied to issues in water management before. We refer to Dinar (2007), Ambec and Sprumont (2002), Ambec and Ehlers (2008), Wang (2011), and van den Brink et al. (2012) for specific cooperative aspects in international water sharing problems. For an overview, we refer to Parrachino et al. (2006).

In analyzing resource allocation problems, an assignment of resources is called optimal if the total joint monetary reward is maximized. In the setting with concave reward functions, finding optimal assignments is not straightforward. We do not provide a constructive procedure as in Grundel et al. (2013) for the linear setting, but provide a way of checking the optimality of a certain assignment. It is shown that an assignment is optimal if and only if there does not exist a pair of agents for which a bilateral transfer of resources leads to a higher joint monetary reward. The proof of this characterization is intricate and, interestingly, does not require differentiability of the reward functions. To adequately analyze the corresponding allocation problem of maximal joint monetary rewards, we generalize the resource allocation games as introduced in Grundel et al. (2013) to the setting of concave reward functions. This generalization maintains the idea behind bankruptcy games as introduced by O’Neill (1982) in the sense that, as a consistent benchmark or reference point, the value of a particular coalition of agents reflects the maximum total joint reward that can be derived from the resources not claimed by the agents outside the coalition at hand. Without having to rely on compromise stability as in Grundel et al. (2013), we show that the core and the nucleolus (Schmeidler 1969) of a resource allocation game equal the core and the nucleolus of a corresponding bankruptcy game. The result for the nucleolus relies on Potters and Tijs (1994). As an immediate consequence, the core of a resource allocation game is non-empty. This means that efficient allocations of the maximal joint monetary rewards exist which are stable against coalitional deviations. Moreover, for one such stable allocation, the nucleolus, we provide a closed form expression in the spirit of Aumann and Maschler (1985).

This paper is organized as follows. In Sect. 2, the formal model of resource allocation problems is provided and optimal assignments are characterized. In Sect. 3, we analyze corresponding resource allocation games with special attention to core elements and the nucleolus of these games. Technical proofs are relegated to an Appendix.

2 Resource allocation problems

This section formally introduces resource allocation (RA) problems, and characterizes optimal assignments of resources.

An RA problem considers the assignment of resources among agents who have a claim on a common-pool resource. Let N represent the finite set of agents, \(E\ge 0\) the total amount (estate) of resources which has to be divided among the agents, and \(d\in (0,\infty )^N\) a vector of demands, where for \(i\in N\), \(d_i\) represents agent i’s claim on the estate. It is assumed that \(\sum _{j\in N}d_j\ge E\). Furthermore, for each agent \(i\in N\), there exists a reward function \(r_i\) on \([0,d_i]\) describing the monetary reward to agent i: for every \(z\in [0,d_i]\), \(r_i(z)\) denotes the monetary reward for agent i if the agent is assigned z units of the resource. In this paper, it is assumed that for all \(i\in N\), \(r_i\) is a strictly increasing, continuous, and concave reward function on \([0,d_i]\) with \(r_i(0)=0\). An RA problem will be summarized by (N, E, d, r), with \(r=\{r_i\}_{i\in N}\). The class of all RA problems with set of agents N is denoted by \(RA^{N}\).

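Let F(N, E, d, r) denote the set of assignments of resources given by

\[ F(N,E,d,r)=\Big\{ x\in \mathbb {R}^N \;\Big|\; \sum _{j\in N}x_j=E,\ 0\le x_i\le d_i \text{ for all } i\in N\Big\} . \]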

Therefore, in an assignment, we assume that the complete estate E is assigned among the agents and that no agent can get more than its demand.

Throughout this article, assignments of resources which maximize the total joint monetary reward are considered. The remainder of this section is dedicated to characterizing these optimal assignments.

Let \((N,E,d,r)\in RA^N\). The maximum total joint monetary reward \(v(N,E,d,r)\) is determined by

\[ v(N,E,d,r)=\max _{x\in F(N,E,d,r)}\sum _{j\in N}r_j(x_j). \]

Note that this maximum exists due to the fact that \(\sum _{j\in N}r_j\) is continuous on a compact domain. Furthermore, Lemma 2 in the Appendix shows that v(N, E, d, r) is concave in the second coordinate E. The set X(N, E, d, r) of optimal assignments is given by

\[ X(N,E,d,r)=\Big\{ x\in F(N,E,d,r) \;\Big|\; \sum _{j\in N}r_j(x_j)=v(N,E,d,r)\Big\} . \]

The next theorem characterizes optimal assignments. It tells us that an assignment is optimal if and only if there is no profitable bilateral transfer of resources. For the special case of linear reward functions, a constructive procedure to find optimal assignments was provided by Grundel et al. (2013). Here, the optimality conditions are more complex, but Theorem 1 offers the possibility to check optimality on the basis of bilateral transfers of resources only. This is illustrated in Example 1.

Theorem 1

Let \((N,E,d,r)\in RA^N\) and \(x\in F(N,E,d,r)\). Then, \(x\in X(N,E,d,r)\) if and only if for all \(i\in N\) with \(x_i<d_i\) and for all \(k\in N{\setminus } \{i\}\) with \(x_k>0\), there does not exist an \(\epsilon \in (0,\min \{d_i-x_i,x_k\}]\), such that \(r_i(x_i+\epsilon )+r_k(x_k-\epsilon )> r_i(x_i)+r_k(x_k)\).

Proof

We first prove the “only if” part. Let \(x\in X(N,E,d,r)\). Suppose that there exist an \(i\in N\) with \(x_i<d_i\), a \(k\in N{\setminus } \{i\}\) with \(x_k>0\), and an \(\epsilon \in (0,\min \{d_i-x_i,x_k\}]\), such that \(r_i(x_i+\epsilon )+r_k(x_k-\epsilon )> r_i(x_i)+r_k(x_k)\). Now consider \(x'\) such that \(x'_j=x_j\) for all \(j\in N{\setminus } \{i,k\}\), \(x'_i=x_i+\epsilon \), and \(x'_k=x_k-\epsilon \). Note that \(x'\in F(N,E,d,r)\) by construction of \(x'\) and definition of \(\epsilon \). Then

\[ \sum _{j\in N}r_j(x'_j)=\sum _{j\in N{\setminus } \{i,k\}}r_j(x_j)+r_i(x_i+\epsilon )+r_k(x_k-\epsilon )>\sum _{j\in N}r_j(x_j). \]

This establishes a contradiction with the optimality of x.

For the “if” part, let \(x\in F(N,E,d,r)\) and \(x\notin X(N,E,d,r)\). We will prove that there exists an \(i\in N\) with \(x_i<d_i\), a \(k\in N{\setminus } \{i\}\) with \(x_k>0\), and an \(\epsilon \in (0,\min \{d_i-x_i,x_k\}]\), such that \(r_i(x_i+\epsilon )+r_k(x_k-\epsilon )>r_i(x_i)+r_k(x_k)\). Let \(x^N\in X(N,E,d,r)\). Clearly, both sets \(A_1=\{i\in N|x^N_i>x_i\}\) and \(A_2= \{k\in N|x^N_k<x_k\}\) are non-empty. Note that for all \(i\in A_1\), it holds that \(x^N_i>0\) and \(x_i<d_i\). Vice versa, for all \(k\in A_2\) it holds that \(x_k>0\) and \(x^N_k<d_k\). The reward functions of agents \(i\in A_1\) and \(k\in A_2\) are outlined in Fig. 1.

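By concavity of r it holds that, for all \(i\in A_1\) and \(\epsilon \in (0,x^N_i-x_i]\):

\[ r_i(x_i+\epsilon )-r_i(x_i)\ge r_i(x^N_i)-r_i(x^N_i-\epsilon ), \tag{1} \]

and for all \(k\in A_2\) and \(\epsilon \in (0,x_k-x^N_k]\):

\[ r_k(x^N_k+\epsilon )-r_k(x^N_k)\ge r_k(x_k)-r_k(x_k-\epsilon ). \tag{2} \]

From the fact that \(x^N\in X(N,E,d,r)\), it follows from the “only if” part that for all \(i\in A_1, k\in A_2\) and \(\epsilon \in (0,\min \{x^N_i,d_k-x^N_k\}]\):

\[ r_i(x^N_i)-r_i(x^N_i-\epsilon )\ge r_k(x^N_k+\epsilon )-r_k(x^N_k). \tag{3} \]

Since \((0,\min \{x^N_i-x_i,x_k-x^N_k\}]\subset (0,\min \{x^N_i,d_k-x^N_k\}]\), subsequently applying (1), (3), and (2) implies that, for all \(i\in A_1\) and \(k\in A_2\) and for all \(\epsilon \in (0,\min \{x^N_i-x_i,x_k-x^N_k\}]\):

\[ r_i(x_i+\epsilon )-r_i(x_i)\ge r_i(x^N_i)-r_i(x^N_i-\epsilon )\ge r_k(x^N_k+\epsilon )-r_k(x^N_k)\ge r_k(x_k)-r_k(x_k-\epsilon ). \]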

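Suppose that for all \(i\in A_1, k\in A_2\) and \(\epsilon \in (0,\min \{x^N_i-x_i,x_k-x^N_k\}]\), it holds that

\[ r_i(x_i+\epsilon )+r_k(x_k-\epsilon )\le r_i(x_i)+r_k(x_k). \]

Combined with (1)–(3), this forces each of the inequalities (1), (2), and (3) to hold with equality.

Let \(i\in A_1, k\in A_2\) and \(\epsilon \in (0,\min \{x^N_i-x_i,x_k-x^N_k\}]\). Since inequality (1) is an equality, we now have

\[ r_i(x_i+\epsilon )-r_i(x_i)=r_i(x^N_i)-r_i(x^N_i-\epsilon ). \]

By the fact that \(r_i\) is a strictly increasing, continuous, and concave function and \(\epsilon >0\), this tells us that \(r_i\) is linear on \([x_i,x^N_i]\). This is outlined in Fig. 2.

Similarly, we have an equality in (2), which implies that

\[ r_k(x^N_k+\epsilon )-r_k(x^N_k)=r_k(x_k)-r_k(x_k-\epsilon ), \]

which tells us that \(r_k\) is linear on \([x^N_k,x_k]\). Finally, equality in (3) implies that

\[ r_i(x^N_i)-r_i(x^N_i-\epsilon )=r_k(x^N_k+\epsilon )-r_k(x^N_k). \]

By linearity of \(r_i\) on \([x_i,x^N_i]\) and \(r_k\) on \([x^N_k,x_k]\) and the fact that the difference quotient of \(r_i\) on \([x_i,x^N_i]\) equals the difference quotient of \(r_k\) on \([x^N_k,x_k]\), it holds that

\[ \frac{r_i(x^N_i)-r_i(x_i)}{x^N_i-x_i}=\frac{r_k(x_k)-r_k(x^N_k)}{x_k-x^N_k}. \]

Since this holds for all pairs of agents \(i\in A_1\) and \(k\in A_2\), it follows that there exists a constant \(c>0\) such that, for all \(i\in A_1\) and \(k\in A_2\),

\[ r_i(x^N_i)-r_i(x_i)=c\,(x^N_i-x_i)\quad \text{and}\quad r_k(x_k)-r_k(x^N_k)=c\,(x_k-x^N_k). \]

As \(x,x^N\in F(N,E,d,r)\) we have \(\sum _{j\in N}x^N_j=\sum _{j\in N}x_j=E\) and, consequently, that \(\sum _{j\in A_1} (x^N_j-x_j)=\sum _{j\in A_2} (x_j-x^N_j)\), which implies

\[ \sum _{j\in A_1}\big (r_j(x^N_j)-r_j(x_j)\big )=\sum _{j\in A_2}\big (r_j(x_j)-r_j(x^N_j)\big ). \tag{5} \]

Then

\[ \begin{aligned} \sum _{j\in N}r_j(x^N_j)&=\sum _{j\in A_1}r_j(x^N_j)+\sum _{j\in A_2}r_j(x^N_j)+\sum _{j\in N{\setminus } (A_1\cup A_2)}r_j(x^N_j)\\ &=\sum _{j\in A_1}r_j(x^N_j)+\sum _{j\in A_2}r_j(x^N_j)+\sum _{j\in N{\setminus } (A_1\cup A_2)}r_j(x_j)\\ &=\sum _{j\in A_1}r_j(x_j)+\sum _{j\in A_2}r_j(x_j)+\sum _{j\in N{\setminus } (A_1\cup A_2)}r_j(x_j)=\sum _{j\in N}r_j(x_j). \end{aligned} \]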

The second equality holds by the fact that for all \(i\in N{\setminus } (A_1\cup A_2), x_i=x^N_i\), the third equality follows from (5).

This implies that \(x\in X(N,E,d,r)\) which establishes a contradiction. Hence, there exists at least one pair of agents \(i\in A_1, k\in A_2\) and \(\epsilon \in (0,\min \{x^N_i-x_i, x_k-x^N_k\}]\), such that

Since \((0,\min \{x^N_i-x_i,x_k-x^N_k\}]\subset (0,\min \{d_i-x_i,x_k\}]\), this finishes the proof. \(\quad \square \)
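The condition of Theorem 1 can be checked numerically. The Python sketch below samples transfer sizes \(\epsilon \) on a grid and reports the first sampled transfer from agent k to agent i that raises the joint reward. Everything in it is an illustrative assumption rather than part of the model: the function name `profitable_transfer`, the parameters `n_eps` and `tol`, and the reward functions \(r_1(z)=2\sqrt{z}\), \(r_2(z)=\sqrt{z}\) with demands (10, 10) and estate 5. Because the gain of a transfer is a concave function of \(\epsilon \) that vanishes at \(\epsilon =0\), a profitable transfer of size \(\epsilon _0\) remains profitable for every smaller size, so a grid that includes small steps is a reasonable, though not exact, test.

```python
import math


def profitable_transfer(x, demands, rewards, n_eps=200, tol=1e-9):
    """Search a grid of transfer sizes for a profitable bilateral transfer.

    Returns (i, k, eps) if moving eps units from agent k to agent i raises
    r_i + r_k by more than tol, and None if no sampled transfer does.
    """
    n = len(x)
    for i in range(n):          # receiving agent, needs x_i < d_i
        for k in range(n):      # giving agent, needs x_k > 0
            if i == k or x[i] >= demands[i] or x[k] <= 0:
                continue
            eps_max = min(demands[i] - x[i], x[k])
            for m in range(1, n_eps + 1):
                # Clamp to eps_max to guard against floating-point overshoot.
                eps = min(eps_max, eps_max * m / n_eps)
                gain = (rewards[i](x[i] + eps) + rewards[k](x[k] - eps)
                        - rewards[i](x[i]) - rewards[k](x[k]))
                if gain > tol:
                    return i, k, eps
    return None


# Hypothetical two-agent problem (not taken from the paper's examples).
rewards = [lambda z: 2.0 * math.sqrt(z), lambda z: math.sqrt(z)]
demands = [10.0, 10.0]

found_at_optimum = profitable_transfer([4.0, 1.0], demands, rewards)
found_at_equal_split = profitable_transfer([2.5, 2.5], demands, rewards)
print(found_at_optimum, found_at_equal_split)
```

For these assumed rewards the marginal rewards coincide at (4, 1), so no sampled transfer is profitable there, while the equal split (2.5, 2.5) admits a profitable transfer from the second agent to the first.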

Example 1

Consider an RA problem \((N,E,d,r)\in RA^N\) with \(N= \{1,2,3,4\}\), estate \(E=7\), and vector of demands \(d=(1,3,4,1)\). The reward functions of the agents are given by

and are drawn in Fig. 3.

An optimal assignment equals \(x=\left( 1,2\frac{1}{2},3\frac{1}{2},0\right) \) with \(r(x)=\left( 9,8\frac{3}{4},5\frac{1}{2},0\right) \), and total joint reward \(23\frac{1}{4}\).

We can use Theorem 1 to check optimality of this assignment. For each pair of agents (i, k), it should hold for all \(\epsilon \in (0,\min \{d_i-x_i,x_k\}]\) that \(r_i(x_i+\epsilon )+ r_k(x_k-\epsilon )\le r_i(x_i)+r_k(x_k)\). From \(\epsilon >0\), \(x_1=d_1\), and \(x_4=0\) it follows, respectively, that \(i\not = 1\) and \(k\not =4\). Theorem 1 with \(k=1\) prescribes that for all \(i\in \{2,3,4\}\) and all \(\epsilon \in (0,\min \{d_i-x_i,1\}]\), it should hold that \(r_i(x_i+\epsilon )+r_1(1-\epsilon )\le r_i(x_i)+r_1(1)\) or equivalently, that

Inequality (6) holds, since we know that for all \(i\in \{2,3,4\}\) and \(\epsilon \in (0,d_i-x_i]\):

and for all \(\epsilon \in (0,1]\)

Theorem 1 with \(k=2\) and \(i=3\) prescribes that for all \(\epsilon \in (0,\frac{1}{2}]\), it should hold that \(r_3\left( 3\frac{1}{2}+\epsilon \right) +r_2\left( 2\frac{1}{2}-\epsilon \right) \le r_3\left( 3\frac{1}{2}\right) +r_2\left( 2\frac{1}{2}\right) \) or equivalently, that

This inequality is satisfied by concavity of \(r_2\) and \(r_3\) and the fact that \(r_2'(2\frac{1}{2})= r_3'(3\frac{1}{2})=1\). Hence

With \(k=2\) and \(i=4\) Theorem 1 prescribes that for all \(\epsilon \in (0,1]\), it should hold that

Inequality (8) holds, since for all \(\epsilon \in (0,1]\)

and for all \(\epsilon \in \left( 0,2\frac{1}{2}\right] \)

The check for optimality of x with \(k=3\) and \(i=2\) is analogous to \(k=2\) and \(i=3\); for \(k=3\) and \(i=4\), we use an argument similar to \(k=2\) and \(i=4\).

Now, consider a subgroup \(S\subseteq N\). For a resource allocation problem \((N,E,d,r)\in RA^N\), the maximum total joint reward of a subgroup \(S\subseteq N\) with \(E'\le E\) and \(E'\le \sum _{j\in S}d_j\) equals \(v(S,E',d|_S,r|_S)\) (for the notation \(d|_S\) and \(r|_S\), see Notes). The next proposition shows that total maximization implies partial maximization.

Proposition 1

Let \((N,E,d,r)\in RA^N\) and \(x^N\in X(N,E,d,r)\). Then, for all \(S\subseteq N\), it holds that \((x^N_i)_{i\in S}\in X(S,\sum _{j\in S}x^N_j,d|_S,r|_S)\).

Proof

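Let \(S \subseteq N\). Since \(x^N\in F(N,E,d,r)\), it holds that \((x_i^N)_{i\in S}\in F(S,\sum _{j\in S}x^N_j,d|_S, r|_S)\). Suppose there exists an \(x^S\in F(S,\sum _{j\in S}x^N_j,d|_S,r|_S)\), such that \(\sum _{j\in S}r_j(x^S_j)>\sum _{j\in S}r_j(x^N_j)\). Let \(x\in \mathbb {R}^N\) be such that for all \(i\in S: x_i=x^S_i\) and for all \(i\in N{\setminus } S:x_i=x^N_i\). By the fact that for all \(i\in N\), it holds that \(x_i\in [0,d_i]\) and

\[ \sum _{j\in N}x_j=\sum _{j\in S}x^S_j+\sum _{j\in N{\setminus } S}x^N_j=\sum _{j\in S}x^N_j+\sum _{j\in N{\setminus } S}x^N_j=E, \]

it follows that \(x\in F(N,E,d,r)\). Now

\[ \sum _{j\in N}r_j(x_j)=\sum _{j\in S}r_j(x^S_j)+\sum _{j\in N{\setminus } S}r_j(x^N_j)>\sum _{j\in S}r_j(x^N_j)+\sum _{j\in N{\setminus } S}r_j(x^N_j)=\sum _{j\in N}r_j(x^N_j), \]

which establishes a contradiction with the fact that \(x^N\) is optimal. \(\square \)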
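As a numerical companion to this section, the following Python sketch approximates an optimal assignment on a grid and then illustrates Proposition 1 on a subgroup. The technique is a greedy marginal allocation: the estate is split into units of width `step`, and each unit goes to the agent with the largest current marginal reward, which is optimal on the grid because the rewards are concave. This is a swapped-in numerical device, not a procedure from the paper, and the function name `greedy_optimum`, the grid width, and the reward functions \(2\sqrt{z}\), \(\sqrt{z}\), \(\sqrt{z}\) with demands (10, 10, 10) and estate 6 are all assumptions made for illustration.

```python
import heapq
import math


def greedy_optimum(rewards, demands, estate, step=0.01):
    """Approximate an optimal assignment by handing out units of size `step`.

    Each unit goes to the agent whose next unit yields the largest reward
    increase; demands cap how many units an agent can receive.
    """
    caps = [int(round(d / step)) for d in demands]
    units = int(round(estate / step))
    counts = [0] * len(rewards)
    heap = []  # entries are (-marginal gain of next unit, agent index)
    for i, r in enumerate(rewards):
        if caps[i] > 0:
            heapq.heappush(heap, (-(r(step) - r(0.0)), i))
    for _ in range(units):
        _, i = heapq.heappop(heap)
        counts[i] += 1
        if counts[i] < caps[i]:
            z = counts[i] * step
            heapq.heappush(heap, (-(rewards[i](z + step) - rewards[i](z)), i))
    x = [c * step for c in counts]
    return x, sum(r(z) for r, z in zip(rewards, x))


# Hypothetical three-agent problem (not one of the paper's examples).
rewards = [lambda z: 2.0 * math.sqrt(z), math.sqrt, math.sqrt]
demands = [10.0, 10.0, 10.0]

x_N, total_N = greedy_optimum(rewards, demands, estate=6.0)

# Proposition 1: restricted to S = {first two agents}, the same amounts
# should be optimal for S with estate x_N[0] + x_N[1].
x_S, total_S = greedy_optimum(rewards[:2], demands[:2], x_N[0] + x_N[1])
reward_of_S_in_x_N = rewards[0](x_N[0]) + rewards[1](x_N[1])
print(x_N, total_N, x_S, total_S, reward_of_S_in_x_N)
```

Under these assumptions the grid optimum is the assignment (4, 1, 1) with total reward 6, and re-solving the subproblem for the first two agents returns the same reward that they obtain in the grand assignment, in line with Proposition 1.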

3 Resource allocation games

In this section, we associate with each RA problem a cooperative resource allocation (RA) game. A transferable utility (TU) game is an ordered pair (N, v), where N is the finite set of agents, and v is the characteristic function on \(2^N\), the set of all subsets of N. The function v assigns to every coalition \(S\in 2^N\) a real number v(S) with \(v(\emptyset )=0\). Here, v(S) is called the worth or value of coalition S, and it is interpreted as the maximal total joint reward for coalition S when the players in the coalition cooperate. The values \(v(S), S\in 2^N\), serve as reference points on the basis of which allocations of v(N) are considered to be fair or stable. The set of all TU games with set of agents N is denoted by \(TU^N\). Where no confusion arises, we write v rather than (N, v).

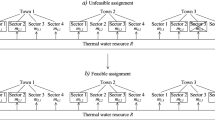

Consider an RA problem (N, E, d, r). We assume that a coalition S can only use the amount of resources D(S) that remains after all agents outside S obtain resources up to their demand \(d\in \mathbb {R}^N_+\). Let \((S,D(S),d|_S,r|_S)\in RA^S\) describe the associated resource allocation problem for S, where

\[ D(S)=\max \Big\{ 0,\,E-\sum _{j\in N{\setminus } S}d_j\Big\} . \]

Note that \(D(N)=E\). By the fact that \(D(S)\le \sum _{j\in S}d_j\), it follows that \((S,D(S),d|_S,r|_S)\) again is an RA problem.
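The amounts D(S) are easy to tabulate. The Python sketch below reads D(S) as \(\max \{0,E-\sum _{j\in N{\setminus } S}d_j\}\), which is how the verbal description above translates and which agrees with the D(S) rows reported in Example 2. It uses the data of Example 1 (estate 7, demands (1, 3, 4, 1)); the names `E`, `d`, and `D` are chosen here for illustration.

```python
from itertools import combinations

# Data of Example 1: estate and demands of agents 1..4.
E = 7
d = {1: 1, 2: 3, 3: 4, 4: 1}


def D(S):
    """Resources left for coalition S after agents outside S take their full demand."""
    claimed_outside = sum(dj for j, dj in d.items() if j not in S)
    return max(0, E - claimed_outside)


# List D(S) for every non-empty coalition, ordered by size.
for size in range(1, len(d) + 1):
    for S in combinations(sorted(d), size):
        print(S, D(S))
```

In particular, `D((1, 2, 3, 4))` returns the estate itself, matching \(D(N)=E\).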

Corollary 1

Let \((N,E,d,r)\in RA^N\) and let \(S\subseteq N\). Then, \((S,D(S),d|_S, r|_S)\in RA^S\).

In the RA game \(v^R\) associated with an RA problem (N, E, d, r), the worth of a coalition \(S\in 2^N\) is defined as

\[ v^R(S)=v(S,D(S),d|_S,r|_S). \]

Let \(x^S\in X(S,D(S),d|_S,r|_S)\) be an optimal assignment of resources to agents in S. Clearly, \(v^R(S)\) equals the total reward of agents in S associated with \(x^S\). For simplicity, we write v(S, D(S)), rather than \(v(S,D(S),d|_S,r|_S)\), F(S, D(S)), rather than \(F(S,D(S),d|_S,r|_S)\), and X(S, D(S)), rather than \(X(S,D(S),d|_S, r|_S)\).

Notice that the definition of an RA game generalizes the one provided for linear reward functions in Grundel et al. (2013). The coalitional values \(v^R(S), S \in 2^N\), serve as a natural benchmark for fairly allocating \(v^R(N)\) among the players.

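A game \(v\in TU^N\) is called balanced if the core C(v) of the game is non-empty. The core of a game consists of those allocations of v(N), such that no coalition has an incentive to split off from the grand coalition, that is

\[ C(v)=\Big\{ x\in \mathbb {R}^N \;\Big|\; \sum _{j\in N}x_j=v(N),\ \sum _{j\in S}x_j\ge v(S) \text{ for all } S\in 2^N\Big\} . \]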

For \(v \in TU^N\) and \(T \subseteq N, T \ne \emptyset \), the subgame \(v_{|T} \in TU^T\) is defined by

\[ v_{|T}(S)=v(S) \]

for all \( S \subseteq T.\) Clearly, \(v_{|N} = v\). A game \(v \in TU^N\) is called totally balanced if every subgame of v has a non-empty core.

Theorem 2

Let \((N,E,d,r)\in RA^N\) with corresponding RA game \(v^R\in TU^N\), and choose \(x^N\in X(N,E)\). Let \(y^N=(r_i(x^N_i))_{i\in N}\). Then, \(y^N\in C(v^R)\).

Proof

First, note that \(\sum _{j \in N}y_j^N = v^R(N)\) by definition. Second, let \(S\subset N\). Then

\[ \sum _{j\in S}y^N_j=\sum _{j\in S}r_j(x^N_j)=v\Big (S,\sum _{j\in S}x^N_j\Big )\ge v(S,D(S))=v^R(S). \]

The second equality follows from Proposition 1. The inequality follows from the fact that \(r_j(z)\) is increasing for all \(j\in S\) and \(\sum _{j\in S}x_j^N\ge D(S)\). This can be seen as follows.

\[ \sum _{j\in S}x^N_j=E-\sum _{j\in N{\setminus } S}x^N_j\ge E-\sum _{j\in N{\setminus } S}d_j, \]

because \(x_i^N\le d_i\) for all \(i\in N\). Since, obviously, \(x^N_i\ge 0\) for all \(i\in N\), also \(\sum _{j\in S}x^N_j\ge 0\), and consequently, \(\sum _{j\in S}x_j^N\ge D(S)\). \(\square \)

By Theorem 2, it follows that RA games are balanced. Furthermore, by Corollary 1, it follows that every RA game is totally balanced.

Corollary 2

Every RA game is totally balanced.

The next lemma and example show that RA games need not be concave in general, but that specific concavity conditions are satisfied. The proof is deferred to the Appendix.

Lemma 1

Let \((N,E,d,r)\in RA^N\) with corresponding RA game \(v^R\in TU^N\). Let \(S,T,U\in 2^N\) be such that \(S\subseteq T \subseteq N{\setminus } U\), \(U\not = \emptyset \), and \(v^R(S)>0\). Then

\[ v^R(S\cup U)-v^R(S)\ge v^R(T\cup U)-v^R(T). \tag{9} \]

Example 2

Reconsider the RA problem of Example 1. The corresponding values of D(S), X(S, D(S)) and \(v^R(S)\) are given in the table below.

| \(S\) | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| \(D(S)\) | 0 | 1 | 2 | 0 |
| \(x^S\in X(S,D(S))\) | (0) | (1) | (2) | (0) |
| \(v^R(S)\) | 0 | 5 | 4 | 0 |

| \(S\) | 1, 2 | 1, 3 | 1, 4 | 2, 3 | 2, 4 | 3, 4 |
|---|---|---|---|---|---|---|
| \(D(S)\) | 2 | 3 | 0 | 5 | 2 | 3 |
| \(x^S\in X(S,D(S))\) | (1, 1) | (1, 2) | (0, 0) | \((2\frac{1}{2},2\frac{1}{2})\) | (2, 0) | (3, 0) |
| \(v^R(S)\) | 14 | 13 | 0 | \(13\frac{1}{4}\) | 8 | 5 |

| \(S\) | 1, 2, 3 | 1, 2, 4 | 1, 3, 4 | 2, 3, 4 | N |
|---|---|---|---|---|---|
| \(D(S)\) | 6 | 3 | 4 | 6 | 7 |
| \(x^S\in X(S,D(S))\) | \((1,2\frac{1}{2},2\frac{1}{2})\) | (1, 2, 0) | (1, 3, 0) | \((2\frac{1}{2},3\frac{1}{2},0)\) | \((1,2\frac{1}{2},3\frac{1}{2},0)\) |
| \(v^R(S)\) | \(22\frac{1}{4}\) | 17 | 14 | \(14\frac{1}{4}\) | \(23\frac{1}{4}\) |

Lemma 1 tells us that, e.g., \(v^R(\{2,4\})-v^R(\{2\})\ge v^R(N)-v^R(\{1,2,3\})\). From \(v^R(\{1,4\})-v^R(\{4\})< v^R(N)-v^R(\{2,3,4\})\), it follows that if \(v^R(S)=0\), inequality (9) may be violated.
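The numbers in the tables of Example 2 can be used to confirm these statements mechanically. The Python sketch below stores the reported coalition values as exact fractions, checks that the reward vector \(y^N=(9,8\frac{3}{4},5\frac{1}{2},0)\) of the optimal assignment from Example 1 lies in the core (Theorem 2), and evaluates the two inequalities just discussed. The helper name `in_core` and the dictionary layout are illustrative choices.

```python
from fractions import Fraction as Fr

# Coalition values v^R(S), copied from the tables of Example 2.
v = {
    (1,): Fr(0), (2,): Fr(5), (3,): Fr(4), (4,): Fr(0),
    (1, 2): Fr(14), (1, 3): Fr(13), (1, 4): Fr(0),
    (2, 3): Fr(53, 4), (2, 4): Fr(8), (3, 4): Fr(5),
    (1, 2, 3): Fr(89, 4), (1, 2, 4): Fr(17), (1, 3, 4): Fr(14),
    (2, 3, 4): Fr(57, 4), (1, 2, 3, 4): Fr(93, 4),
}
N = (1, 2, 3, 4)


def in_core(y):
    """Efficiency plus stability against every coalition listed in v."""
    efficient = sum(y[j] for j in N) == v[N]
    stable = all(sum(y[j] for j in S) >= worth for S, worth in v.items())
    return efficient and stable


# Rewards of the optimal assignment (1, 2 1/2, 3 1/2, 0) from Example 1.
y = {1: Fr(9), 2: Fr(35, 4), 3: Fr(11, 2), 4: Fr(0)}
core_ok = in_core(y)

# Instance of Lemma 1 with S = {2}, U = {4}, T = {1, 2, 3}.
lemma1_instance = v[(2, 4)] - v[(2,)] >= v[N] - v[(1, 2, 3)]
# With S = {4} of worth zero, U = {1}, T = {2, 3, 4}, the inequality fails.
zero_worth_violation = v[(1, 4)] - v[(4,)] < v[N] - v[(2, 3, 4)]
print(core_ok, lemma1_instance, zero_worth_violation)  # prints: True True True
```

The core constraint for coalition {2, 3, 4} holds with equality for this allocation, so \(y^N\) sits on the boundary of the core.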

Now, we derive an explicit expression for the nucleolus (cf. Schmeidler 1969) of RA games. For this, we use some properties of bankruptcy problems and associated bankruptcy games. A bankruptcy problem is a triple (N, B, c), where N represents a finite set of agents, \(B\ge 0\) is the estate which has to be divided among the agents, and \(c\in [0,\infty )^N\) is a vector of claims, where for \(i\in N\), \(c_i\) represents agent i’s claim on the estate, such that \(\sum _{j\in N}c_j\ge B\). For the associated bankruptcy (BR) game \(v_{B,c}\), the value of a coalition S is determined by the amount of B that is not claimed by agents in \(N{\setminus } S\). Hence, for all \(S\in 2^N\)

\[ v_{B,c}(S)=\max \Big\{ 0,\,B-\sum _{j\in N{\setminus } S}c_j\Big\} . \]

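Recall (cf. Aumann and Maschler 1985) that the nucleolus \(n(v_{E,d})\) of a bankruptcy game \(v_{E,d}\in TU^N\) can be computed as follows:

\[ n(v_{E,d})={\left\{ \begin{array}{ll} CEA\left( N,E,\frac{1}{2}d\right) &{} \text{if } \sum _{j\in N}d_j\ge 2E,\\ d-CEA\left( N,\sum _{j\in N}d_j-E,\frac{1}{2}d\right) &{} \text{if } \sum _{j\in N}d_j< 2E, \end{array}\right. } \]

where CEA denotes the constrained equal awards rule, given for all \(i\in N\) by

\[ CEA_i(N,\tilde{E},\tilde{d})=\min \{\lambda ,\tilde{d_i}\} \]

with \(\lambda \) such that \(\sum _{j\in N}\min \{\lambda ,\tilde{d_j}\}=\tilde{E}\).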

It turns out that the nucleolus of an RA game coincides with the nucleolus of an associated bankruptcy game. As a consequence, one can derive a closed form expression for the nucleolus of an RA game based on CEA. The proof of Theorem 3 uses the important result of Potters and Tijs (1994) that the nucleoli of two games are equal if those games have the same core and one of the games is convex.

Theorem 3

Let \((N,E,d,r)\in RA^N\) and let \(v^R\) be the corresponding RA game. Then

\[ n(v^R)=n(v_{B,c}) \]

with \(B=v^R(N)\) and \(c=\left( v^R(N)-v^R(N\backslash \{i\})\right) _{i\in N}\).

Proof

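Note that (N, B, c) is a BR problem, since \(v^R(N)\ge 0\) and, because \(C(v^R)\not = \emptyset \), for any \(y\in C(v^R)\) and all \(i\in N\),

\[ y_i=v^R(N)-\sum _{j\in N{\setminus } \{i\}}y_j\le v^R(N)-v^R(N{\setminus } \{i\})=c_i, \]

and consequently, \(\sum _{j\in N}c_j\ge \sum _{j\in N}y_j=v^R(N)=B\).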

Next, we show that \(C(v^R)=C(v_{B,c})\). Clearly, \(v^R(N)=B=v_{B,c}(N)\). First, we will prove that \(C(v_{B,c})\subseteq C(v^R)\) by showing that for all \(S\in 2^N, v^R(S)\le v_{B,c}(S)\). Let \(S\in 2^N\) and let \(N{\setminus } S=\{i_1,\dots ,i_{|N{\setminus } S|}\}\). Without loss of generality, we can assume that \(v^R(S)>0\). This implies that \(D(S)>0\), and consequently, \(D(N{\setminus } \{i_1\})>0, D(N{\setminus } \{i_1,i_2\})>0\), ..., \(D(N{\setminus } \{i_1,\dots , i_{|N{\setminus } S|}\})>0\). Furthermore, \(v^R(N{\setminus } \{i_1\})>0\), \(v^R(N{\setminus } \{i_1,i_2\})>0\), ..., \(v^R(N{\setminus } \{i_1,\dots ,i_{|N{\setminus } S|}\})>0\). For all \(k\in \{0,\dots ,|N{\setminus } S|-1\}\), we have by Lemma 1 that

Since

and

we have that

Using \(v^R(S)>0\), this implies that

Second, to prove that \(C(v^R)\subseteq C(v_{B,c})\), let \(y\in C(v^R)\) and \(S\in 2^N\). Since

and using (10)

we have that \(\sum _{j\in S}y_j\ge v_{B,c}(S)\). It follows that \(y\in C(v_{B,c})\).

Potters and Tijs (1994) proved that for any two games \(v,w\in TU^N\) with \(C(v)=C(w)\) and w convex, we have \(n(v)=n(w)\). From the fact that \(v_{B,c}\) is a BR game, that BR games are convex (Curiel et al. 1987), and \(C(v^R)=C(v_{B,c})\) we conclude that

\[ n(v^R)=n(v_{B,c}). \]

\(\square \)

Example 3

For the RA game of Example 2, \(v^R(N)=23\frac{1}{4}\) and \((v^R(N)-v^R(N{\setminus } \{i\}))_{i\in N}= \left( 9,9\frac{1}{4},6\frac{1}{4},1\right) \). Theorem 3 implies that \(n(v^R)= \left( 9,9\frac{1}{4},6\frac{1}{4},1\right) - CEA\left( N,2\frac{1}{4},\left( 4\frac{1}{2},4\frac{5}{8} ,3\frac{1}{8},\frac{1}{2}\right) \right) = \left( 8\frac{5}{12}, 8\frac{2}{3}, 5\frac{2}{3}, \frac{1}{2}\right) \).
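The computation in Example 3 can be reproduced with exact arithmetic. The Python sketch below implements the constrained equal awards rule by the usual ascending-claims procedure and then applies the two-case formula of Aumann and Maschler (1985) to the bankruptcy problem with \(B=23\frac{1}{4}\) and \(c=(9,9\frac{1}{4},6\frac{1}{4},1)\). The function names `cea` and `bankruptcy_nucleolus` are illustrative, and the ascending-claims procedure is one standard way to find \(\lambda \), chosen here for brevity.

```python
from fractions import Fraction as Fr


def cea(estate, claims):
    """Constrained equal awards: agent j receives min(lambda, claims[j]).

    Agents are served in order of increasing claim; each gets an equal
    share of what is left unless the own claim is smaller.
    """
    awards = {}
    remaining = estate
    order = sorted(claims, key=claims.get)
    for pos, j in enumerate(order):
        equal_share = remaining / (len(order) - pos)
        awards[j] = min(claims[j], equal_share)
        remaining -= awards[j]
    return awards


def bankruptcy_nucleolus(estate, claims):
    """Nucleolus of the bankruptcy game via the Aumann-Maschler formula."""
    half = {j: c / 2 for j, c in claims.items()}
    total = sum(claims.values())
    if total >= 2 * estate:
        return cea(estate, half)
    losses = cea(total - estate, half)
    return {j: claims[j] - losses[j] for j in claims}


# Data of Example 3: B = v^R(N) and c_i = v^R(N) - v^R(N \ {i}).
B = Fr(93, 4)
c = {1: Fr(9), 2: Fr(37, 4), 3: Fr(25, 4), 4: Fr(1)}

losses = cea(sum(c.values()) - B, {j: cj / 2 for j, cj in c.items()})
nucleolus = bankruptcy_nucleolus(B, c)
print(losses)
print(nucleolus)
```

Here the total of the claims is below twice the estate, so the second case of the formula applies: the shortfall \(2\frac{1}{4}\) is shared by CEA over the halved claims with \(\lambda =\frac{7}{12}\), and subtracting these losses from c gives the vector reported in Example 3.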

Notes

\(d|_S\in \mathbb {R}^S\) denotes the restricted vector of demands for agents in S with respect to \(d\in \mathbb {R}^N\); \(r|_S\) refers to \(\{r_j(z_j)\}_{j\in S}\).

References

Ambec S, Ehlers L (2008) Sharing a river among satiable agents. Games Econ Behav 64(1):35–50

Ambec S, Sprumont Y (2002) Sharing a river. J Econ Theory 107(2):453–462

Aumann R, Maschler M (1985) Game theoretic analysis of a bankruptcy problem from the Talmud. J Econ Theory 36:195–213

van den Brink R, Van der Laan G, Moes N (2012) Fair agreements for sharing international rivers with multiple springs and externalities. J Environ Econ Manag 63(3):388–403

Curiel I, Maschler M, Tijs S (1987) Bankruptcy games. Zeitschrift für Oper Res 31:143–159

Dinar S (2007) International water treaties: negotiation and cooperation along transboundary rivers. Taylor & Francis, Routledge

Grundel S, Borm P, Hamers H (2013) Resource allocation games: a compromise stable extension of bankruptcy games. Math Methods Oper Res 78(2):149–169

Kuhn HW, Tucker AW (1951) Nonlinear programming. University of California Press, Berkeley

O’Neill B (1982) A problem of rights arbitration from the Talmud. Math Soc Sci 2:345–371

Parrachino I, Dinar A, Patrone F (2006) Cooperative game theory and its application to natural, environmental, and water resource issues: 3. Application to water resources. Res Work Pap 1(1):1–46

Potters J, Tijs S (1994) On the locus of the nucleolus. In: Megiddo N (ed) Essays in game theory: in honor of Michael Maschler. Springer, Berlin, pp 193–203

Schmeidler D (1969) The nucleolus of a characteristic function game. SIAM J Appl Math 17:1163–1170

Wang Y (2011) Trading water along a river. Math Soc Sci 61(2):124–130

Young HP (1995) Equity, in theory and practice. Princeton University Press, Princeton

Appendix

Lemma 2

Let \((N,E,d,r)\in RA^N\). Then, v(N, E, d, r) is concave in E.

Proof

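Let \(A,B\ge 0\), such that \(\sum _{j\in N}d_j\ge A\) and \(\sum _{j\in N}d_j\ge B\). We will prove that for all \(\delta \in [0,1]\), it holds that

\[ v(N,\delta A+(1-\delta )B,d,r)\ge \delta v(N,A,d,r)+(1-\delta )v(N,B,d,r). \]

Let \(x^A\in F(N,A,d,r)\) be such that \(v(N,A,d,r)=\sum _{j\in N}r_j(x^A_j)\) and let \(x^B\in F(N,B,d,r)\) be such that \(v(N,B,d,r)=\sum _{j\in N}r_j(x^B_j)\). Then

\[ \begin{aligned} \delta v(N,A,d,r)+(1-\delta )v(N,B,d,r)&=\sum _{j\in N}\big (\delta r_j(x^A_j)+(1-\delta )r_j(x^B_j)\big )\\ &\le \sum _{j\in N}r_j\big (\delta x^A_j+(1-\delta )x^B_j\big )\\ &\le v(N,\delta A+(1-\delta )B,d,r). \end{aligned} \]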

The first inequality follows from concavity of \(r_j\). The second inequality is due to the fact that \(\{\delta x^A_i+(1-\delta )x^B_i\}_{i\in N}\in F(N,\delta A+(1-\delta )B,d,r)\). \(\square \)

Proof of Lemma 1

Let \(x^T\in X(T,D(T))\) and \(x^{T\cup U}\in X(T\cup U,D(T\cup U))\). Since \(v^R(S)>0\), we have \(D(S)>0\). Then

Equalities (1), (4), and (9) hold, since \(D(S)>0\), respectively, implies \(D(S\cup U)= D(S)+\sum _{j\in U}d_j\), \(D(T\cup U)=D(S)+\sum _{j\in U}d_j+\sum _{j\in T{\setminus } S}d_j\), and \(D(T)=D(S)+ \sum _{j\in T{\setminus } S}d_j\). Inequalities (2) and (8) follow by the fact that the maximum value decreases if an extra condition is involved in the optimization. Inequality (3) holds by Lemma 2. Equality (5) holds by the fact that \(D(T\cup U)=\sum _{j\in T\cup U}x_j^{T\cup U}\). Equalities (6) and (7) follow from Proposition 1. \(\square \)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Grundel, S., Borm, P. & Hamers, H. Resource allocation problems with concave reward functions. TOP 27, 37–54 (2019). https://doi.org/10.1007/s11750-018-0482-7


DOI: https://doi.org/10.1007/s11750-018-0482-7