Abstract

Many multipoint iterative methods without memory for solving non-linear equations in one variable are found in the literature. In particular, there are methods that provide fourth-order, eighth-order or sixteenth-order convergence using only, respectively, three, four or five function evaluations per iteration step, thus supporting the Kung-Traub conjecture on the optimal order of convergence. This paper shows how to find optimal high order root-finding iterative methods by means of a general scheme based in weight functions. In particular, we explicitly give an optimal thirty-second-order iterative method; as long as we know, an iterative method with that order of convergence has not been described before. Finally, we give a conjecture about optimal order multipoint iterative methods with weights.

Similar content being viewed by others

1 Introduction and Main Results

Solving nonlinear equations is a basic and extremely valuable tool in all fields of science and engineering. Given a function \(f: D\subset \mathbb {C} \rightarrow \mathbb {C}\) defined on a region D in the complex plane \(\mathbb {C}\), one of the most common methods for finding simple roots \(x^{*}\) of a nonlinear equation \(f(x)=0\) is Newton’s method (or Newton–Raphson method) which, starting at an initial guess \(x_0\), iterates by means of

Under suitable conditions, this sequence will converge to the root \(x^{*}\).

For an iterative method \(x_{n+1} = I_f(x_n)\) to find a root \(x^{*}\) of \(f(x) = 0\), an important concept is the order the convergence. We say that the method converges with order \(p > 1\) if, starting at suitable points near the root, we have

For instance, Newton’s method (1) has order 2, and Chebyshev’s method

has order 3. In principle, the larger is the order, the faster is the convergence, i.e., the less iterations are necessary to approximate the root with a desired precision. However, it is important to take into account the cost of each iteration step (measured by the number of evaluations of f and its derivative). This is done by defining the efficiency index. The efficiency index of an iterative method of order p requiring d function evaluations per iteration step is \(E(d,p) = \root d \of {p}\), see [23]. The unproved conjecture by Kung and Traub [18] states that a method which uses d evaluations could achieve, at most, a convergence order \(p = 2^{d-1}\). Methods that reach this bound, whose efficiency index is therefore \(E(d,2^{d-1}) = \root d \of {2^{d-1}}\), are known as optimal. Newton’s method has \(d=2\) and \(p=2\), so it is optimal. Chebyshev’s method has \(d=3\) and \(p=3\), so it is not optimal (there are methods that reach order \(p=4\) using just \(d=3\) evaluations).

Although order 2 (that is, Newton’s method) is in general enough for typical situations, high order methods are very useful for high precision computations, and the theoretical and practical interest of these methods are huge.

Considering Kung-Traub’s conjecture, many optimal two-point methods (3 function evaluations and order 4) and three-point methods (4 function evaluations and order 8) have been presented in the recent mathematical literature. In particular, the paper [7] considers twenty nine optimal eighth-order methods, i.e., their efficiency index is \(\root 4 \of {8} \simeq 1.68179\). Some optimal four-step methods (5 function evaluations and order 16) have been considered also; we will mention them later in this paper.

There are several techniques which can be used to increase the convergence order of an iterative method; see, for instance, [8, 16]. Let us start with an optimal (order 4) two-step iterative method for solving nonlinear equations \(f(x)=0\), namely

Adding an extra Newton evaluation we obtain the three-step scheme

which has order 8 (notice that if an iterative method \(x_{n+1} = F(x_n)\) of order \(\alpha \) is composed with an iterative method \(x_{n+1} = G(x_n)\) of order \(\beta \), the order of the new method \(x_{n+1} = G(F(x_n))\) is \(\alpha \beta \)), but it requires five functional evaluations so it is not optimal.

The idea for obtaining an optimal method is to apply suitable approximations of \(f'(z_n)\) to avoid an evaluation without loss of order. To this end, we can approximate

with \(t_n = \frac{f(y_n)}{f(x_n)}\) and \(s_n = \frac{f(z_n)}{f(y_n)}\) for a suitable function W, which is often called weight function. Thus, writing \({f(x_n)^2}/{(f(x_n)-f(y_n))^2}\) in the central part of Eq. (3) as \(1/(1-t_n)^2\), we have

For instance, this has been analyzed in the interesting paper [8], although not for general W(t, s) but for 3-variable weights \(W(t,s,u) = H(t,s)G(u)\) and \(W(t,s,u) = H(t,s)+G(u)\) with \(u=ts\).

We can put a weight function \(Q(t_n)\) instead of \(1/(1-t_n)^2\) at the central part of Eq. (4) and look for functions Q(t) and W(t, s) for which

is an optimal 4th order method and

is an optimal 8th order method.

At this point, we can prove the following:

Theorem 1

Let \(f:D\subset \mathbb {C} \rightarrow \mathbb {C}\) be a nine times continuously differentiable function with a simple zero \(x^{*}\in D\), and let \(Q:\mathbb {C} \rightarrow \mathbb {C}\) and \(W:\mathbb {C}^2 \rightarrow \mathbb {C}\) be sufficiently differentiable functions on a neighborhood of the origin, with \(Q(0) = 1\) and \(Q'(0) = 2\). If the initial point \(x_{0}\) is close enough to \(x^{*}\), then the method defined by

with \(t_n = {f(y_n)}/{f(x_n)}\) and \(s_n = {f(z_n)}/{f(y_n)}\), converges to \(x^{*}\) with order 8 if the following conditions hold:

We postpone the proof of this theorem to Sect. 4. Of course, as in the case of [8, 19] or [21], the use of symbolic computation packages is of great help to get the proofs (Mathematica version 12 has been used in this paper).

A very simple pair of functions satisfying the conditions of Theorem 1 is \(Q(t) = 1+2t\),

Other choices for Q are \(Q(t) = 1/(1-2t) = \sum _{k=0}^{\infty } 2^kt^k\) or, for \(a \in \mathbb {C}\), the parametric examples

for which suitable functions W can be easily found.

These functions Q and W are just mere examples. Choosing functions Q and W which include another kind or parameters is not difficult, and it allows to create families of parameter-dependent iteration methods, all of them with convergence of order 8, 4 evaluations, and efficiency index \(\root 4 \of {8}\simeq 1.68179\). We can take the Taylor expansions of Q and W and introduce parameters in some terms not involved in (7). In particular, most of the methods of the survey [7] have convergence of order 8 and could be analyzed (with suitable adjustments of the notation) as particular cases of Theorem 1.

Of course, we can try to do the same again. The method

has order 16 with 6 evaluations at every step. Then, the idea is to approximate, in the equation which gives \(x_{n+1}\),

with \(t_n = \frac{f(y_n)}{f(x_n)}\), \(s_n = \frac{f(z_n)}{f(y_n)}\) and \(u_n = \frac{f(w_n)}{f(z_n)}\) without loss of the sixteenth order. In this way, we would have an optimal 16th iterative method, that is, with 5 evaluations at every step and efficiency index \(\root 5 \of {16}\simeq 1.74110\). Actually, there are not many optimal 16th order iterative methods in the mathematical literature, but some of them can be found in [2, 4,5,6, 10, 11, 19, 20, 26,27,28,29,30].

With our strategy of using a weight H(t, s, u), this can be done as follows. We choose the simplest weights Q and W satisfying the conditions (7), i.e., with all the derivatives not involved in (7) equal to 0. This avoids unnecessary complications in the notation and in the computational effort for finding the relations among the derivatives of Q, W, and H.

Let us write the partial derivatives of H(t, s, u) at the origin as

Theorem 2

Let \(f : D\subset \mathbb {C} \rightarrow \mathbb {C}\) be a 17 times continuously differentiable function with a simple zero \(x^{*}\in D\), and let

and \(H:\mathbb {C}^3 \rightarrow \mathbb {C}\) a sufficiently differentiable function on a neighborhood of the origin. If the initial point \(x_{0}\) is close enough to \(x^{*}\), then the method defined by

with \(t_n = {f(y_n)}/{f(x_n)}\), \(s_n = {f(z_n)}/{f(y_n)}\) and \(u_n = {f(w_n)}/{f(z_n)}\), converges to \(x^{*}\) with order 16 if the following conditions hold:

In this theorem, if we take \(H_{i,j,k} = 0\) for all the derivatives not involved in (11) and use the 3-variable Taylor expansion

we get the polynomial

As commented above, several other sixteenth-order iterative methods appear in the mathematical literature. But, as long as we know, no explicitly stated thirty-second-order methods have been given; actually, optimal \(2^d\) methods have been proved to exist for every positive integer d (see [18, 24, 25]), but explicit formulas in closed form have not been provided. Thus, giving a simple thirty-second-order method was a sort of a challenge.

In this paper, the idea is to modify (10) taking an additional intermediate point \(h_n\) instead of \(x_{n+1}\) and then compute \(x_{n+1}\) using a new weight function J defined on a neighborhood of the origin (in \(\mathbb {C}^4\)).

With this aim, we take Q and W as in (9), and H as in (12), which are the “simplest” weights to reach order 16. Let us write the partial derivatives of J(t, s, u, v) at the origin as

Then, we have the following:

Theorem 3

Let \(f : D\subset \mathbb {C} \rightarrow \mathbb {C}\) be a 33 times continuously differentiable function with a simple zero \(x^{*}\in D\), let Q and W as in (9), H as in (12) and \(J:\mathbb {C}^4 \rightarrow \mathbb {C}\) a sufficiently differentiable function in a neighborhood of the origin. If the initial point \(x_{0}\) is sufficiently close to \(x^{*}\), then the method defined by

with \(t_n = {f(y_n)}/{f(x_n)}\), \(s_n = {f(z_n)}/{f(y_n)}\), \(u_n = {f(w_n)}/{f(z_n)}\) and \(v_n = {f(h_n)}/{f(w_n)}\), converges to \(x^{*}\) with order 32 if the following conditions hold:

The proof of this theorem is postponed to Sect. 5. To be honest, we must acknowledge that we do not have a compete mathematical proof, but a rather satisfactory heuristic proof with many computational evidences.

As in the case of (12), if we take \(J_{i,j,k,l} = 0\) for all the partial derivatives not involved in Theorem 3 and use the 4-variable Taylor expansion

we get a polynomial J(t, s, u, v) with integer coefficients.

In general, for finding the roots of a function \(f : D\subset \mathbb {C} \rightarrow \mathbb {C}\) we can think about a multipoint method which, after starting at an initial guess \(x_0\), computes \(x_{n+1}\) from \(x_{n}\) by means of a \((d+1)\)-point iterative scheme with weights of the form

with \(t^{[i]}_n = f(x^{[i]}_n)/f(x^{[i-1]}_n)\), \(i=1,\dots ,d\), and where \(x^{[0]}_n = x_n\). Here, the weights \(W^{[i]}\) must be functions \(W^{[i]} : \mathbb {C}^i \rightarrow \mathbb {C}\) sufficiently differentiable in a neighborhood of the origin.

In this scheme, the case \(d=2\) corresponds to Theorem 1 (4 function evaluations per iteration step and order 8), \(d=3\) corresponds to Theorem 2 (5 evaluations and order 16) and \(d=4\) corresponds to Theorem 3 (6 evaluations and order 32). In general, (14) has \(d+2\) evaluations of f or \(f'\) per iteration step. Then, according to the Kung-Traub conjecture [18], the optimal order of convergence could be, at most, \(2^{d+1}\), and the efficiency index would be \(2^{(d+1)/(d+2)}\).

The papers [18, 24, 25] prove the existence of several families of multipoint optimal methods which attain the convergence order \(2^{d+1}\) using \(d+2\) function evaluations per iteration, but none of these families are based on weight functions (a typical tool for proving the existence is the use of inverse interpolatory polynomial or Hermite interpolation polynomial).

In Theorems 2 and 3, the equations involved in the computation of the Taylor coefficients of the weights are rather complicated, but they always have integer solutions. Based on that, we conjecture the existence not only of families of optimal multipoint methods based on weights, but the following:

Conjecture

For every positive integer d there exists an optimal multipoint method of the form (14) (that is, with order \(2^{d+1}\)) where the weights \(W^{[i]}\) are polynomials (in i variables) with coefficients in \(\mathbb {Z}\).

It is clearly impossible to prove this conjecture using the computer-aided proofs of this paper (Sects. 4 and 5).

Besides this introductory section and the above mentioned sections with proofs, this paper has two additional sections. In Sect. 2 we experimentally measure the 32nd order of convergence given in Theorem 3 by means of several tests; this is always an important point for preventing errors in the proofs or in the numerical implementations. Finally, in Sect. 3 we give a couple of examples showing how the process to increase the order of convergence influences the basins of attraction of the roots for a common nonlinear equation \(f(x)=0\).

Let us finally mention that, in this paper, we have not tried to find a practical method to solve nonlinear equations, or compare its advantages with respect to the many other numerical methods published in the mathematical literature. Instead, the idea has been to explore a theoretical general scheme to find optimal methods (in the Kung-Traub sense), to compare its dynamical behavior through some examples, and to use it for finding the first 32nd optimal method, a nice challenge.

2 Experimental Checking of the Order of Convergence

Given an iterative method, computing experimentally the order of convergence in several examples is a good test to detect theoretical errors in the deduction of the method or practical errors in the implementation of the method in a computer. Using the definition (2) to compute experimentally the order of convergence is not practical; that is why suitable approximations were introduced.

The computational order of convergence (COC), defined in [32], is given by the formula

When \(x^{*}\) is unknown, which is usual in practice (unless the method is checked in a equation whose root is already known), we can use the approximated computational order of convergence (ACOC), defined in [9], as

For a comparison among several convergence orders, see [12].

We are going to compute both COC and ACOC for checking the accuracy of our 32nd-order iterative method (13). This is particularly important in this case, because we do not have a complete proof of Theorem 3 (see more details in Sect. 5). If our numerical experiments find that the method has order 32 for a suitable collection of nonlinear equations, then we will have good empirical evidence in favour of thinking that the result is correct.

We make this kind of numerical experiments using the four test functions \(f_j(x)\), \(j=1,\dots ,4\), given in Table 1. The mathematical literature contains a big amount of examples; for instance, the authors of [14] collected, from previous papers, 125 test functions including our \(f_2\) and \(f_4\). In each case, we arrive at the root \(x^{*}\) starting from the point \(x_0\).

The results of these numerical experiments can be found in Table 2. Notice that, to estimate the COC and the ACOC, it has been enough to use \(n=3\) in (15) and (16) to get excellent approximations of the order of convergence 32 stated in Theorem 3. To obtain high accuracy and to avoid the loss of significant digits, we have employed multi-precision arithmetic with 100, 000 significant decimal digits in the programming package Mathematica.

3 Dynamics of the Methods

Given an iterative scheme F defined in the whole complex plane and a point \(x_0 \in \mathbb {C}\), the orbit of \(x_0\) is the sequence \({\text {orb}}(x_0) = \{x_n\}_{n=0}^{\infty }\) defined by \(x_{n+1} = F(x_n)\). The basin of attraction of \(x^{*} \in \mathbb {C}\) is the set

For a polynomial f and an iterative scheme F for solving the nonlinear equation \(f(x)=0\), it is well known that the boundary among the basins of attraction of different roots of f shows, in general, an intricate fractal structure. Assigning a color to each basin of attraction, we usually get very nice pictures illustrating the behavior of the iterative method F.

In the case of several iterative methods for a common problem \(f(x) = 0\), the visual comparison of the graphics corresponding to the different methods allow to observe some aspects of the behavior of these methods. This has been widely used at least since the paper [31] was published in 2002, in such a way that it is common to use the pictures of the basins of attraction to graphically compare these methods. More recent papers carrying out this kind of studies are [1, 3, 13,14,15, 22]; and, of course, we cannot forget the book [17] and its nice fractal images.

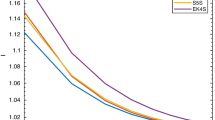

In particular, here we have root-finding iterative methods of order 2 (Newton’s method (1)), order 4 (Eq. (5) with \(Q(t)=1+2t\)), order 8 (Theorem 1), order 16 (Theorem 2) and order 32 (Theorem 3). The aim of this section is to visually compare them when they are applied to the same problem \(f(x)=0\). In practice, let us observe that, although high order methods can be useful for high precision computation, they are much more demanding with respect to the initial point to reach a root of the function f. (Thus, if one wants to use a high order iterative method to obtain a root with a high precision, a good practical idea is to previously approximate the root by applying several initial steps with Newton’s method.)

To illustrate the behavior of the five methods, we use a \(600 \times 600\) grid of the square \(D = [-3,3]\times [-3,3] \subset \mathbb {C}\) and assign a color to each point \(x_0\in D\) according to the simple root to which the corresponding orbit of the iterative method starting at \(x_0\) converges. We mark the point as black if the orbit does not converge to a root in the sense that after at most 25 iterations its distance to any of the roots is larger than \(10^{-3}\). In this way, we distinguish the attraction basins by their color.

We have done this comparison with a couple of functions. First, we have chosen the typical polynomial

of degree three, whose three complex roots are

The pictures in Fig. 1 present the corresponding basins of attraction. Independently of the beauty of the graphics, the situation is as expected: increasing the order of convergence changes the aspect (in the dynamics) of the picture, decreasing the size of the basins of attractions.

Secondly, we have chosen the polynomial

of degree ten, whose ten complex roots are

The basins of attraction are shown in Fig. 2.

4 Proof of Theorems 1 and 2

Proof of Theorem 1

Let us write \(Q_i = \frac{d^iG(t)}{dt^i}\Big |_{t=0}\) and \(W_{i,j} = \frac{\partial ^{i+j} W(t,s)}{\partial t^i \,\partial s^j}\Big |_{(t,s)=(0,0)}\). Then, the conditions in (7) become

Let \(e_{n} := x_{n}-x^{*}\), \(e_{n,y} := y_{n}-x^{*}\), \(e_{n,z} := z_{n}-x^{*}\), and \(c_{\ell } := \frac{f^{(\ell )}(x^{*})}{\ell !\,f'(x^{*})}\) for \(n\in \mathbb {N}\) (recall that \(f'(x^{*}) \ne 0\) because the root \(x^{*}\) is simple). Since \(f(x^{*})=0\), the Taylor expansion of f at \(x^{*}\) yields

and

Therefore, from (17) and (18),

and

For \(f(y_n)\), we have the representation

Then, we obtain from (17) and (19) that

Now, let us use

Then, the central Eq. in (6) gives

where we have already used that \(Q_0=1\) and \(Q_1=2\) to cancel the coefficients of \(e_n^2\) and \(e_n^3\) (this is equivalent to saying that the first two lines of (6), with \(x_{n+1}\) in the place of \(z_n\), provide a fourth-order method; by this reason, we have already fixed \(Q(0) = 1\) and \(Q'(0) = 2\) in the hypothesis of the theorem).

Using the representation

To use the third Eq. in (6), let us expand W at (0, 0), that is,

Substituting these expressions into (6) gives

with

and some expressions for \(R_5\), \(R_6\), \(R_7\) and \(R_8\) that will be considered successively.

By taking

we get \(R_4=0\). Assuming this value, we have

(without the assumption \(W_{0,0}=1\), the expression for \(R_5\) is much more complicated, and the same happens in what follows). The choice

leads to \(R_5=0\) and

Similarly, taking

gives \(R_6=0\) and

Finally, the choices

result in \(R_7 = 0\) and thus the convergence is (at least) of order eight.

Actually, a close look at the representation

shows that it is no longer possible to obtain \(R_8=0\) in general independently of the values \(c_{\ell }\), so the order eight cannot be improved in general. \(\square \)

Proof of Theorem 2

In a mathematical sense, the proof can be done like in Theorem 1, with an intermediate additional point \(w_n\) (instead of \(x_{n+1}\)) in every step of the iterative method, and with the use of the additional weight H to compute \(x_{n+1}\). Of course, we must take the expansions (17) and (18) (and similar expansions) up to \(O(e_n^{17})\), but this is not a significative mathematical difference.

However, from a computational point of view, the computations involved are much larger and demanding of memory and computer time (and the same can be said about the space needed to explain them in a paper). To minimize this and simplify the expressions which appear in the process, we fix Q and W as in (9) (that is, taking all the derivatives not involved in (7) as 0).

Finally, we must have

and find the values of \(H_{i,j,k}\) which make \(R_{\ell } = 0\) for \(\ell =8,\ldots ,15\). Anyway, the mathematical process is very similar, so for the sake of brevity we omit the details.

In practice, it is a good idea to do it in several steps. For instance, we can take (17), (18) and similar expressions only up to \(O(e_n^{10})\), and follow the iterative method only to find the \(H_{i,j,k}\) which make \(R_{8} = 0\), and so on. This strategy requires less computational power, but it allows to proof that (11) guarantees order 16. (Actually, these conditions are also necessary, because otherwise it is impossible to get \(R_8 = \cdots = R_{15} = 0\) independently of the \(c_{\ell }\).) \(\square \)

5 Heuristic proof of Theorem 3

As in Sect. 4, we can try to start taking

with general coefficients \(c_{\ell }\). Then, the original purpose can be to follow a similar process to achieve

and to find the values of \(J_{i,j,k,l}\) which make \(R_{\ell } = 0\) for \(\ell =16,\ldots ,31\). But, now, the computational complexity of the process is much greater than in the proofs of Theorems 1 and 2, and it has not been possible to follow the corresponding symbolic procedure to find the equations \(R_{\ell } = 0\) which we want to solve. This also happens if we try to do it step by step, firstly taking (21) only up to \(O(e_n^{18})\) to find the equation \(R_{16}=0\), and so on.

The idea is, then, to try to simplify the problem, analyzing cases with only a few of the coefficients \(c_{\ell }\) not null. Recalling that \(c_{\ell } := \frac{f^{(\ell )}(x^{*})}{\ell !\,f'(x^{*})}\) with \(f'(x^{*}) \ne 0\), and because we can assume that \(x^{*}=0\), this is equivalent to studying Theorem 3 for functions of the form

and other simple cases (binomials or trinomials). With this kind of simplifications, the symbolic procedure using the weights Q, W, H (prefixed) and a generic weight J with partial derivatives \(J_{i,j,k,l}\) is already computationaly possible.

Now, let us imagine that we follow the procedure with the first example in (22) (that is, only with \(c_2\ne 0\)). Then, we find \(R_{16}\) which will be an expression involving \(c_2\) and some of the \(J_{i,j,k,l}\) (this kind of expressions are not simple, so we do not write it here, but the reader can imagine some like the descriptions of the \(R_{\ell }\) in the proof of Theorem 1). We want to solve the equation \(R_{16}=0\) assigning to the involved \(J_{i,j,k,l}\) values independent of \(c_2\); this gives one or several expressions of combinations of \(J_{i,j,k,l}\)’s that must be null (something like, for instance, the coefficients of \(c_2^2\) and \(c_3\) in \(R_7\), in the proof of Theorem 1). If these equations give a uniquely determined value for a \(J_{i,j,k,l}\), we assign to it this value (of course, this becomes one the conditions in the list of values of Theorem 3). If there still were in \(R_{16}\) some \(J_{i,j,k,l}\) without an assigned value, we could repeat the procedure with another of the examples in (22), to get more combinations of \(J_{i,j,k,l}\)’s that must be null.

Once we have done it with a reasonable quantity of examples like those in (22), we fix a reasonable quantity of coefficients \(J_{i,j,k,l}\) that we can hope are enough to get order of convergence 17 for a general function f. With this procedure, we do not have a mathematical proof for it, but we can check the order using test functions like those of Table 1; if the procedure with the already assigned \(J_{i,j,k,l}\)’s gives COC and ACOC equal to 17 (something like in Table 2), we can assume that we have already succeeded in identifying the conditions for order 17; otherwise, we repeat the process with more examples like in (22) to fix more \(J_{i,j,k,l}\)’s.

Once the previous process has been done for the order of convergence 17, we do it again, that is, we identify a new set of \(J_{i,j,k,l}\)’s to get order 18, and we check it by means of test functions. And so on, up to reach order of convergence 32.

In this way, some weeks of symbolic experiments with Mathematica have allowed to find the suitable values for the \(J_{i,j,k,l}\)’s that appear in Theorem 3, a total of 166 conditions! This has been the most difficult task.

Then, we have not only checked that these conditions give order 32, but also that they are necessary. With this aim, we have checked what happens if we compute COC and ACOC after changing only one of the \(J_{i,j,k,l}\)’s in Theorem 3; in any of those experiments, it turns out that the corresponding COC and ACOC are \(\le 31\). On the other hand, we have also checked what happens if we assign a not null value to any of the \(J_{i,j,k,l}\)’s not involved in Theorem 3 (up to a reasonable degree in the subindex); we have seen that, in all those cases, the corresponding COC and ACOC remain 32, so it is not necessary to fix more \(J_{i,j,k,l}\)’s.

Thus, this is not a complete mathematical proof, but the result is experimentally very well justified and it is not plausible to think that it can be incorrect. (Actually, we have not only used the test functions detailed in Table 1 to experimentally check the order of convergence, but some other ones, which is not necessary to detail.)

Data Availibility

Not applicable.

References

Amat, S., Busquier, S., Plaza, S.: Review of some iterative root-finding methods from a dynamical point of view. Sci. Ser. A Math. Sci. (N.S.) 10, 3–35 (2004)

Babajee, D.K.R., Thukral, R.: On a \(4\)-point sixteenth-order King family of iterative methods for solving nonlinear equations. Int. J. Math. Math. Sci. 2012, 13 (2012)

Basto, M., Abreu, T., Semiao, V., Calheiros, F.L.: Convergence and dynamics of structurally identical root finding methods. Appl. Numer. Math. 120, 257–269 (2017)

Behl, R., Amat, S., Magreñán, Á.A., Motsa, S.S.: An efficient optimal family of sixteenth order methods for nonlinear models. J. Comput. Appl. Math. 354, 271–285 (2019)

Behl, R., Cordero, A., Motsa, S.S., Torregrosa, J.R.: A new efficient and optimal sixteenth-order scheme for simple roots of nonlinear equations. Bull. Math. Soc. Sci. Math. Roumanie (N.S.) 60(108), no. 2, 127–140 (2017)

Behl, R., Gutiérrez, J.M., Argyros, I.K., Alshomrani, A.S.: Efficient optimal families of higher-order iterative methods with local convergence. Appl. Anal. Discrete Math. 14(3), 729–753 (2020)

Chun, C., Neta, B.: Comparative study of eighth-order methods for finding simple roots of nonlinear equations. Numer. Algorithms 74(4), 1169–1201 (2017)

Cordero, A., Lotfi, T., Mahdiani, K., Torregrosa, J.R.: Two optimal general classes of iterative methods with eighth-order. Acta Appl. Math. 134, 61–74 (2014)

Cordero, A., Torregrosa, J.R.: Variants of Newton’s method using fifth-order quadrature formulas. Appl. Math. Comput. 190, 686–698 (2007)

Geum, Y.H., Kim, Y.I.: A biparametric family of four-step sixteenth-order root-finding methods with the optimal efficiency index. Appl. Math. Lett. 24(8), 1336–1342 (2011)

Geum, Y.H., Kim, Y.I.: A biparametric family of optimally convergent sixteenth-order multipoint methods with their fourth-step weighting function as a sum of a rational and a generic two-variable function. J. Comput. Appl. Math. 235(10), 3178–3188 (2011)

Grau-Sánchez, M., Noguera, M., Gutiérrez, J.M.: On some computational orders of convergence. Appl. Math. Lett. 23(4), 472–478 (2010)

Gutiérrez, J.M., Varona, J.L.: Superattracting extraneous fixed points and \(n\)-cycles for Chebyshev’s method on cubic polynomials. Qual. Theory Dyn. Syst. 19(2), 23 (2020)

Herceg, D., Herceg, D.: Eighth order family of iterative methods for nonlinear equations and their basins of attraction. J. Comput. Appl. Math. 343, 458–480 (2018)

Herceg, D., Petković, I.: Computer visualization and dynamic study of new families of root-solvers. J. Comput. Appl. Math. 401, 16 (2022)

Hueso, J.L., Martínez, E., Teruel, C.: Multipoint efficient iterative methods and the dynamics of Ostrowski’s method. Int. J. Comput. Math. 96(9), 1687–1701 (2019)

Kalantari, B.: Polynomial Root-Finding and Polynomiography. World Scientific, Singapore (2009)

Kung, H.T., Traub, J.F.: Optimal order of one-point and multipoint iteration. J. Assoc. Comput. Mach. 21, 634–651 (1974)

Lotfi, T., Soleymani, F., Sharifi, S., Shateyi, S., Khaksar Haghani, F.: Multipoint iterative methods for finding all the simple zeros in an interval. J. Appl. Math. 2014, 13 (2014)

Maroju, P., Behl, R., Motsa, S.S.: Some novel and optimal families of King’s method with eighth and sixteenth-order of convergence. J. Comput. Appl. Math. 318, 136–148 (2017)

Matthies, G., Salimi, M., Sharifi, S., Varona, J.L.: An optimal three-point eighth-order iterative method without memory for solving nonlinear equations with its dynamics. Jpn. J. Ind. Appl. Math. 33(3), 751–766 (2016)

Neta, B., Scott, M., Chun, C.: Basins of attraction for several methods to find simple roots of nonlinear equations. Appl. Math. Comput. 218(21), 10548–10556 (2012)

Ostrowski, A.M.: Solution of Equations and Systems of Equations, 2nd edn. Academic Press, New York (1966)

Petković, M.S.: On a general class of multipoint root-finding methods of high computational efficiency. SIAM J. Numer. Anal. 47(6), 4402–4414 (2010)

Petković, M.S., Petković, L.D.: Families of optimal multipoint methods for solving nonlinear equations: a survey. Appl. Anal. Discrete Math. 4(1), 1–22 (2010)

Sharma, J.R., Argyros, I.K., Kumar, D.: On a general class of optimal order multipoint methods for solving nonlinear equations. J. Math. Anal. Appl. 449(2), 994–1014 (2017)

Sharma, J.R., Arora, H.: Efficient Ostrowski-like methods of optimal eighth and sixteenth order convergence and their dynamics. Afrika Matematika 30(5–6), 921–941 (2019)

Sharma, J.R., Guha, R.K., Gupta, P.: Improved King’s methods with optimal order of convergence based on rational approximations. Appl. Math. Lett. 26(4), 473–480 (2013)

Sharma, J.R., Kumar, S.: Efficient methods of optimal eighth and sixteenth order convergence for solving nonlinear equations. SeMA J. 75(2), 229–253 (2018)

Soleymani, F., Shateyi, S., Salmani, H.: Computing simple roots by an optimal sixteenth-order class. J. Appl. Math. 2012, 13 (2012)

Varona, J.L.: Graphic and numerical comparison between iterative methods. Math. Intelligencer 24(1), 37–46 (2002)

Weerakoon, S., Fernando, T.G.I.: A variant of Newton’s method with accelerated third-order convergence. Appl. Math. Lett. 13(8), 87–93 (2000)

Acknowledgements

The author is supported by grant PGC2018-096504-B-C32 from MINECO/FEDER.

Author information

Authors and Affiliations

Contributions

Not applicable.

Corresponding author

Ethics declarations

Conflict of interest

The author declares that they have no conflict of interest.

Code Availability

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Varona, J.L. An Optimal Thirty-Second-Order Iterative Method for Solving Nonlinear Equations and a Conjecture. Qual. Theory Dyn. Syst. 21, 39 (2022). https://doi.org/10.1007/s12346-022-00572-3

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s12346-022-00572-3

Keywords

- Multipoint iterative methods

- Simple root

- Order of convergence

- Kung and Traub’s conjecture

- Basins of attraction